Gazebo harmonic nodes

Index

- Helping with gazebo harmonic

- RA #issue-2717

- Testing Digit-Classifier

- Testing Unibotics

- Trying to fix reset error

Helping with gazebo harmonic

My first contact with this task was to investigate the changes introduced by Gazebo Harmonic and the problems of migrating to it on ROS 2 Humble. After that, I looked into the problem that had already been encountered: resetting the exercise does not work properly.

Small bug solved:

    x = t.transform.translation.x
    y = t.transform.translation.y
    z = t.transform.translation.z

When I tried to build a mini RADI to start testing, my computer crashed.

    sudo ./build.sh -f -a prajyot/migration_to_gz_harmonic -i dduro2020/test_harmonic -m prajyot/migration_to_gz_harmonic -r humble -t test_gz_harmonic

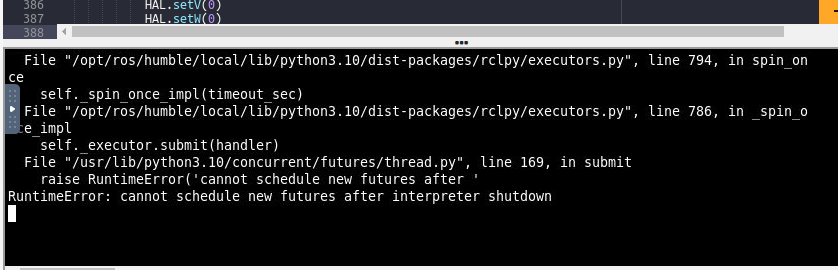

Trying to fix reset error

When you enter the exercise, several nodes start running, all of them named /drone0… Once you press run, a new node is launched: the droneWrapper object node. I tried creating a shutdown service for the node, killing it manually, and killing it by force with kill; nothing worked. Admittedly, the service never worked properly for me. You can take a look at my branch: https://github.com/JdeRobot/RoboticsInfrastructure/blob/dduro2020/test_harmonic/jderobot_drones/jderobot_drones/drone_wrapper.py

The manager.py looks like this:

    def reset_sim(self):
        if "noetic" in str(self.ros_version):
            rosservice.call_service("/gazebo/reset_world", [])
        elif self.visualization_type == "gzsim_rae":
            # self.call_gzservice("$(gz service -l | grep '^/world/\w*/control$')","gz.msgs.WorldControl","gz.msgs.Boolean","3000","reset: {all: true}")
            self.call_gzservice("$(gz service -l | grep '^/world/\w*/set_pose$')","gz.msgs.Pose","gz.msgs.Boolean","3000","name: \"drone0\", position: {x: 0, y: 0, z: 1.375}")
        else:
            self.call_service("/reset_world", "std_srvs/srv/Empty")

        print("INFO: KILLING NODE")
        self.call_service("/drone0_interface/shutdown", "std_srvs/srv/Trigger")
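
To make the `gzsim_rae` branch easier to follow, here is a minimal sketch of how the five arguments passed to `call_gzservice` could map onto a `gz service` command line. The real implementation of `call_gzservice` is not shown in this post, so the helper name `build_gz_service_command` and the world name `default` are assumptions made only for illustration. The flags `-s`, `--reqtype`, `--reptype`, `--timeout` and `--req` are the ones the `gz service` CLI accepts.

```python
import shlex


def build_gz_service_command(service, reqtype, reptype, timeout_ms, request):
    """Assemble a `gz service` call as one shell command string.

    Hypothetical helper, not the actual call_gzservice from manager.py.
    `service` is left unquoted on purpose so that a `$(...)` substitution,
    like the one used in reset_sim, would still be expanded by the shell.
    """
    return " ".join([
        "gz", "service",
        "-s", service,
        "--reqtype", reqtype,
        "--reptype", reptype,
        "--timeout", str(timeout_ms),
        # Quote the request so spaces, braces and double quotes survive the shell.
        "--req", shlex.quote(request),
    ])


cmd = build_gz_service_command(
    "/world/default/set_pose",  # assumed world name, for illustration only
    "gz.msgs.Pose",
    "gz.msgs.Boolean",
    3000,
    'name: "drone0", position: {x: 0, y: 0, z: 1.375}',
)
print(cmd)
```

The sketch only builds and prints the command; it does not run it. Note that moving the drone back with `set_pose` resets its position only, whereas the commented-out `control` call with `reset: {all: true}` would ask Gazebo to reset the whole world.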

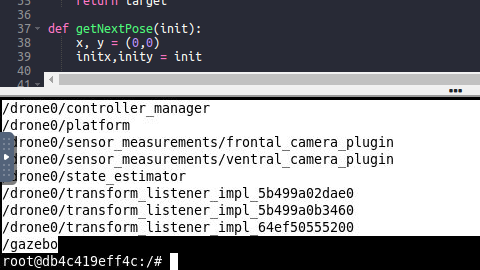

The node that is created is called /drone0/drone0_interface. The tests I ran after I managed to kill it manually showed that, for some reason, the state machine error was still present. Two more nodes may be interfering: /drone0/state_estimator and /drone0/controller_manager. I killed both, and they were instantly recreated with different PIDs. I do not yet understand why.

Picture of the nodes that are launched as soon as you enter the exercise. Note that the /drone0/drone0_interface node is not present because it is only created once you press run; in fact, it is HAL.py that creates it.

RA #issue-2717

The main problem here was that the developer script launched the Docker container and then blocked while it was running. Pressing Ctrl+C therefore killed both the script and the container, so the last lines of the script (written to clean up what the container leaves behind) never ran. A patch was created that cleaned up any stalled container before starting a new one, but this still didn't fix the main bug.

To fix it, we added a trap that catches the Ctrl+C interrupt signal and runs a function that cleans up the containers.

    # Function to clean up the containers
    cleanup() {
        echo "Cleaning up..."
        if [ "$nvidia" = "true" ]; then
            docker compose --compatibility down
        else
            docker compose down
        fi
        rm docker-compose.yaml
    }

    # Set up trap to catch interrupt signal (Ctrl+C) and execute cleanup function
    trap 'cleanup' INT
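
For comparison, the same clean-up-on-interrupt idea can be sketched in Python, where Ctrl+C surfaces as a `KeyboardInterrupt` in the main thread. This is not part of the developer script: `run_with_cleanup`, `fake_launch` and `fake_teardown` are hypothetical names, and the two fake functions only stand in for the blocking container launch and for `docker compose down` followed by removing `docker-compose.yaml`.

```python
def run_with_cleanup(work, cleanup):
    """Run work() and always call cleanup(), even if Ctrl+C interrupts it."""
    try:
        work()
    except KeyboardInterrupt:
        # Python turns SIGINT (Ctrl+C) into KeyboardInterrupt in the main thread.
        print("Cleaning up...")
    finally:
        # Runs on normal exit and on interrupt alike.
        cleanup()


events = []


def fake_launch():
    # Stand-in for the blocking container launch; simulates the user pressing Ctrl+C.
    events.append("launch")
    raise KeyboardInterrupt


def fake_teardown():
    # Stand-in for `docker compose down` and removing docker-compose.yaml.
    events.append("teardown")


run_with_cleanup(fake_launch, fake_teardown)
print(events)
```

The key point in both versions is that the cleanup is registered before the blocking call starts, so an interrupt can no longer skip it.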

Testing Digit-Classifier

After building the image, the exercise still does not work because of some errors.

Testing Unibotics

The tests followed the Unibotics instructions: after downloading the 'latest' rb image, the Docker container was launched and the tests were run. Most of the exercises worked correctly, but the follow person exercise had an error.

In the end, a new image had to be generated because the latest image had an error.