Coding Period: Week 8

Preliminaries

So far, we have explored a variety of optimization strategies available in the TensorFlow framework. Last week, I aggregated all the results and benchmarked the models in simulation. For the rest of the coding period, we decided to move on to PyTorch optimization strategies, so that the project offers optimization methods to users of both TensorFlow and PyTorch. Additionally, I tried to benchmark the previous TF-TRT models on other circuits, such as Montreal. Finally, I collected all the results on a single new page, which I will keep updating with new results.

Objectives

- Research optimization strategies in PyTorch

- Prepare a PyTorch script for benchmarking optimized models

- Implement dynamic range quantization

- Run TF-TRT simulations on additional circuits

- Create a separate page for all results

Related Issues and Pull Requests

Related to the BehaviorMetrics repository:

Code repository:

Important links

- Results page - https://theroboticsclub.github.io/gsoc2022-Nikhil_Paliwal/gsoc/Results-Summary/

- BehaviorMetrics simulations - https://drive.google.com/drive/folders/1ovjuWjSy-ea7YtgnaSsgVsHnbo0HJY1A?usp=sharing

- Trained weights - https://drive.google.com/drive/folders/1j2nnmfvRdQF5Ypfv1p3QF2p2dpNbXzkt?usp=sharing

Execution

Available strategies and guide

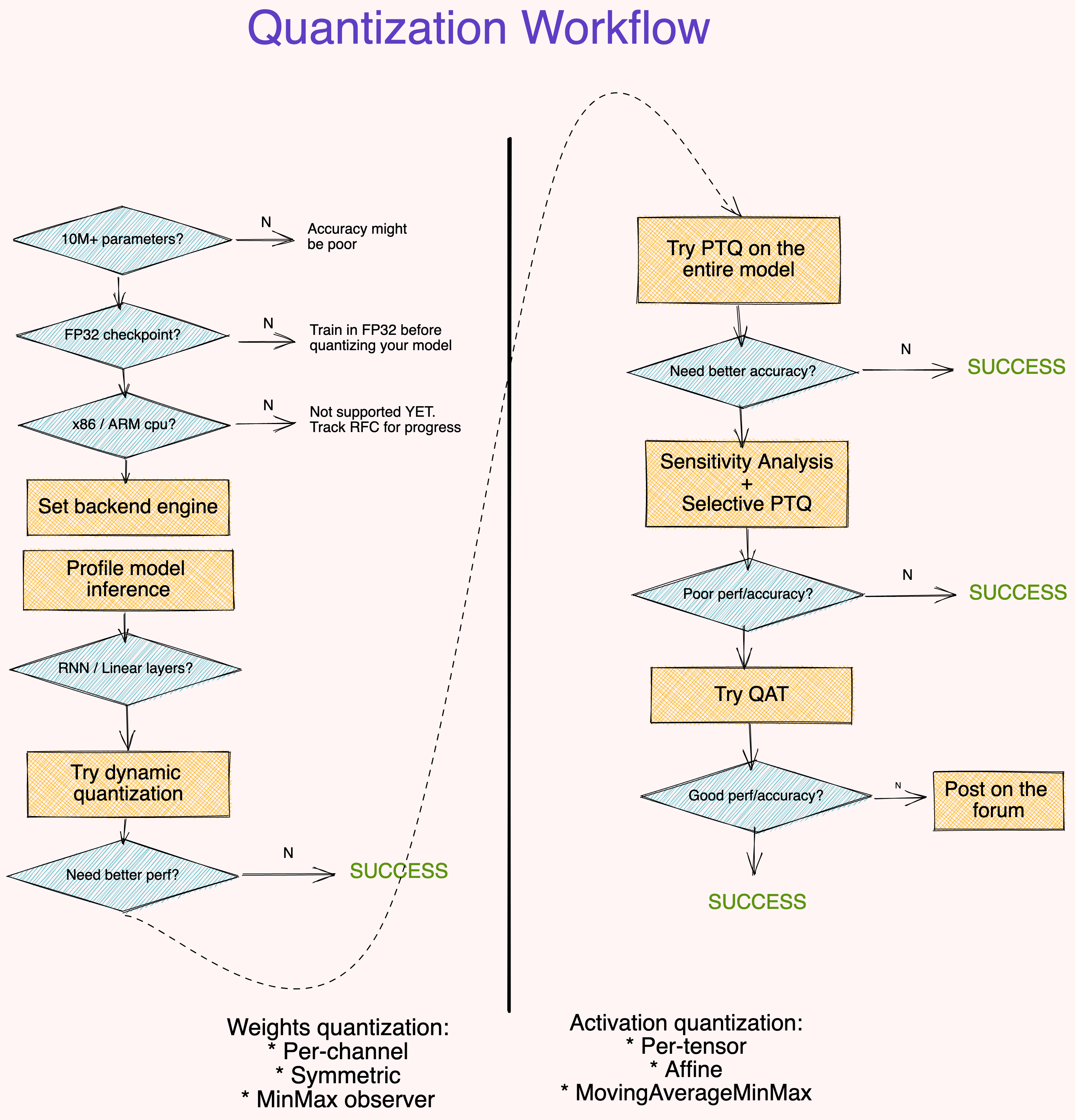

The PyTorch developers have written an excellent blog post on quantization strategies [4]. It provides example code for the available strategies, along with methods for analyzing the models. It also recommends a workflow, shown below:

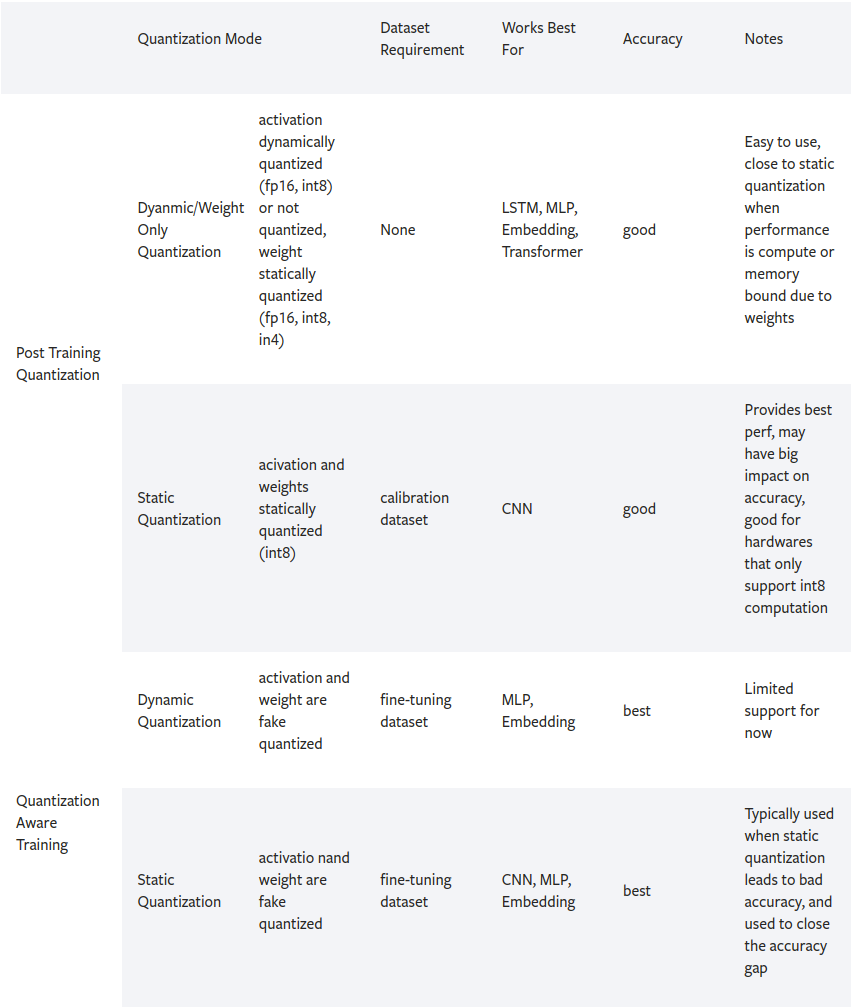

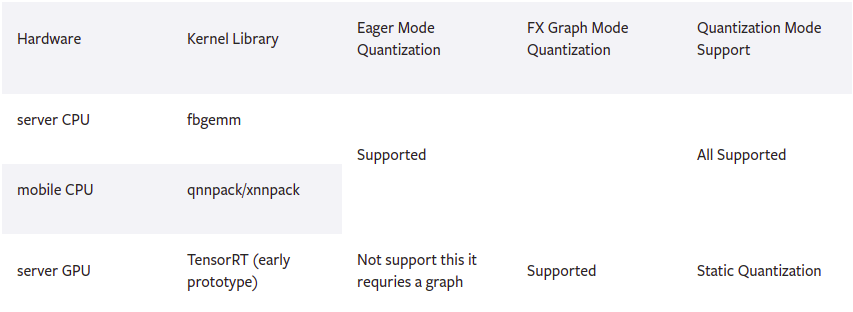

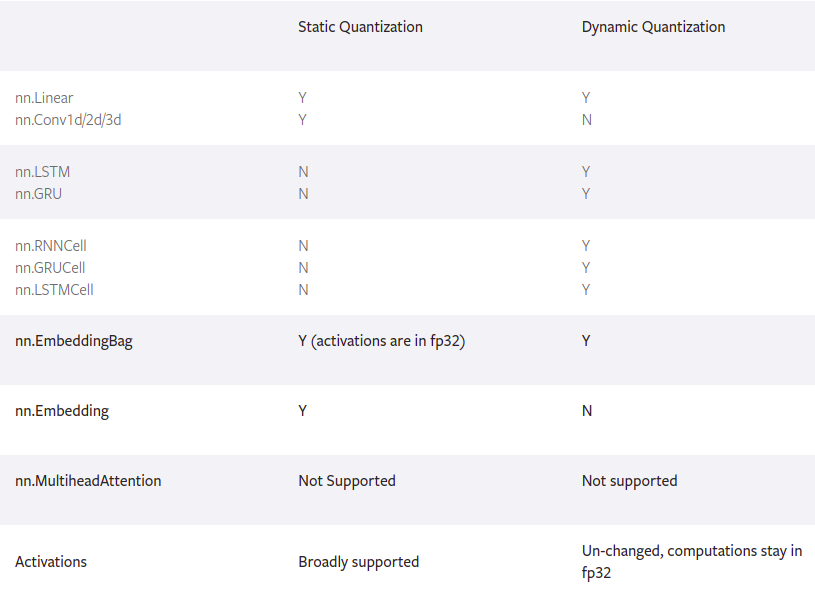
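The strategies covered in the blog all build on the same affine mapping, where a float value is represented by an integer plus a scale and a zero-point. The sketch below is a framework-free illustration of per-tensor affine quantization, assuming a signed int8 range of [-128, 127]; the helper names `quantize_params`, `quantize` and `dequantize` are hypothetical and not part of PyTorch.

```python
def quantize_params(x_min: float, x_max: float, qmin: int = -128, qmax: int = 127):
    """Compute scale and zero-point for per-tensor affine (asymmetric) quantization."""
    # Widen the range to include 0.0 so that zero is exactly representable.
    x_min = min(x_min, 0.0)
    x_max = max(x_max, 0.0)
    scale = (x_max - x_min) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # degenerate case: an all-zero tensor
    zero_point = qmin - round(x_min / scale)
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to clamped integers: q = round(x / scale) + zero_point."""
    return [max(qmin, min(qmax, round(v / scale) + zero_point)) for v in values]


def dequantize(q_values, scale, zero_point):
    """Map integers back to approximate floats: x = (q - zero_point) * scale."""
    return [(q - zero_point) * scale for q in q_values]


weights = [-1.0, 0.0, 0.5, 2.0]
scale, zero_point = quantize_params(min(weights), max(weights))
q = quantize(weights, scale, zero_point)
print("scale:", scale, "zero_point:", zero_point)
print("quantized:", q)
print("dequantized:", dequantize(q, scale, zero_point))
```

For values inside the observed range, the reconstruction error is at most half a quantization step (`scale / 2`), which is why the choice of range (and hence of calibration data) matters so much in the workflow above.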

The official PyTorch quantization documentation [5] presents additional important aspects to consider:

- Quantization Mode Support

- Backend/Hardware Support

- Operator Support
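Regarding backend/hardware support, PyTorch runs quantized kernels through a backend engine; the 1.12 documentation lists `fbgemm` for x86 and `qnnpack` for ARM, and newer releases also expose `x86`. The following minimal sketch checks which engines a given build supports and selects one. The helper `select_quantized_engine` and its preference order are hypothetical choices for illustration.

```python
import torch


def select_quantized_engine(preferred=("x86", "fbgemm", "qnnpack")):
    """Select the first preferred quantized engine compiled into this PyTorch build."""
    available = torch.backends.quantized.supported_engines
    for engine in preferred:
        if engine in available:
            torch.backends.quantized.engine = engine
            return engine
    # None of the preferred engines exist: keep PyTorch's default choice.
    return torch.backends.quantized.engine


print("available engines:", torch.backends.quantized.supported_engines)
print("selected engine:", select_quantized_engine())
```

The engine should match the deployment hardware, since a model quantized and benchmarked with one engine may behave differently in speed (and slightly in numerics) on another.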

I have implemented basic benchmarking, the dataset setup, and the dynamic range quantization technique. For saving and loading the models, I used TorchScript [6], a way to create serializable and optimizable models from PyTorch code. Any TorchScript program can be saved from a Python process and loaded in a process that has no Python dependency. The implementation is available in the code repository mentioned above.
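The sketch below illustrates dynamic range quantization combined with TorchScript on a small model; it is not the project's own code. It applies `torch.ao.quantization.quantize_dynamic` to the `nn.Linear` layers, converts the result to TorchScript with `torch.jit.trace`, and round-trips it through `torch.jit.save`/`torch.jit.load` before a rough size and latency comparison. Several details are assumptions: `TinyPilotNet` is a hypothetical stand-in rather than the trained network, the 66x200 input size and layer widths are illustrative, `serialized_size` and `mean_latency_ms` are hypothetical helpers, and PyTorch 1.10 or later is assumed for the `torch.ao` namespace.

```python
import io
import time

import torch
import torch.nn as nn


class TinyPilotNet(nn.Module):
    """Hypothetical stand-in for a steering model: conv features + fully connected head."""

    def __init__(self):
        super().__init__()
        self.features = nn.Sequential(
            nn.Conv2d(3, 8, kernel_size=5, stride=2), nn.ReLU(),
            nn.Conv2d(8, 16, kernel_size=5, stride=2), nn.ReLU(),
            nn.AdaptiveAvgPool2d((4, 4)),
        )
        self.head = nn.Sequential(
            nn.Flatten(),
            nn.Linear(16 * 4 * 4, 128), nn.ReLU(),
            nn.Linear(128, 2),  # two outputs, e.g. steering and throttle (assumed)
        )

    def forward(self, x):
        return self.head(self.features(x))


def serialized_size(module: torch.jit.ScriptModule) -> int:
    """Size in bytes of a TorchScript archive, written to memory instead of disk."""
    buffer = io.BytesIO()
    torch.jit.save(module, buffer)
    return buffer.getbuffer().nbytes


def mean_latency_ms(module, example: torch.Tensor, runs: int = 20) -> float:
    """Average CPU inference time per call in milliseconds, after one warm-up run."""
    with torch.no_grad():
        module(example)  # warm-up
        start = time.perf_counter()
        for _ in range(runs):
            module(example)
    return (time.perf_counter() - start) * 1000.0 / runs


torch.manual_seed(0)
model = TinyPilotNet().eval()
example = torch.rand(1, 3, 66, 200)  # assumed input: (batch, channels, height, width)

# Dynamic range quantization: Linear weights are converted to int8 ahead of time,
# activations are quantized on the fly at inference. Conv2d layers stay in float.
quantized = torch.ao.quantization.quantize_dynamic(model, {nn.Linear}, dtype=torch.qint8)

# Convert both variants to TorchScript by tracing them with an example input.
scripted_fp32 = torch.jit.trace(model, example)
scripted_int8 = torch.jit.trace(quantized, example)

# Save and reload; torch.jit.load does not need the TinyPilotNet Python class.
buffer = io.BytesIO()
torch.jit.save(scripted_int8, buffer)
buffer.seek(0)
loaded_int8 = torch.jit.load(buffer)

for name, module in [("fp32", scripted_fp32), ("dynamic int8", loaded_int8)]:
    print(f"{name}: {serialized_size(module) / 1024:.1f} KiB, "
          f"{mean_latency_ms(module, example):.3f} ms/inference")
```

In eager mode, dynamic quantization only covers `nn.Linear` and recurrent layers, so a convolution-heavy network keeps most of its compute in float. Size savings may therefore be larger than speed-ups; static quantization is the mode that also covers convolutions.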

Simulation with Montreal

I encountered an error while recording stats and created Issue #392 for it; solving it is my next target. In the meeting, I learned what causes the issue: the solution is to change the PerfectLap argument in the config file. The available options are in the BehaviorMetrics/behavior_metrics/perfect_bags/ directory.

References

[1] https://github.com/JdeRobot/BehaviorMetrics

[2] https://github.com/JdeRobot/DeepLearningStudio

[3] https://developer.nvidia.com/tensorrt

[4] https://pytorch.org/blog/quantization-in-practice/

[5] https://pytorch.org/docs/1.12/quantization.html

[6] https://pytorch.org/docs/stable/jit.html