Week 3: June 12 ~ June 18

Preliminaries

On Monday, we reviewed the performance of the model we trained in Week 2. It surpassed our initial expectations: it followed the correct lane, stopped for vehicles ahead, and resumed driving once the path was clear. However, it also occasionally stopped unnecessarily or collided with other vehicles.

While the model passed most of our test scenarios, two significant limitations stood out. First, the model could only navigate straight routes without intersections. Second, the model was tested and evaluated exclusively in Town01, the same map it was trained on.

In Week 3, we aim to overcome these limitations. Our objective this week is to turn the model into a more versatile lane follower, one that can navigate diverse routes, including routes with turns. We also plan to start evaluating our models in an unseen environment: testing on a new map shows how well the model generalizes to novel scenarios.

Objectives

- Experiment with removing irrelevant classes from the segmentation and RGB images

- Experiment with affine transformations in addition to noise injection

- Try routes with turns and intersections

- Add more obstacles such as pedestrians

- Explore which types of vehicles are present in the dataset

- Develop evaluation metrics

Execution

CARLA Agent API → Traffic Manager

Previously, we used CARLA’s Behavior Agent to generate expert demonstrations for our imitation learning algorithm. The first problem we had to deal with was that the Behavior Agent could not reliably make turns without running into obstacles such as sidewalks or street light poles. Several issues on CARLA’s GitHub repository report similar problems with the Behavior Agent, and the general advice from the community is to use the server-side autopilot, which is coordinated by the Traffic Manager, instead of the Agent API. The Traffic Manager offers more reliable and robust driving behavior, which makes it a better choice for our data collection. Our first step this week was therefore to re-implement the data collection tool so that the Traffic Manager controls the ego-vehicle during data collection.
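For illustration, a minimal sketch of handing the ego-vehicle to the Traffic Manager and reading back the expert’s control as the training label at each tick. It assumes a running CARLA server, an already spawned ego `vehicle`, and the default Traffic Manager port 8000; the helper names `attach_to_traffic_manager` and `expert_action` are hypothetical and not part of our tool or of CARLA. The functions only call documented `carla.Client`, `carla.TrafficManager`, and `carla.Vehicle` methods, so the block itself does not need to import `carla`.

```python
def attach_to_traffic_manager(client, vehicle, tm_port=8000, synchronous=True):
    """Hand control of `vehicle` (a carla.Vehicle) to the server-side autopilot.

    `client` is a carla.Client. Returns the carla.TrafficManager so that
    per-vehicle behavior can be configured afterwards.
    """
    tm = client.get_trafficmanager(tm_port)
    # For frame-accurate data collection the TM should tick in lockstep with
    # the world (the world must be switched to synchronous mode separately).
    tm.set_synchronous_mode(synchronous)
    # Register the ego-vehicle with this TM instance: from now on the server
    # computes its throttle, steer, and brake every simulation step.
    vehicle.set_autopilot(True, tm.get_port())
    return tm


def expert_action(vehicle):
    """Read the control the autopilot applied on the last tick (the label)."""
    control = vehicle.get_control()
    return {
        "steer": float(control.steer),
        "throttle": float(control.throttle),
        "brake": float(control.brake),
    }
```

In a collection loop one would call `world.tick()`, save the camera frames for that tick, and store `expert_action(vehicle)` next to them.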

Turning Decisions

Fortunately, since CARLA 0.9.13 the Traffic Manager supports setting routes and paths for individual vehicles. This makes it easy to simplify turning decisions by instructing the agent to always turn right at intersections. We deliberately selected test routes on which the ego-vehicle reaches its target destination by turning right at every intersection. This way we can focus on teaching the agent how to make turns without needing a complex global navigator.
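A minimal sketch of the “always turn right” setup, assuming `tm` is a `carla.TrafficManager` (CARLA 0.9.13 or later) and `vehicle` is already registered with it. `TrafficManager.set_route` takes a list of turn instructions such as `"Left"`, `"Right"`, and `"Straight"`, which the TM follows at the junctions it encounters; the helper names below and the choice of one instruction per intersection are our own assumptions for illustration.

```python
def build_right_turn_route(num_intersections):
    """One 'Right' instruction per intersection on the chosen test route."""
    if num_intersections < 0:
        raise ValueError("num_intersections must be non-negative")
    return ["Right"] * num_intersections


def always_turn_right(tm, vehicle, num_intersections):
    """Tell the Traffic Manager to turn `vehicle` right at every junction."""
    route = build_right_turn_route(num_intersections)
    tm.set_route(vehicle, route)
    return route
```

If exact waypoints are needed instead of turn instructions, `TrafficManager.set_path` accepts a list of `carla.Location` objects.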

Traffic Light

After switching to the Traffic Manager’s server-side autopilot and training models on the new data, we found that the trained agent tended to halt indefinitely in front of traffic lights regardless of their state. To address this, we adopted a simple workaround following Codevilla et al. [1]: during data collection, we instructed the autopilot expert to ignore traffic lights altogether, so it never halts at red lights. Other vehicles on the map, however, still obey traffic rules, including waiting for the lights to turn green before proceeding. As a result, if a line of vehicles is queued ahead of our expert at an intersection, the expert must wait for those vehicles to start moving before it can proceed safely. This workaround lets us collect data effectively while the expert still takes the actions of surrounding vehicles into account, so it navigates intersections smoothly.
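A minimal sketch of this per-vehicle configuration, assuming `tm` is a `carla.TrafficManager` and `vehicle` is the ego-vehicle registered with it. The `ignore_*_percentage` setters apply only to the given actor, so NPC traffic keeps obeying lights. Ignoring signs as well mirrors the “ignore traffic lights and signs” setting of v5.2 and v5.3; the function name is hypothetical.

```python
def configure_expert_ignoring_lights(tm, vehicle, ignore_signs=True):
    """Let the TM expert drive through red lights (and optionally signs).

    Only `vehicle` is affected; every other TM-controlled car still obeys
    traffic lights and signs.
    """
    tm.ignore_lights_percentage(vehicle, 100.0)
    if ignore_signs:
        tm.ignore_signs_percentage(vehicle, 100.0)
    # Keep collision avoidance fully enabled so the expert still queues
    # behind vehicles (and yields to pedestrians) waiting at an intersection.
    tm.ignore_vehicles_percentage(vehicle, 0.0)
    tm.ignore_walkers_percentage(vehicle, 0.0)
```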

Affine Transformation

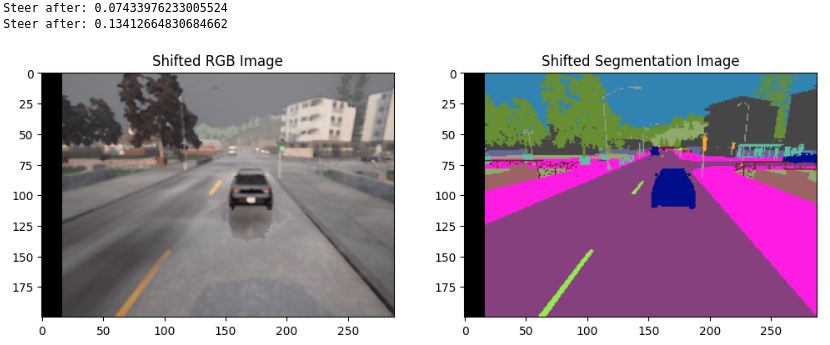

Besides noise injection during data collection, as described in the previous week’s post, we experimented with applying an affine transformation to images during training. The idea is to shift the images slightly to the left or right, simulating real-world scenarios where the vehicle is displaced laterally on the road. We also adjust the recorded steering angle in the direction opposite to the image shift, so that the model learns to compensate for the lateral displacement and return to a consistent trajectory.
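A minimal NumPy sketch of the simplest affine case, a pure horizontal translation with a linear steering correction. The convention is an assumption: a positive `offset_px` simulates the camera sitting to the right of its recorded position, so scene content moves left in the frame and the corrected label steers left (negative in CARLA, where positive steer means right), opposite to the shift. The `gain` (steer units per pixel) is a hypothetical value that would need tuning, and the exposed border is zero-filled.

```python
import numpy as np


def lateral_shift(image, offset_px, steer, gain=0.005, max_steer=1.0):
    """Translate `image` horizontally and correct the steering label.

    Works for H x W (segmentation) and H x W x C (RGB) arrays.
    offset_px > 0: viewpoint moved right -> content moves left -> steer left.
    """
    w = image.shape[1]
    if abs(offset_px) >= w:
        raise ValueError("offset must be smaller than the image width")
    shifted = np.zeros_like(image)
    if offset_px > 0:
        shifted[:, : w - offset_px] = image[:, offset_px:]
    elif offset_px < 0:
        shifted[:, -offset_px:] = image[:, : w + offset_px]
    else:
        shifted[:] = image
    # Correct the label opposite to the simulated displacement, then clip to
    # CARLA's valid steering range [-1, 1].
    new_steer = float(np.clip(steer - gain * offset_px, -max_steer, max_steer))
    return shifted, new_steer
```

During training, `offset_px` would typically be drawn at random per sample, and the same offset must be applied to the RGB and segmentation images of that frame so they stay aligned.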

Class Filtering

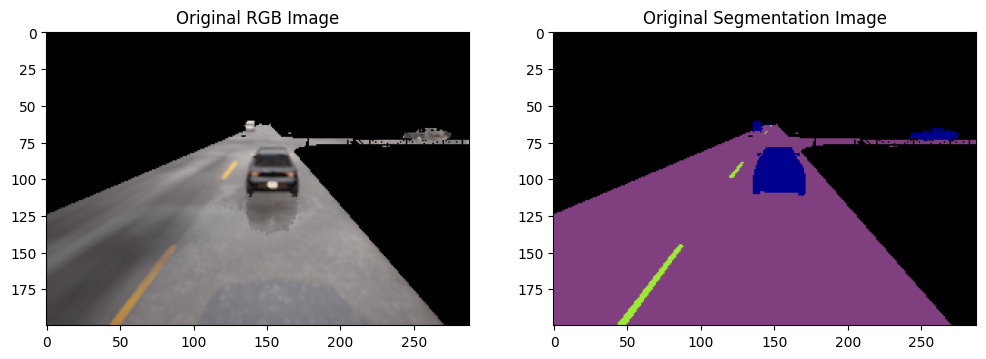

We also experimented with masking out everything in the RGB and segmentation images except vehicles, pedestrians, roads, and road lines. The image above shows one example (ignore the word “Original” in the titles).
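A minimal NumPy sketch of this class filter, assuming a semantic segmentation camera that is pixel-aligned with the RGB camera and whose per-pixel tags have already been extracted (in CARLA the tag is stored in the red channel of the raw segmentation image). The tag values below are assumed from the CARLA 0.9.13 sensor documentation; later releases changed the numbering, so they should be checked against the version in use.

```python
import numpy as np

# Assumed CARLA 0.9.13 semantic tags (verify for your CARLA version).
PEDESTRIAN, ROAD_LINE, ROAD, VEHICLES = 4, 6, 7, 10
KEEP_TAGS = (PEDESTRIAN, ROAD_LINE, ROAD, VEHICLES)


def filter_classes(rgb, tags, keep=KEEP_TAGS):
    """Zero out every pixel whose semantic tag is not in `keep`.

    rgb:  H x W x 3 image from the RGB camera.
    tags: H x W integer tag map from the aligned segmentation camera.
    Returns the masked RGB image and tag map (dropped pixels set to 0).
    """
    mask = np.isin(tags, keep)
    rgb_out = np.where(mask[..., None], rgb, 0).astype(rgb.dtype)
    tags_out = np.where(mask, tags, 0).astype(tags.dtype)
    return rgb_out, tags_out
```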

Models

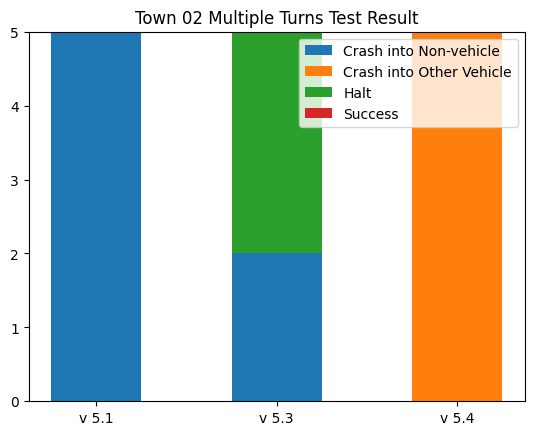

We trained and compared three models:

- v5.1: follow traffic lights and signs, noise injection (as described in the previous week’s post)

- v5.2: ignore traffic lights and signs, noise injection

- v5.3: ignore traffic lights and signs, noise injection + affine transformation + class filter

Evaluation

We evaluated the three models in Town02, a map unseen by the models during training, and compared their performance. There are 5 test routes, each containing multiple intersections where the agent needs to make a right turn. The table below shows the mean and standard deviation of the distance traveled before collision in failed runs.

| Model | Mean distance before collision (m) | SD (m) |
|---|---|---|
| v5.1 | 45.60 | 50.75 |
| v5.3 | 135.05 | 72.16 |
| v5.4 | 166.7 | 123.21 |
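A minimal sketch of how such a metric can be computed, assuming the ego-vehicle’s (x, y) position is logged every tick (for example from `vehicle.get_location()`) and each run is cut at the first collision event (for example from a `sensor.other.collision` sensor). The helper names are hypothetical, and whether the table above uses the sample or the population standard deviation is not stated; this sketch uses the sample version.

```python
import math
import statistics


def distance_traveled(positions):
    """Path length (m) of a sequence of (x, y) positions sampled each tick."""
    return sum(math.dist(a, b) for a, b in zip(positions, positions[1:]))


def summarize(distances):
    """Mean and sample standard deviation of per-run distances before collision."""
    if len(distances) < 2:
        raise ValueError("need at least two failed runs for a standard deviation")
    return statistics.mean(distances), statistics.stdev(distances)
```

For instance, a run logged at `[(0, 0), (3, 4), (3, 10)]` covers 5 m + 6 m = 11 m.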

Demo

References

[1] Felipe Codevilla, Matthias Müller, Antonio López, Vladlen Koltun, & Alexey Dosovitskiy. (2018). End-to-end Driving via Conditional Imitation Learning.
