Coding Week 6: 7/01-7/07

Weekly Meeting

Our follow-up meeting on June 26 continued to address critical issues. I led a discussion on unbalanced datasets and implemented simple and complex instructions. We explored using a BERT model for instruction classification and agreed on the importance of splitting the dataset into training and testing sets to obtain reliable metrics. A key outcome was removing duplicated lines from the datasets using iterative generation techniques. We plan to integrate the BERT model with the refined dataset and incorporate it into the overall framework to establish our first baseline. Sergio proposed two approaches: using Qi’s model for action decoding and, as a further step, moving towards the LMDrive model.

More details can be found here: Google Doc

Sample Route

The sample_route function randomly selects a route from the provided episode_configs file. Let’s break down this function step by step to understand how the route is generated and what it contains.

First, let’s look at the code for the sample_route function:

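    import logging
    import random

    def sample_route(world, episode_configs):
        # All spawn points defined on the current map
        spawn_points = world.get_map().get_spawn_points()
        # Pick one episode configuration at random
        episode_config = random.choice(episode_configs)
        # episode_config[0] holds the (start, end) spawn-point indices
        start_point = spawn_points[episode_config[0][0]]
        end_point = spawn_points[episode_config[0][1]]
        logging.info(f"from spawn point #{episode_config[0][0]} to #{episode_config[0][1]}")
        # episode_config[1] is the route length, episode_config[2] the list of high-level commands
        route_length = episode_config[1]
        route = episode_config[2].copy()
        return episode_config, start_point, end_point, route_length, route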

The given episode_configs file contains multiple lines of data, each representing a route and its associated high-level commands. Each line follows this format:

<Start Index> <End Index> <Route Length> <High-Level Command 1> <High-Level Command 2>

For example, the first line of data is:

24 79 158 Right Right

This means:

- Start Index: 24

- End Index: 79

- Route Length: 158

- High-Level Commands: Right, Right
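
To make the format concrete, here is a minimal sketch of how one line could be parsed into the nested structure that sample_route indexes (episode_config[0][0], episode_config[0][1], episode_config[1], episode_config[2]). The helper name parse_episode_line is hypothetical, and the tuple layout is inferred from that indexing rather than taken from the project's actual loader.

```python
def parse_episode_line(line):
    """Parse one config line such as '24 79 158 Right Right'.

    Hypothetical helper: the real project may load the file differently.
    """
    fields = line.split()
    # The first three fields are integers: start index, end index, route length
    start_idx, end_idx, route_length = (int(x) for x in fields[:3])
    # Everything after that is a high-level command
    commands = fields[3:]
    return (start_idx, end_idx), route_length, commands


config = parse_episode_line("24 79 158 Right Right")
print(config)  # ((24, 79), 158, ['Right', 'Right'])
```

With this layout, config[0] gives the spawn-point indices, config[1] the route length, and config[2] the command list that sample_route copies into route.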

Contents of the route

The route contains a series of high-level commands, which are the vehicle’s turning instructions at intersections along the route. For example, the route value might be ['Right', 'Right'], indicating that the vehicle should turn right at the first intersection and right again at the second intersection.

In the subsequent simulation process, these return values will be used to initialize the vehicle’s position, determine its travel route, and guide the vehicle’s turns at intersections through high-level commands.

Why Use Imitation Learning?

In the paper “End-to-end Driving via Conditional Imitation Learning,” the authors address the limitations and challenges of imitation learning for autonomous urban driving. The primary motivation for this work is to overcome the inherent difficulties in mapping perceptual inputs directly to control commands, especially in complex driving scenarios.

Imitation learning aims to train models by mimicking human actions, which, in theory, should enable the model to perform tasks much as a human would. However, this approach faces several significant challenges:

Optimal Action Inference:

The assumption that the optimal action (a_t) can be inferred solely from the perceptual input (o_t) is often flawed. As the paper highlights, this is particularly problematic when a car approaches an intersection: the camera input alone is insufficient to predict whether the car should turn left, turn right, or go straight. This scenario illustrates that additional context, in the form of commands (c_t), is necessary for accurate decision-making.

Function Approximation Difficulties:

The mapping from image to control command is not always a straightforward function. This complexity is exacerbated in real-world environments where multiple plausible actions can exist for a given situation. For instance, as mentioned in the paper, when a network reaches a fork, it may output two widely discrepant travel directions, one for each possible choice. This results in oscillations and instability in the dictated travel direction.

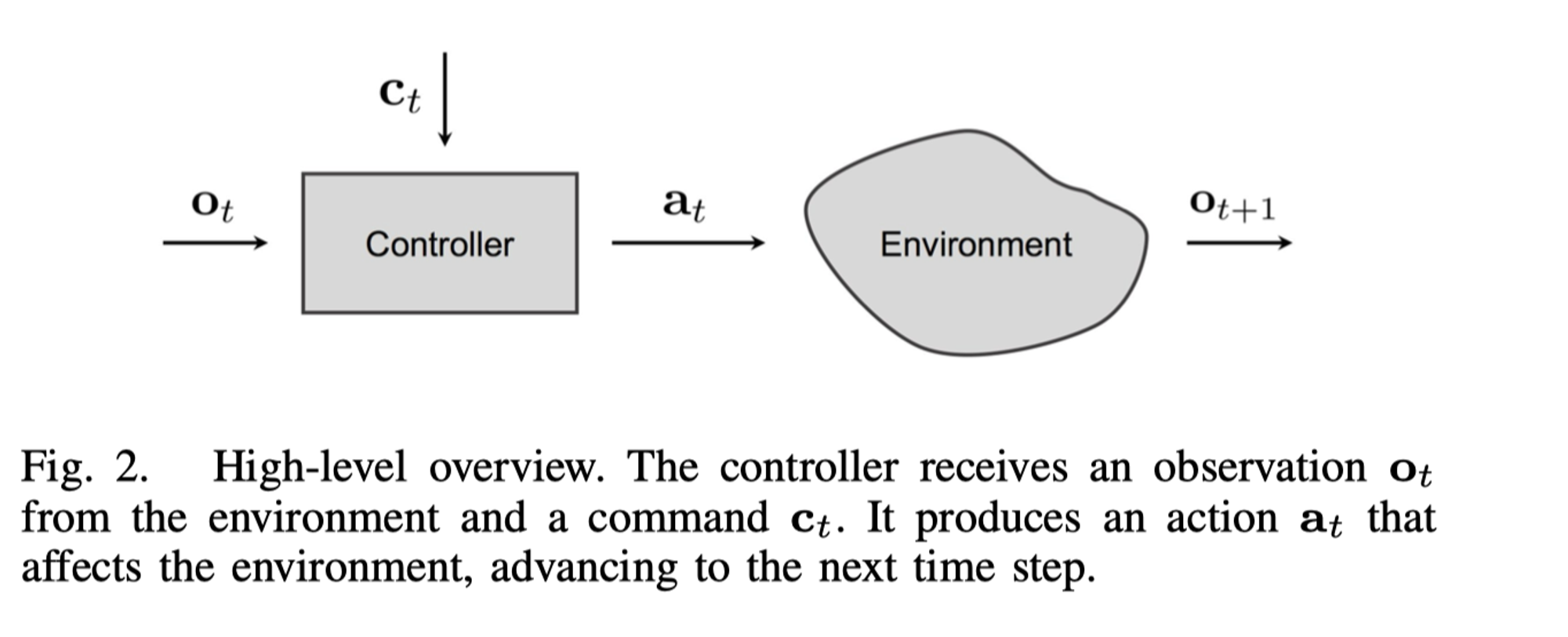

The figure above gives a high-level overview of the interaction between the controller and the environment:

- Observation (o_t): The controller receives observations from the environment, which are the sensory inputs, such as images from cameras.

- Command (c_t): The controller also receives high-level commands that provide context, such as “turn left” or “go straight.”

- Action (a_t): Based on the observation and command, the controller produces an action that affects the environment.

- Next Observation (o_{t+1}): The action results in a change in the environment, leading to the next observation.

The figure underscores the necessity of combining both perceptual inputs and high-level commands to generate appropriate actions.
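
For reference, the difference between standard and command-conditional imitation learning can be written as two training objectives, restated here from the paper. F is the controller with parameters \theta, \ell is a per-sample loss, and the sums run over recorded demonstration samples; the only change is that the controller also receives the command c_i as input.

```latex
\begin{align}
\text{standard:} \quad
  & \min_{\theta} \sum_i \ell\big(F(o_i;\, \theta),\; a_i\big) \\
\text{command-conditional:} \quad
  & \min_{\theta} \sum_i \ell\big(F(o_i, c_i;\, \theta),\; a_i\big)
\end{align}
```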

HighLevelCommandLoader

The HighLevelCommandLoader is designed to provide turn-by-turn instructions for a vehicle navigating through intersections. These instructions can either be predefined or selected randomly based on real-time intersection detection.

Example Configuration

24 79 158 Right Right

77 1 166 Left Left

32 75 174 Right Straight

76 33 193 Straight Left

52 59 148 Left Right

69 51 136 Left Right

40 69 272 Straight Left

59 39 245 Right Straight

60 25 255 Straight Right

23 61 269 Left Straight

82 17 155 Right Left

18 83 140 Right Left

Route Initialization:

When initializing HighLevelCommandLoader, the route is provided:

hlc_loader = HighLevelCommandLoader(vehicle, world.get_map(), route)

Fetching High-Level Commands:

In each simulation step, the next high-level command is fetched:

hlc = hlc_loader.get_next_hlc()

Loading Next High-Level Command:

If the vehicle is at an intersection and there is a predefined route, load_next_hlc loads the next command:

hlc = self._command_to_int(self.route.pop(0))

If there is no predefined route, get_random_hlc randomly selects a command.
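
The loading logic described above can be sketched without a simulator. This is an illustration under stated assumptions, not the project's HighLevelCommandLoader: the class name SimpleCommandLoader is hypothetical, the intersection check is replaced by an at_intersection flag passed in by the caller, and falling back to a random command once the route is exhausted is my assumption.

```python
import random


class SimpleCommandLoader:
    """Hypothetical, simulator-free stand-in for HighLevelCommandLoader."""

    COMMANDS = {"Left": 1, "Right": 2, "Straight": 3}

    def __init__(self, route=None, rng=None):
        # Copy so that popping commands does not modify the caller's list
        self.route = list(route) if route else []
        self.rng = rng or random.Random()

    def load_next_hlc(self, at_intersection):
        # Outside intersections there is no new command to load
        if not at_intersection:
            return None
        if self.route:
            # Predefined route: consume the commands in order
            return self.COMMANDS[self.route.pop(0)]
        # No predefined command left (assumption): pick a random one
        return self.rng.choice(list(self.COMMANDS.values()))


loader = SimpleCommandLoader(["Right", "Straight"])
print(loader.load_next_hlc(at_intersection=False))  # None
print(loader.load_next_hlc(at_intersection=True))   # 2
print(loader.load_next_hlc(at_intersection=True))   # 3
```

Passing the intersection state as a flag keeps the sketch testable on its own; the real loader takes the vehicle and map and presumably detects intersections itself.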

Command Mapping

Commands guide the vehicle’s behavior at intersections, ensuring it navigates according to the defined or random directions.

    def _command_to_int(self, command):
        commands = {
            'Left': 1,
            'Right': 2,
            'Straight': 3
        }
        return commands[command]

Integration with BERT Model

- Input Example: "Make a left turn at the upcoming junction."

- Tokenization:

  - The command is split into tokens and converted into a tensor of token IDs.

- Prediction:

  - The token IDs are passed through the BERT model.

  - The model outputs logits for each possible action class.

Modified Command-to-Integer Mapping with BERT

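    import torch

    def _command_to_int(self, command):
        # Tokenize the natural-language command and move the tensors to the model's device
        inputs = self.tokenizer(command, return_tensors="pt").to(self.device)
        # Inference only, so gradient tracking is disabled
        with torch.no_grad():
            outputs = self.model(**inputs)
        predicted_class = torch.argmax(outputs.logits, dim=1).item()
        # Map predicted_class to corresponding actions
        command_mapping = {
            0: 1,  # Left
            1: 2,  # Right
            2: 3   # Straight
        }
        # Fall back to 0 if the predicted class is not in the mapping
        return command_mapping.get(predicted_class, 0)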
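
The final argmax-and-map step can be tried without loading a model. The sketch below uses plain Python and made-up logits in place of real BERT output; the function names are hypothetical, and the class-to-command mapping is the one from the snippet above.

```python
import math

# Class index -> command integer: Left, Right, Straight
COMMAND_MAPPING = {0: 1, 1: 2, 2: 3}


def softmax(logits):
    # Subtract the maximum for numerical stability before exponentiating
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def logits_to_hlc(logits):
    # Index of the largest logit, i.e. argmax over a single row of logits
    predicted_class = max(range(len(logits)), key=lambda i: logits[i])
    # Unknown classes fall back to 0, as in the mapping code
    return COMMAND_MAPPING.get(predicted_class, 0)


example_logits = [2.5, -0.3, 0.1]  # made-up values, not real model output
print(logits_to_hlc(example_logits))  # 1, i.e. Left
print([round(p, 3) for p in softmax(example_logits)])  # class probabilities
```

The softmax is only there to show how confident a prediction is; the argmax of the raw logits already determines the command.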

Example Flow

- Input: "Make a left turn at the upcoming junction."

- Processing:

  - Tokenization:

    - Split the command into tokens.

    - Convert tokens into tensor IDs.

  - Prediction:

    - Pass tensor IDs through the BERT model.

    - Obtain logits for action classes.

- Actions:

  - Mapping:

    - Map predicted action class to predefined actions: Left (1), Right (2), Straight (3).

Data Mapping Example

Input Data:

24 79 158 Right Right

77 1 166 Left Left

32 75 174 Right Straight

76 33 193 Straight Left

52 59 148 Left Right

69 51 136 Left Right

40 69 272 Straight Left

59 39 245 Right Straight

60 25 255 Straight Right

23 61 269 Left Straight

82 17 155 Right Left

18 83 140 Right Left

Mapped Commands:

24 79 158 "Make a right turn at the upcoming junction." "Make a right turn at the upcoming junction."

77 1 166 "Make a left turn at the upcoming junction." "Make a left turn at the upcoming junction."

32 75 174 "Make a right turn at the upcoming junction." "Continue straight at the upcoming junction."

76 33 193 "Continue straight at the upcoming junction." "Make a left turn at the upcoming junction."

52 59 148 "Make a left turn at the upcoming junction." "Make a right turn at the upcoming junction."

69 51 136 "Make a left turn at the upcoming junction." "Make a right turn at the upcoming junction."

40 69 272 "Continue straight at the upcoming junction." "Make a left turn at the upcoming junction."

59 39 245 "Make a right turn at the upcoming junction." "Continue straight at the upcoming junction."

60 25 255 "Continue straight at the upcoming junction." "Make a right turn at the upcoming junction."

23 61 269 "Make a left turn at the upcoming junction." "Continue straight at the upcoming junction."

82 17 155 "Make a right turn at the upcoming junction." "Make a left turn at the upcoming junction."

18 83 140 "Make a right turn at the upcoming junction." "Make a left turn at the upcoming junction."
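
The mapping from the input data to the templated sentences above can be reproduced with a small lookup table. This is a minimal sketch: the names TEMPLATES and map_line are hypothetical, and the sentence templates are copied from the mapped commands listed above.

```python
# One fixed sentence per high-level command
TEMPLATES = {
    "Left": "Make a left turn at the upcoming junction.",
    "Right": "Make a right turn at the upcoming junction.",
    "Straight": "Continue straight at the upcoming junction.",
}


def map_line(line):
    fields = line.split()
    # First three fields: start index, end index, route length
    indices, commands = fields[:3], fields[3:]
    # Replace each command with its quoted template sentence
    sentences = [f'"{TEMPLATES[c]}"' for c in commands]
    return " ".join(indices + sentences)


print(map_line("32 75 174 Right Straight"))
# 32 75 174 "Make a right turn at the upcoming junction." "Continue straight at the upcoming junction."
```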

Annotated Example:

24 79 158 "Make a right turn at the upcoming stop sign." "Merge onto the highway and take the second right exit."

77 1 166 "Make a left turn at the second stop sign." "Take the left turn onto Main Street at the upcoming intersection."

32 75 174 "Make a sharp right turn onto Maple Street." "Continue straight on the highway for the next 10 miles."

76 33 193 "Continue straight on the highway for the next 10 miles." "Make a left turn at the upcoming stop sign."

52 59 148 "Make a left turn at the upcoming roundabout." "Make a right turn at the stop sign ahead."

69 51 136 "Make a left turn at the upcoming stop sign." "Make a right turn onto Main Street after the gas station."

40 69 272 "Continue straight on the highway for the next 10 miles." "Make a left turn at the upcoming roundabout."

59 39 245 "Merge onto the highway and take the next right exit." "Continue straight on the highway for the next 10 miles."

60 25 255 "Continue driving straight on this road for two more miles." "Make a right turn onto Maple Street after the stop sign."

23 61 269 "Make a left turn at the stop sign ahead." "Continue straight on the highway for the next 5 miles."

82 17 155 "Make a right turn onto Main Street after passing the gas station on your left." "Make a left turn at the upcoming stop sign."

18 83 140 "Make a right turn onto Elm Street after the bridge." "Make a left turn at the stop sign ahead."

Next Steps

- Retrain the BERT Model

- Install the Simulator

- Integrate and Test with the Simulator