Coding Week 8: 7/15-7/21

Weekly Meeting

During this week’s meeting, the team discussed several key updates and future plans. Sergio and Apoorv reminded me to provide weekly updates on the project blog to track progress and evaluation. I recapped the data generation process, highlighting issues with high duplication and the implementation of strategies like dynamic batch size and the iterative method, which improved speed by 28%. However, scalability issues were noted. I also discussed the high-level command design for the demo, simulator installation challenges, and the retraining of the BERT model, which achieved 100% accuracy with 300 samples. Environmental issues were addressed, but data collection and iteration interruptions remained problematic. In the open floor discussion, the team explored future directions, such as using world models or simulators to improve data efficiency and considering external datasets. Sergio outlined the next steps, including integrating the new BERT model into the pipeline, publishing code and results, and potentially developing a Streamlit demo app.

More details can be found here: Google Doc

Setting Up CARLA with a Graphical Interface

Because we have been developing CARLA within Docker, setting up a graphical interface is essential for better visualization and simulation. This week, I attempted to establish this environment, initially assuming it would be relatively straightforward. However, this task proved to be quite time-consuming.

CARLA Basic Hardware Requirements

Before diving into the setup process, it is crucial to understand the basic hardware requirements for running CARLA smoothly:

- CPU: Intel i5-8600k or higher

- GPU: NVIDIA GeForce GTX 1080 or equivalent

- RAM: 16 GB

- Storage: SSD with at least 50 GB of free space

- Operating System: Ubuntu 18.04/20.04 or Windows 10/Server 2019

Initially, I planned to set up CARLA on headless servers. Here’s a summary of the challenges faced:

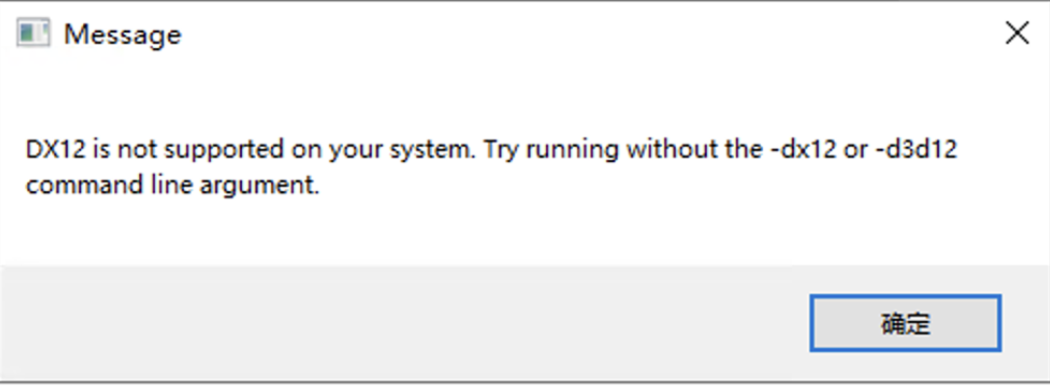

Windows Server 2019: I expected the setup on a Windows Server 2019 system to be straightforward. Instead, it produced persistent errors, as shown in the accompanying image, most likely because of compatibility issues with this older Windows version.

Ubuntu Server: Another attempt was made on an Ubuntu server. Unfortunately, due to being on a university’s internal network, port restrictions prevented the use of VNC for desktop access. This limitation made it challenging to run CARLA with a graphical interface.

Given these challenges, the only viable option was to run CARLA on a physical desktop machine. This approach allowed me to bypass the issues encountered with headless servers and internal network restrictions. Finally, here is a video of the trained model running on CARLA:

Model Training and Optimization

Over the past week, we made several improvements to the model’s performance and flexibility:

- Training with a New Dataset: The model was retrained using a new and more comprehensive dataset, which led to an improvement in prediction accuracy. The retraining process resulted in the model achieving nearly 100% accuracy, demonstrating the effectiveness of the new data.

- Model Size Optimization: To optimize performance and resource usage, different versions of the model were tested, including bert_model, distilbert_model, and tinybert_model. Each version showed significant differences in terms of speed and memory consumption. Despite these differences, all versions maintained a high level of accuracy. This experimentation highlights the potential for deploying smaller, more efficient models without compromising on performance.

- Code Refactoring: The codebase underwent extensive refactoring to improve maintainability. The model-related code was updated to fully accept parameters. Additionally, new testing interfaces were implemented, which enable both single instruction inputs and file-based inputs. These interfaces facilitate more testing and validation of the model under various scenarios.

These improvements enhance the model’s robustness, efficiency, and adaptability.

-rw-r--r-- 1 zebin staff 418M Jul 24 06:59 bert_model.pt

-rw-r--r-- 1 zebin staff 255M Jul 24 06:38 distilbert_model.pt

-rw-r--r-- 1 zebin staff 55M Jul 24 07:14 tinybert_model.pt
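To make the size comparison above easier to repeat, here is a minimal sketch that lists checkpoint sizes and expresses each one relative to the largest file. The function names and the `models` directory are hypothetical and only for illustration; this is not the project's actual tooling.

```python
from pathlib import Path


def checkpoint_sizes(model_dir, pattern="*.pt"):
    """Return {file name: size in MiB} for checkpoints matching pattern."""
    sizes = {}
    for path in sorted(Path(model_dir).glob(pattern)):
        sizes[path.name] = path.stat().st_size / (1024 * 1024)
    return sizes


def relative_sizes(sizes):
    """Express each size as a fraction of the largest checkpoint."""
    if not sizes:
        return {}
    largest = max(sizes.values())
    if largest == 0:
        # All files are empty; avoid dividing by zero.
        return {name: 0.0 for name in sizes}
    return {name: size / largest for name, size in sizes.items()}


# "models" is a hypothetical directory holding the .pt files.
sizes = checkpoint_sizes("models")
for name, fraction in relative_sizes(sizes).items():
    print(f"{name}: {sizes[name]:.0f} MiB ({fraction:.0%} of largest)")
```

Using the sizes in the listing above, distilbert_model.pt is roughly 61% of bert_model.pt (255M / 418M) and tinybert_model.pt is roughly 13% (55M / 418M).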

Model Integration

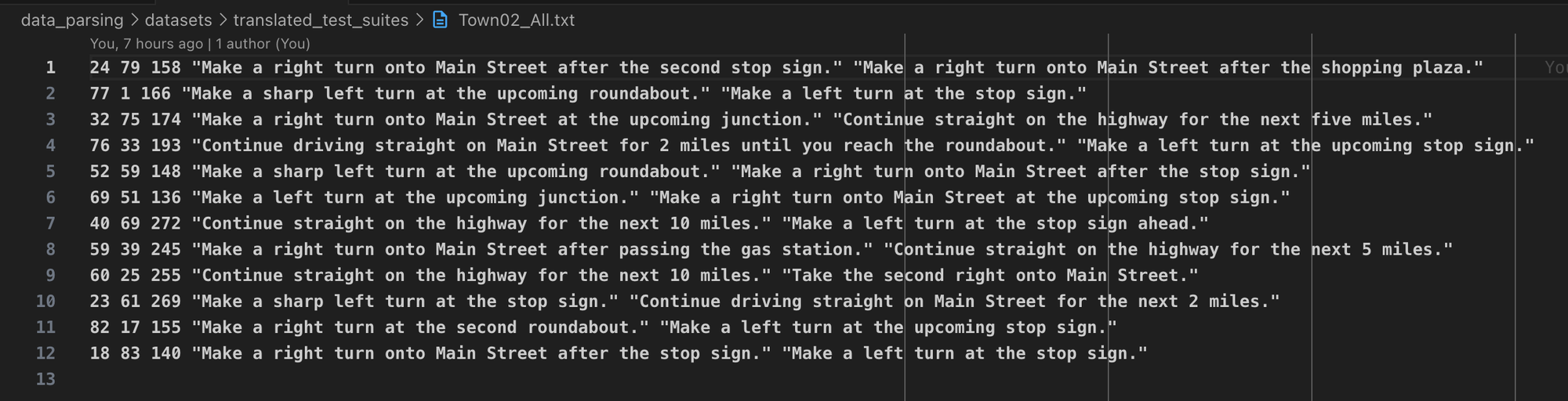

To independently verify that the LLM language module is functioning correctly, a config_translator was created. This tool uses the GPT interface to generate instructions based on some predefined actions found in the test_suites. Below are the generation results for Town02_All.txt.

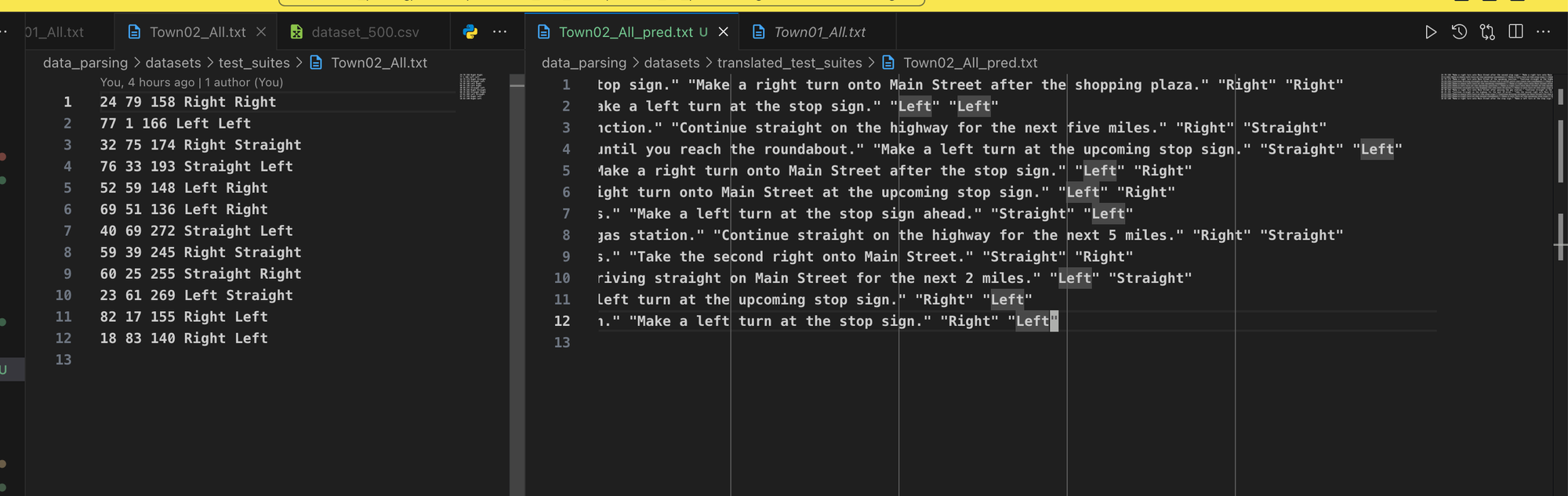

Two online testing methods for the model were established. One method involves testing through a single instruction, while the other involves testing through a configuration data file. Below are the model prediction results for Town02_All.txt (the last two actions on the right are model predictions). As you can see, the results are consistent.
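The two testing modes described above could be exposed through a single command-line entry point. The sketch below is only an illustration under assumptions: `predict` is a placeholder for the real model call, the `--instruction` and `--file` flags are hypothetical names, and the file format (one instruction per line) is assumed rather than taken from the project.

```python
import argparse
from pathlib import Path


def predict(instruction):
    """Placeholder for the real model call; returns a dummy action label."""
    return "UNKNOWN"


def read_instructions(path):
    """Read one instruction per line, skipping blank lines (assumed format)."""
    text = Path(path).read_text(encoding="utf-8")
    return [line.strip() for line in text.splitlines() if line.strip()]


def build_parser():
    parser = argparse.ArgumentParser(description="Test the instruction model.")
    # Exactly one of the two input modes must be given.
    group = parser.add_mutually_exclusive_group(required=True)
    group.add_argument("--instruction", help="a single instruction to classify")
    group.add_argument("--file", help="text file with one instruction per line")
    return parser


def run(argv, predict_fn=predict):
    """Return (instruction, prediction) pairs for either input mode."""
    args = build_parser().parse_args(argv)
    if args.instruction is not None:
        instructions = [args.instruction]
    else:
        instructions = read_instructions(args.file)
    return [(text, predict_fn(text)) for text in instructions]


# Single-instruction mode, passing the arguments explicitly.
for text, action in run(["--instruction", "Turn left at the next intersection"]):
    print(f"{text} -> {action}")
```

Passing `predict_fn` as a parameter keeps the entry point independent of any particular model, so the same code path can be exercised with bert_model, distilbert_model, or tinybert_model.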

Code Refactoring

Recent efforts in code refactoring have focused on improving robustness, flexibility, and maintainability. Here are the key improvements:

- Exception Handling: We have implemented exception handling across the codebase to manage potential errors more effectively. This includes:

  - Invalid Configuration Formats: Ensuring that the system gracefully handles incorrect or malformed configuration files.

  - Invalid Actions: Adding checks to handle scenarios where actions specified are not recognized or are outside the expected range.

  - Non-existent Directories: Implementing safeguards to manage cases where required directories are missing.

- Parameterization: All scripts have been fully parameterized, enhancing their flexibility and ease of use. Parameterization allows users to adjust script behavior without modifying the underlying code.

- Configuration File and Utilities: To further improve code maintainability, we have introduced an independent configuration file and a set of utility functions:

  - Configuration File: Centralizes all configuration settings, making it easier to manage and update system-wide settings in one place. This separation of configuration from code simplifies adjustments and reduces the risk of errors.

  - Utility Functions (utils): A dedicated set of utility functions has been created to handle common tasks and operations. Common operations are abstracted into these utility functions, which can be easily called from different parts of the project.
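As a rough illustration of the three error cases listed above, the sketch below validates a configuration file and prepares a directory. Everything in it is an assumption made for the example: the JSON format, the `actions` key, the `ALLOWED_ACTIONS` set, and the names `ConfigError`, `load_config`, and `ensure_dir` are hypothetical and do not come from the project's code.

```python
import json
from pathlib import Path

# Hypothetical action vocabulary; the real set is defined by the project.
ALLOWED_ACTIONS = {"Straight", "LaneFollow", "TurnLeft", "TurnRight"}


class ConfigError(ValueError):
    """Raised when a configuration file cannot be used."""


def load_config(path, allowed_actions=ALLOWED_ACTIONS):
    """Load a JSON config and check its format and its actions."""
    config_path = Path(path)
    if not config_path.is_file():
        raise ConfigError(f"config file not found: {config_path}")
    try:
        config = json.loads(config_path.read_text(encoding="utf-8"))
    except json.JSONDecodeError as exc:
        # Malformed file: report it as a configuration problem.
        raise ConfigError(f"malformed config {config_path}: {exc}") from exc
    if not isinstance(config, dict):
        raise ConfigError("config must be a JSON object")
    actions = config.get("actions")
    if not isinstance(actions, list):
        raise ConfigError("config must contain an 'actions' list")
    unknown = [
        a for a in actions
        if not isinstance(a, str) or a not in allowed_actions
    ]
    if unknown:
        raise ConfigError(f"unrecognized actions: {unknown}")
    return config


def ensure_dir(path):
    """Create the directory (and parents) if missing, then return it."""
    directory = Path(path)
    directory.mkdir(parents=True, exist_ok=True)
    return directory
```

Raising one dedicated exception type lets calling scripts catch configuration problems in a single place and print a readable message instead of a traceback. Creating a missing directory is only one possible safeguard; failing with a clear error would be an equally valid choice.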