Coding Week 14: 8/26-9/08

This week, we focused on exploring several potential research directions to build an “Ideas List”, a collection of candidate research concepts. As we evaluate each idea against the project goals, we weigh technical feasibility against anticipated resource needs and practical challenges.

The list covers recent advancements, integrations with LLMs, and potential research gaps in current work. During our review, we evaluated each idea on several key factors to identify those that promise impact while remaining feasible given our technical and resource constraints. This post outlines the main points from our discussions, along with actionable insights and next steps.

Update

In addition to our research review and ideas list development, the following updates were made:

- PRs Merged into Main Branch: Two pull requests, PR#3 and PR#5, were merged into the main branch. PR#3 focused on running the model in the CARLA simulator for testing and validation, while PR#5 addressed the web-based app built with the Streamlit framework and packaged for online deployment.

- Social Media Posts: Posts featuring the latest video demonstration were published on LinkedIn and Twitter. (See LinkedIn post)

- Documentation Update: The app documentation was revised to clearly distinguish between the development version and the deployable web version.

Resource Availability

We examined the viability of implementing each idea from both technical and resource-based perspectives, considering our current toolset and any additional resources an idea may require. Access to high-performance computing resources has emerged as a critical consideration, as the computational demands of advanced LLM projects currently exceed our available resources. We are therefore exploring alternatives such as gaming GPUs like the RTX 4060.

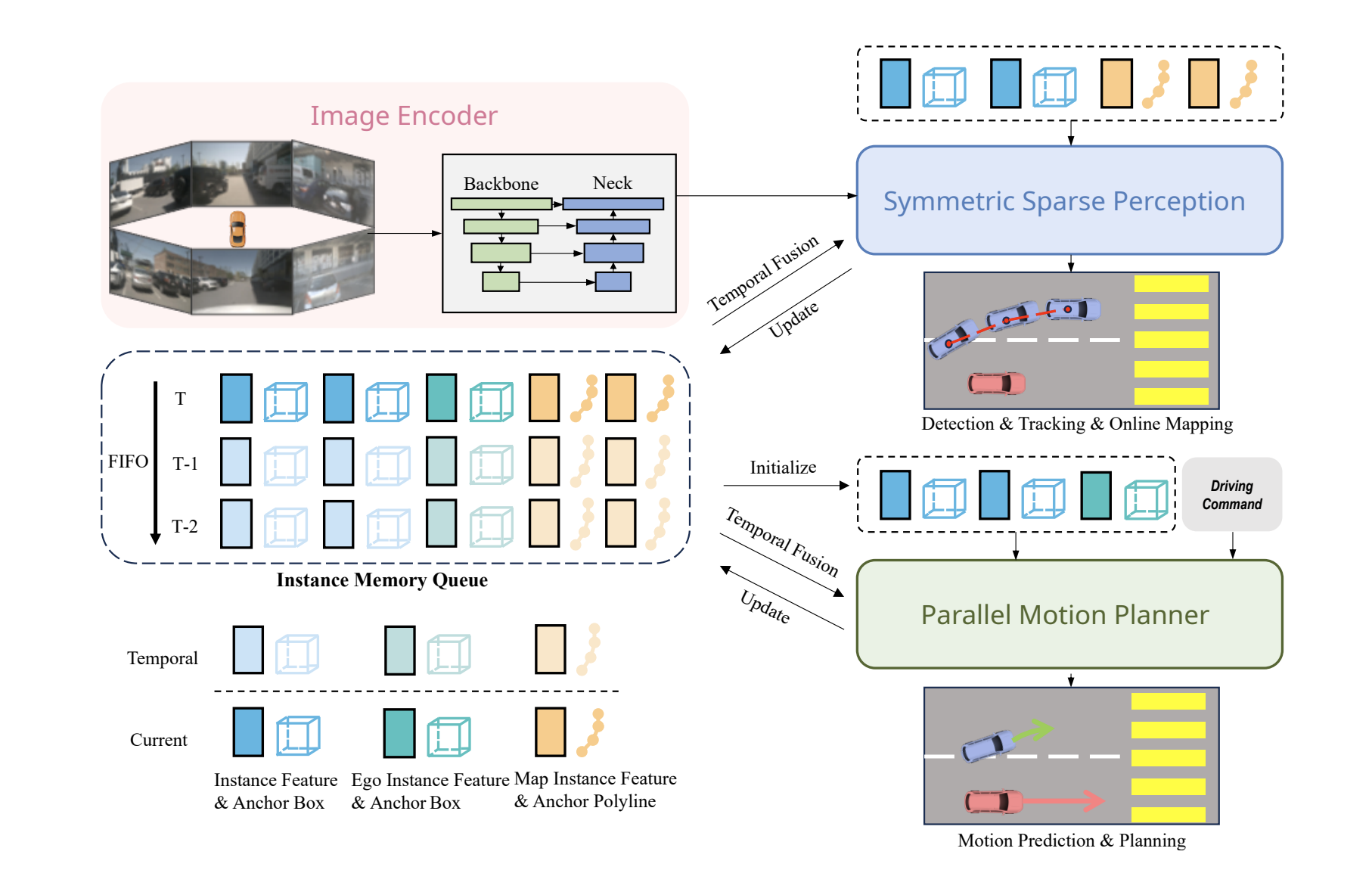

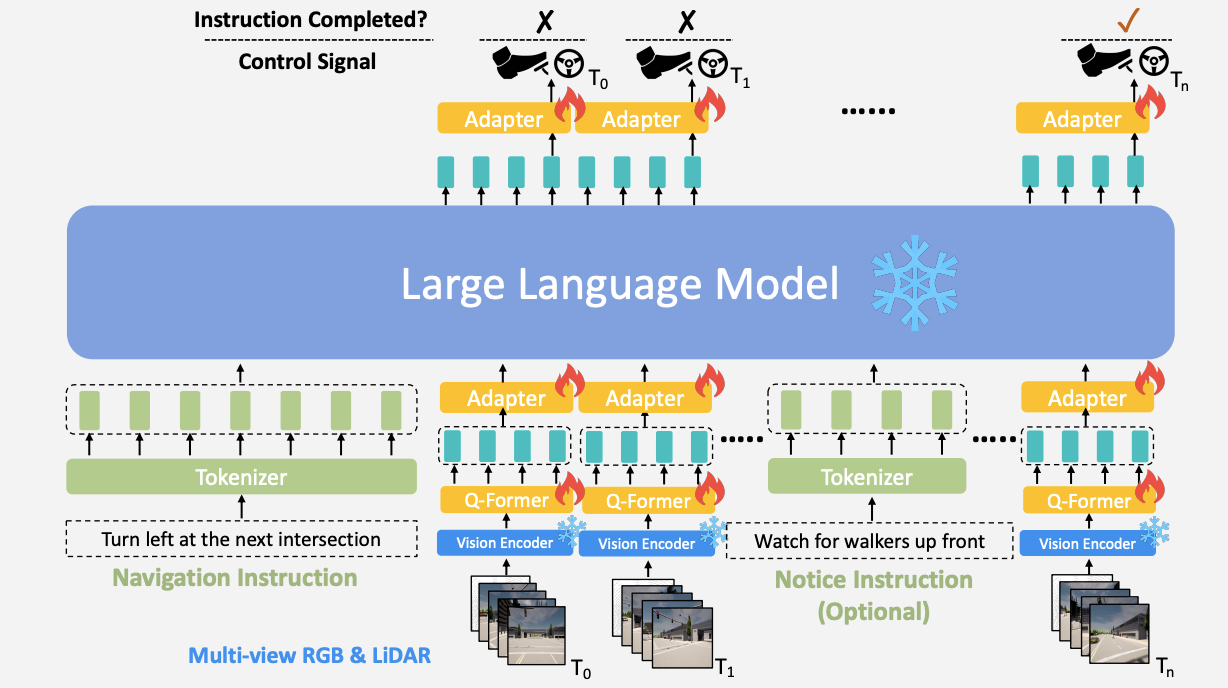

In autonomous driving model design, the choice of architecture significantly affects GPU resource utilization. We use SparseDrive and LMDrive as examples to illustrate these trade-offs.

The SparseDrive model achieves computational efficiency through a sparse representation framework that minimizes reliance on dense bird’s-eye view (BEV) features, reducing resource consumption, particularly in multi-GPU setups.
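To make the dense-versus-sparse argument concrete, the sketch below compares the memory needed to hold one dense BEV feature map with that of a fixed set of sparse instance queries. The grid size, channel count, and query count are hypothetical values chosen for illustration, not SparseDrive's actual configuration, and the comparison covers only this intermediate scene representation rather than the full model.

```python
# Illustrative sizes only (assumed); not SparseDrive's real configuration.
BEV_H, BEV_W = 200, 200   # dense BEV grid resolution (assumed)
CHANNELS = 256            # feature dimension per grid cell / per query (assumed)
NUM_QUERIES = 900         # number of sparse instance queries (assumed)
BYTES_PER_FLOAT = 4       # fp32 storage


def dense_bev_bytes(h: int, w: int, c: int, bytes_per: int = BYTES_PER_FLOAT) -> int:
    """Memory for one dense BEV feature map of shape (h, w, c)."""
    return h * w * c * bytes_per


def sparse_query_bytes(n: int, c: int, bytes_per: int = BYTES_PER_FLOAT) -> int:
    """Memory for n sparse instance features, each of dimension c."""
    return n * c * bytes_per


dense = dense_bev_bytes(BEV_H, BEV_W, CHANNELS)
sparse = sparse_query_bytes(NUM_QUERIES, CHANNELS)

print(f"dense BEV:      {dense / 2**20:.2f} MiB")
print(f"sparse queries: {sparse / 2**20:.2f} MiB")
print(f"ratio:          {dense / sparse:.1f}x")
```

With these assumed sizes it prints about 39.06 MiB for the dense map versus 0.88 MiB for the queries, roughly a 44x difference. The underlying point is that dense memory grows with grid area (H × W), while sparse memory grows only with the number of queries.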

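In contrast, LMDrive, which couples closed-loop driving with a large language model, is considerably more resource-intensive.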

Training LMDrive takes approximately 4-6 days on 8 A100 GPUs (80 GB each) and consists of two stages, vision encoder pre-training and instruction fine-tuning, as outlined in the project's documentation. LMDrive’s large parameter count, coupled with the need for real-time closed-loop processing, places a substantial load on GPU memory; in return, it achieves robust language-guided navigation and adaptive control.
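A rough calculation helps show why this setup is hard to reproduce on a gaming GPU. The sketch converts the reported 4-6 days on 8 A100 (80 GB) GPUs into GPU-hours and compares memory with the 8 GB of VRAM on a desktop RTX 4060. The 7B-parameter backbone and fp16 storage are assumptions for illustration, and the estimate counts model weights only, ignoring activations, gradients, and optimizer state, which add substantially more during training.

```python
# Reported training setup (from the LMDrive documentation summary above).
A100_COUNT = 8
A100_MEM_GB = 80
TRAIN_DAYS = (4, 6)          # reported range, in days
RTX_4060_MEM_GB = 8          # VRAM of a desktop RTX 4060

# Assumption for illustration: a 7B-parameter language backbone in fp16.
ASSUMED_PARAMS = 7e9
BYTES_PER_PARAM_FP16 = 2


def gpu_hours(num_gpus: int, days: float) -> float:
    """Total GPU-hours for num_gpus running continuously for the given days."""
    return num_gpus * days * 24


def weights_gb(params: float, bytes_per_param: int) -> float:
    """Memory (decimal GB) needed just to store the model weights."""
    return params * bytes_per_param / 1e9


low = gpu_hours(A100_COUNT, TRAIN_DAYS[0])
high = gpu_hours(A100_COUNT, TRAIN_DAYS[1])
# Aggregate memory across GPUs; this is not a single addressable pool.
pooled_mem = A100_COUNT * A100_MEM_GB
weights = weights_gb(ASSUMED_PARAMS, BYTES_PER_PARAM_FP16)

print(f"A100 GPU-hours: {low}-{high}")
print(f"aggregate A100 memory: {pooled_mem} GB vs. RTX 4060: {RTX_4060_MEM_GB} GB")
print(f"fp16 weights alone: {weights:.0f} GB, fits on RTX 4060: {weights <= RTX_4060_MEM_GB}")
```

The reported range works out to 768-1152 A100 GPU-hours, and under the stated assumption the fp16 weights alone (about 14 GB) already exceed a single RTX 4060, before any training overhead is counted.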

Action Items

To carry forward the selected ideas, we outlined specific action items. These steps are critical to ensuring that our top-priority ideas move steadily through the development pipeline. The key tasks include:

- Idea Selection and Planning: Publishing the complete ideas list from this meeting, and refining it in further discussions, will allow us to finalize one or two core ideas for the team to focus on developing.

- Feasibility Research: Comprehensive research into the technical feasibility of the selected ideas will help us identify the specific tools, frameworks, and methodologies required for successful implementation.