Coding Weeks 15 & 16 (9/09-9/22)

This week, we focused on exploring new ideas for enhancing autonomous vehicle capabilities with LLMs and natural language instructions. We aimed to address current limitations and expand on innovative interaction methods between passengers and self-driving systems. We plan two next steps:

- Idea Selection and Planning: Sharing the complete list of ideas from this meeting and subsequent discussions will allow us to identify and finalize one or two core directions.

- Feasibility Research: We will also conduct feasibility studies on the selected ideas to explore technical challenges and identify the necessary tools, frameworks, and methodologies. This research will be crucial in ensuring that the chosen solutions are practically implementable for future development.

Below is a summary of the primary ideas explored.

Driving with Natural Language

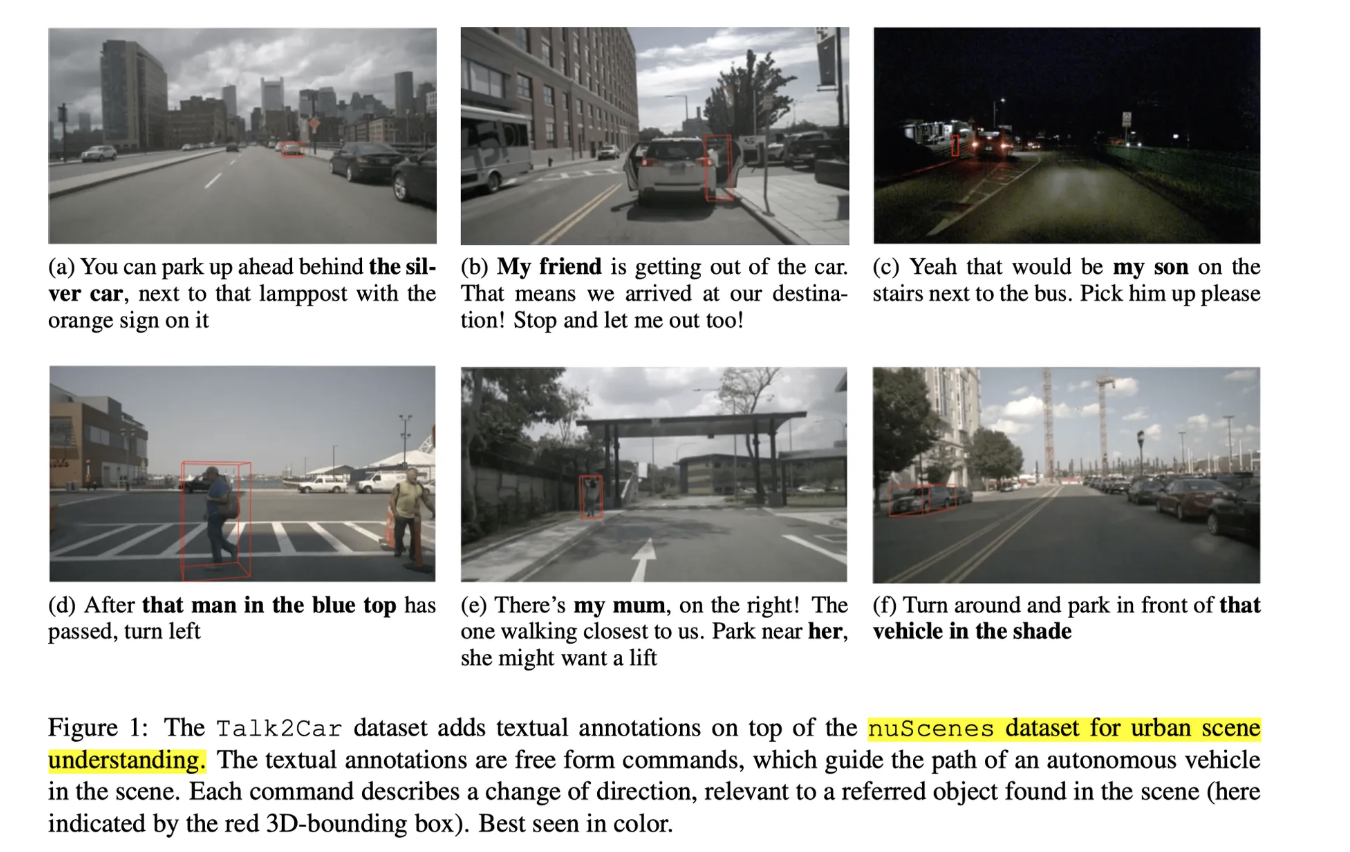

Talk2Car: Taking Control of Your Self-Driving Car

We began by exploring potential limitations from the early stages of the work.

- Motivation: Autonomous vehicles may hesitate in some complex traffic scenarios, especially when roads are congested or special situations arise. If passengers can provide suggestions or instructions through natural language commands, such as asking the vehicle to stop or wait, it can help the vehicle make quicker decisions.

- Limited to Object Reference Tasks: The core problem addressed in the article is mapping the passenger’s natural language commands to specific objects in the visual scene. This “object reference” task mainly aims to enable autonomous vehicles to identify the specific objects mentioned in passenger instructions, without involving direct vehicle control or route planning. Therefore, the article focuses solely on “recognizing reference objects”.

- No Real Control: Although the article discusses the motivation for allowing passengers to interact with autonomous vehicles through natural language, the current research is limited to identifying the objects that passengers refer to in natural language; it does not actually execute the instructions (e.g., stopping, turning, etc.).

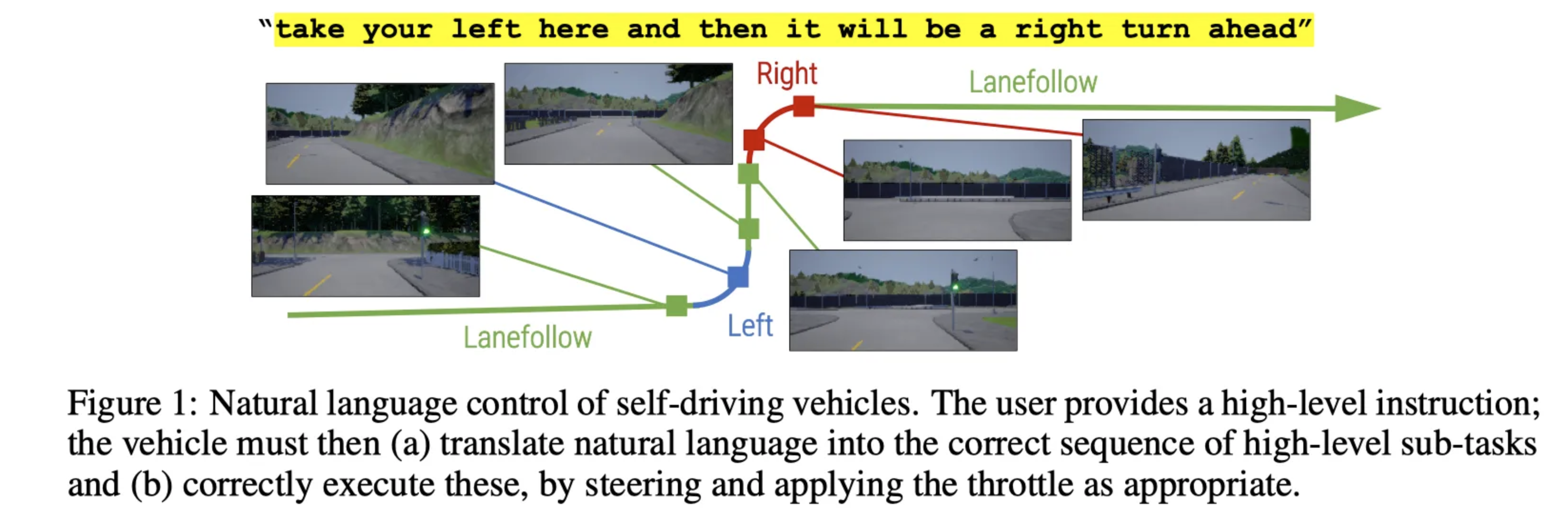

Conditional Driving from Natural Language Instructions

Previous work primarily focuses on path planning and navigation systems. However, as human-machine interaction evolves, we realize that human expectations for autonomous driving systems extend beyond simple navigation commands to a more “coaching” role in interactions. In such interactions, autonomous driving systems need not only basic path planning capabilities but also the ability to understand complex, multi-turn instructions, demonstrate keen environmental insight, and effectively handle edge or hazardous scenarios. This motivation, drawn from the paper, has driven the proposal and exploration of the following research directions.

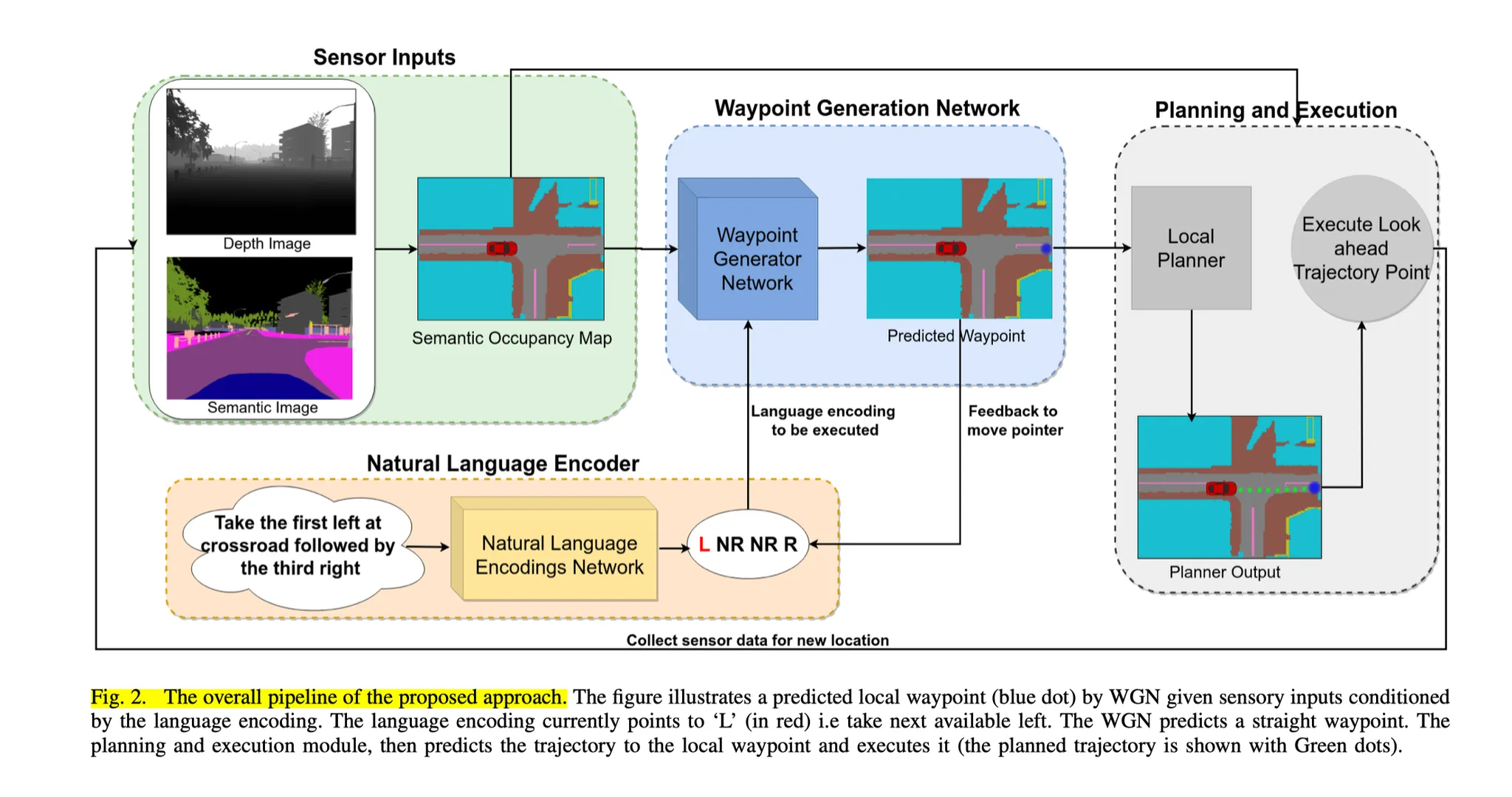

Talk to the Vehicle: Language-Conditioned Autonomous Navigation of Self-Driving Cars

The motivation for the article is based on the idea that in many complex road scenarios, traditional autonomous driving technology relies on pre-generated detailed maps and precise positioning systems. When these conditions are not met, such as when maps are inaccurate or GPS delays occur, vehicles may fail to navigate correctly.

Human navigation relies not on precise map data but on a semantic understanding of language and the current environment. By adopting the same approach, autonomous vehicles can navigate through semantic understanding and natural language instructions without relying on detailed offline maps.

By introducing natural language instructions, vehicles can complete navigation tasks without accurate maps or positioning information. This reduces the dependence on external maps and GPS accuracy. This would make autonomous driving technology more robust and adaptable, especially in uncontrollable outdoor environments.

Therefore, the research motivation is to explore how to enhance the navigation capabilities of autonomous vehicles through natural language instructions. This approach can not only improve the efficiency of autonomous driving but also allow autonomous vehicles to integrate more naturally into human driving behaviors, especially in the face of GPS positioning errors or the absence of pre-built maps.

The primary inspiration for this paper is that, in real life, human drivers can easily complete navigation tasks with simple language instructions (such as “turn right at the traffic light, then turn left at the second intersection”) even without precise maps.

The framework proposed in the article has three core modules:

- Natural Language Encoder (NLE): Transforms natural language instructions into high-level machine-readable encodings.

- Waypoint Generation Network (WGN): Combines local semantic structure with language encoding to predict local waypoints.

- Local Planner: Generates obstacle-avoidance trajectories based on the predicted waypoints, which are executed by the low-level controller.

By combining natural language with visual and semantic maps, vehicles can generate waypoints adapted to the current environment, which avoids dependence on detailed maps and precise positioning. Each time local waypoints are generated, the WGN considers the language instructions and the vehicle’s current environmental information.
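The data flow between the three modules can be sketched as a small pipeline. This is only a minimal illustration under strong assumptions, not the paper's implementation: the function names (`encode_instruction`, `generate_waypoint`, `plan_trajectory`, `follow`), the keyword-based encoding, the fixed step size, and the straight-line trajectory are all hypothetical stand-ins for the learned encoder, the learned waypoint network, and a real obstacle-avoiding planner.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Heading = Tuple[int, int]  # unit grid direction, e.g. (0, 1) means "north"

# Hypothetical command vocabulary; the real NLE is a learned encoder.
COMMANDS = ("left", "right", "straight")


def encode_instruction(instruction: str) -> List[str]:
    """Stand-in for the NLE: reduce free text to a list of high-level commands."""
    words = instruction.lower().replace(",", " ").split()
    return [w for w in words if w in COMMANDS]


def generate_waypoint(position: Point, heading: Heading, command: str,
                      step: float = 10.0) -> Tuple[Point, Heading]:
    """Stand-in for the WGN: predict the next local waypoint from one command.

    A real WGN would also condition on the local semantic map; here the
    'environment' is reduced to the current position and heading.
    """
    dx, dy = heading
    if command == "left":      # rotate heading 90 degrees counter-clockwise
        dx, dy = -dy, dx
    elif command == "right":   # rotate heading 90 degrees clockwise
        dx, dy = dy, -dx
    waypoint = (position[0] + step * dx, position[1] + step * dy)
    return waypoint, (dx, dy)


def plan_trajectory(start: Point, goal: Point, n_points: int = 5) -> List[Point]:
    """Stand-in for the local planner: straight-line interpolation only.

    No obstacle avoidance is performed in this sketch.
    """
    return [(start[0] + (goal[0] - start[0]) * i / n_points,
             start[1] + (goal[1] - start[1]) * i / n_points)
            for i in range(1, n_points + 1)]


def follow(instruction: str, position: Point = (0.0, 0.0),
           heading: Heading = (0, 1)) -> List[Point]:
    """Run the pipeline and return the sequence of predicted waypoints."""
    waypoints = []
    for command in encode_instruction(instruction):
        waypoint, heading = generate_waypoint(position, heading, command)
        plan_trajectory(position, waypoint)  # would go to the low-level controller
        waypoints.append(waypoint)
        position = waypoint
    return waypoints


print(follow("turn right at the traffic light, then turn left at the second intersection"))
# prints [(10.0, 0.0), (10.0, 10.0)]
```

Waypoints are regenerated one command at a time from the vehicle's current state, which mirrors the idea that no global map is needed, only the instruction and the local surroundings.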

Long-Turn Interaction

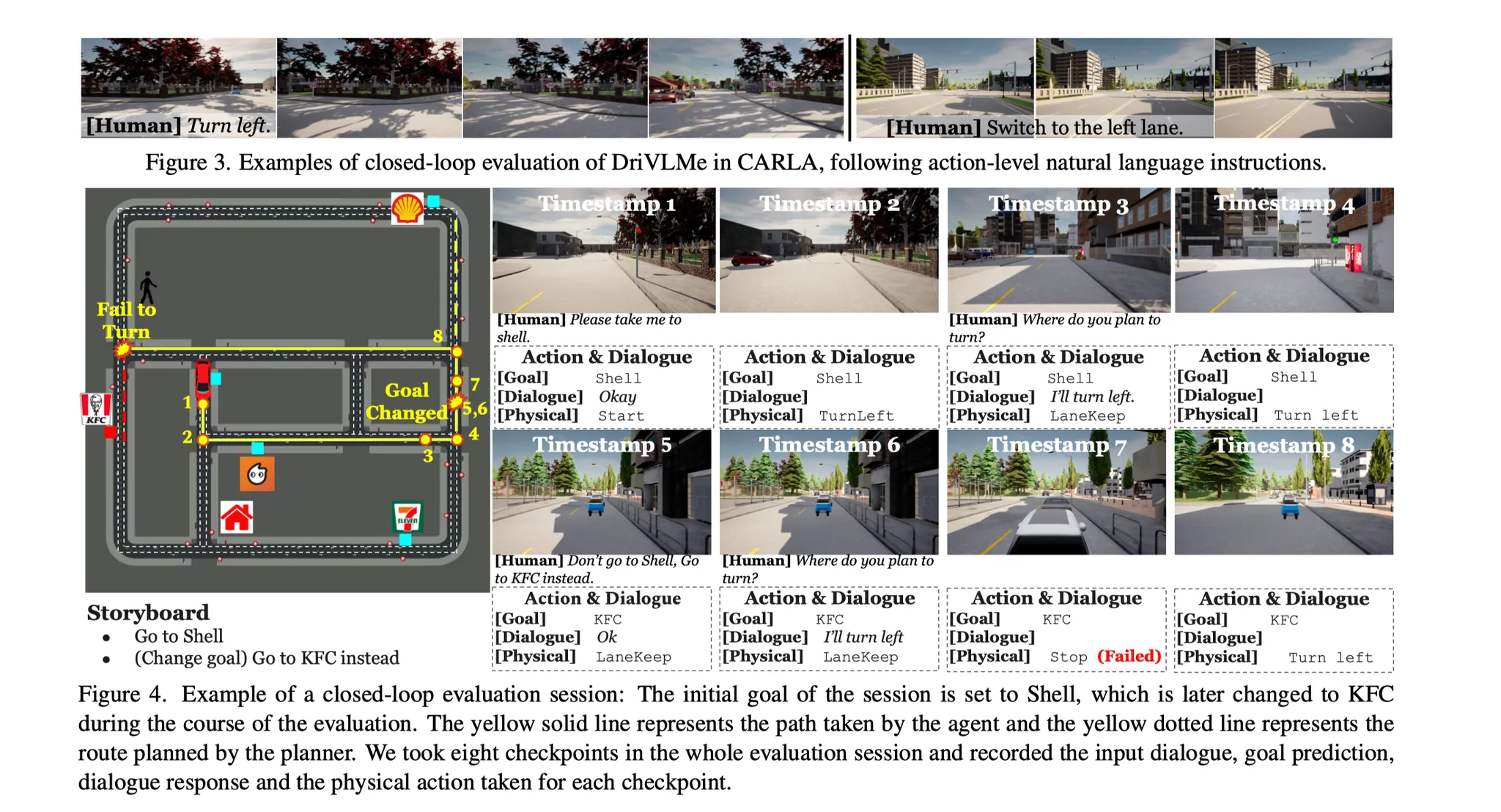

DriVLMe: Enhancing LLM-based Autonomous Driving Agents with Embodied and Social Experiences

The motivation of the article is to address the limitations of existing autonomous driving technologies in complex real-world driving scenarios, especially the problems of long-duration navigation tasks and free-form dialogue interactions. Although current foundation models (FMs) have shown the potential to handle short-term tasks, they still face challenges when dealing with environmental dynamics, task changes, or long-term human-vehicle interactions:

Existing autonomous driving systems are proficient at executing simple, short-term tasks like turning or overtaking but fall short when it comes to understanding broader, goal-oriented tasks that involve route planning and map knowledge. Additionally, these systems struggle to handle unexpected situations that arise from sensor limitations, environmental changes, or shifts in task requirements. Traditional systems are also limited in managing natural language dialogue, making it difficult for them to engage in complex, multi-turn interactions with passengers, especially in dynamic and evolving environments where continuous context understanding and appropriate responses are essential.

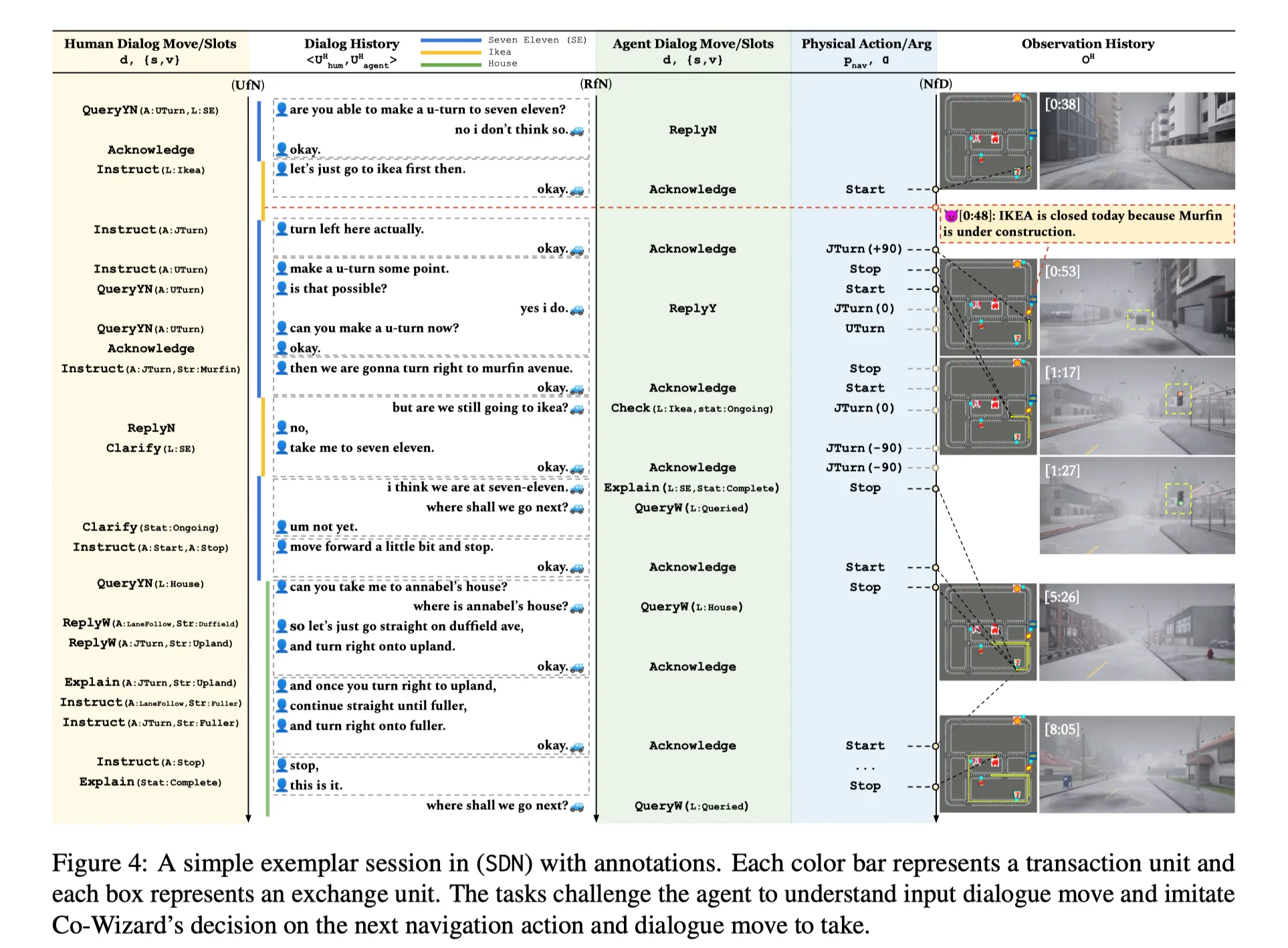

DOROTHIE: Spoken Dialogue for Handling Unexpected Situations in Interactive Autonomous Driving Agents

Motivation: User interaction with autonomous driving systems is often multi-turn, involving complex, long-duration instruction processing. To support this, the system must be able to handle long-horizon instructions, such as mapless navigation and complex multi-turn instructions. Traditional navigation systems only need to provide a path and execute it, but the new interaction mode requires the system to adapt flexibly to new instructions, even dynamically adjusting paths and goals during the task.

Potential Research Directions

- Handling Ambiguity in Language Instructions: Language often contains vague or uncertain expressions, like “go forward a bit, then turn right.” Without adequate context understanding, such vague instructions may lead to navigation errors.

  - Potential research direction: Employing advanced language models (e.g., the GPT series) that are better equipped to interpret ambiguous language, handle diverse expressions, and reason based on context.

- Semantic Understanding of Environment: The current system uses a waypoint generation network that combines semantic information and language encoding to produce local waypoints. However, this integration is still “static.”

  - Challenges:

    - The system assumes that combining language encoding with the current semantic map suffices, but real-world scenarios often involve mismatches between environmental perception and language instructions. For instance, there could be multiple “left turns,” making it unclear which one to follow.

    - While LLMs can handle complex multimodal data, the current system lacks the deeper interaction and reasoning needed to optimally use environmental and language information together.

- Lack of Long-Term Understanding: Although the system’s local planning approach offers flexibility, it lacks a global perspective. Because only local waypoints are planned, the system may not find the most efficient path, potentially leading to detours or longer routes.

- Inadequate Exception Handling Capabilities: The system’s reliance on real-time sensor input and waypoint updates can make it vulnerable to unexpected situations if sensor data fails or feedback is inaccurate.

  - Challenges:

    - The current system might fail to respond effectively to emergencies, such as unexpected obstacles or environmental changes.

    - Although LLMs provide robust reasoning and language processing, integrating these models with dynamic environmental feedback remains limited.

  - Potential research direction: Investigating reinforcement learning (RL) or feedback mechanisms based on historical data to help the system adjust autonomously during emergencies and dynamically optimize its path. Additionally, integrating LLMs with sensor feedback could enhance the response to complex conditions.
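To make the “Lack of Long-Term Understanding” point above concrete, here is a toy comparison between a purely local choice rule and a global shortest-path search. It is a sketch under stated assumptions: the road graph `TOY_GRAPH`, its edge costs, and the function names are invented for illustration, and the greedy rule (always take the cheapest next segment) is only a crude proxy for waypoint-by-waypoint planning, not the behaviour of any system discussed here.

```python
import heapq
from typing import Dict, List, Tuple

Graph = Dict[str, Dict[str, float]]

# Hypothetical road graph: edge weights are travel costs between junctions.
TOY_GRAPH: Graph = {
    "A": {"B": 1.0, "C": 4.0},
    "B": {"D": 10.0},
    "C": {"D": 1.0},
    "D": {},
}


def greedy_local_route(graph: Graph, start: str, goal: str) -> Tuple[List[str], float]:
    """Local view only: at each junction, take the cheapest unvisited next segment."""
    path, cost, node = [start], 0.0, start
    visited = {start}
    while node != goal:
        options = {n: w for n, w in graph[node].items() if n not in visited}
        if not options:
            raise ValueError("dead end reached before the goal")
        nxt = min(options, key=options.get)
        cost += options[nxt]
        path.append(nxt)
        visited.add(nxt)
        node = nxt
    return path, cost


def dijkstra_route(graph: Graph, start: str, goal: str) -> Tuple[List[str], float]:
    """Global view: Dijkstra's algorithm over the whole graph."""
    queue = [(0.0, start, [start])]
    settled: Dict[str, float] = {}
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return path, cost
        if node in settled and settled[node] <= cost:
            continue  # already reached this junction at least as cheaply
        settled[node] = cost
        for nxt, weight in graph[node].items():
            heapq.heappush(queue, (cost + weight, nxt, path + [nxt]))
    raise ValueError("goal unreachable")


print(greedy_local_route(TOY_GRAPH, "A", "D"))  # prints (['A', 'B', 'D'], 11.0)
print(dijkstra_route(TOY_GRAPH, "A", "D"))      # prints (['A', 'C', 'D'], 5.0)
```

In this toy graph the locally cheapest first segment leads to a route more than twice as costly as the global optimum, which is the kind of detour a planner without a global perspective can produce.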

Advanced Frameworks

- World Model Based on Real Data: Research increasingly focuses on using real data to train systems for better environmental cognition, enabling systems not only to process immediate perceptual data but also to predict and anticipate complex traffic situations. This model aids in handling variable traffic scenarios, especially in dynamically changing or uncertain environments.

- Language Generation and Embodied Experiences: Language generation should go beyond preset corpora by incorporating embodied experiences. Autonomous driving systems can benefit from extracting and interpreting information from the environment and translating it into natural language feedback. In complex traffic situations, the system could assess road conditions in real time and communicate this to users.