Week 5: June 30 ~ July 06

Preliminaries

In Week 5 of my GSoC journey with RoboticsAcademy, I focused on preparing data for training an autonomous driving model. The goal was to enable a deep learning-based F1 car to follow a track by learning from real movement data in a simulated environment.

To achieve this, I began collecting sample datasets from the Follow-Line circuits provided within the RoboticsAcademy simulations. These datasets will later be used to train a model that learns control behavior from camera images paired with motion parameters: linear velocity (V) and angular velocity (W).

This dataset collection phase is crucial for building an end-to-end autonomous driving exercise that integrates vision and control using deep learning.

Objectives

- Capture synchronized camera images, linear velocity (V), and angular velocity (W) as the F1 car navigates the circuits

- Collect the data in an organized way

- Crop the collected images to focus on the road area for training

Execution

Types of Follow-Line Circuits

RoboticsAcademy provides a variety of circuits to simulate line-following behavior under different levels of difficulty and complexity.

There are a total of eight circuits used in line-following exercises. Four of these circuits are designed for general-purpose line-following, while the other four are specifically intended for Ackermann steering vehicles.

Line-Following Circuits

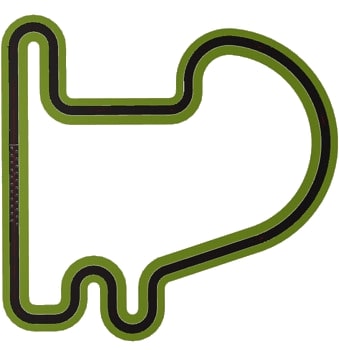

Simple Circuit


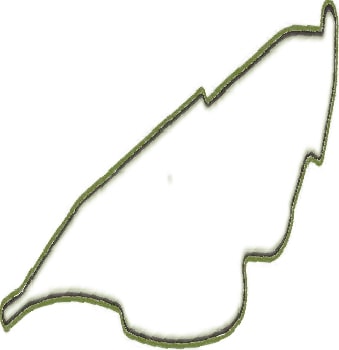

Montreal Circuit


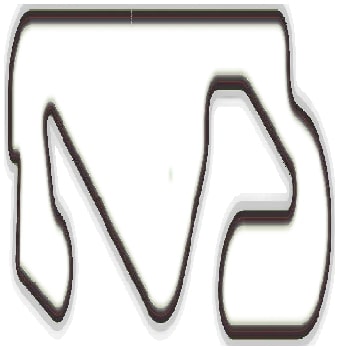

Montmelo Circuit


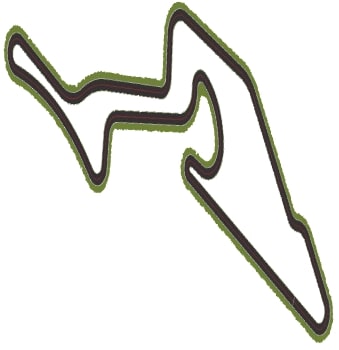

Nürburgring Circuit

Data Collection Workflow

To generate training data for the deep learning-based line-following model, I ran the Follow-Line exercises in RoboticsAcademy using a custom Python script. This script was designed to:

- Capture camera images from the simulation in real-time.

- Log the control commands, linear velocity (V) and angular velocity (W), that the PID controller issued for each image frame.

- Save the data in a structured format:

  - Images were saved in a directory (e.g., `data/images/`).

  - Metadata (image path, V, W) was logged in a CSV file.

Since the RoboticsAcademy exercises run inside a Docker container, all collected data was initially stored within the container's filesystem. After the collection process, I accessed the container's storage and copied the entire dataset (images + CSV) to my local machine for further preprocessing and model training. This workflow let me automate data collection while keeping the development environment isolated and reproducible.
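The logging step described above can be sketched roughly as follows. This is not the actual collection script: the `DatasetLogger` class, the `data.csv` file name, the column names, and the zero-padded file numbering are all assumptions made for illustration. To stay free of third-party dependencies, the sketch accepts frames that are already encoded as PNG bytes; in the real exercise the frame would come from the simulator camera and be encoded first.

```python
import csv
from pathlib import Path


class DatasetLogger:
    """Write one image file and one CSV row (image path, V, W) per frame.

    Hypothetical helper: layout and names are illustrative assumptions.
    """

    def __init__(self, root):
        self.root = Path(root)
        self.image_dir = self.root / "images"
        self.image_dir.mkdir(parents=True, exist_ok=True)
        self.csv_path = self.root / "data.csv"
        self.count = 0
        # Write the header once so the CSV is self-describing.
        with self.csv_path.open("w", newline="") as f:
            csv.writer(f).writerow(["image_path", "v", "w"])

    def log(self, png_bytes, v, w):
        """Save one encoded frame and append its control commands."""
        rel_path = Path("images") / f"{self.count:06d}.png"
        (self.root / rel_path).write_bytes(png_bytes)
        with self.csv_path.open("a", newline="") as f:
            csv.writer(f).writerow([rel_path.as_posix(), v, w])
        self.count += 1
        return rel_path
```

Calling `logger.log(frame_bytes, v, w)` once per control-loop iteration keeps each image and its V/W pair in step, because the file and the CSV row are written in the same call.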

Data Collection Summary

I ran a single loop of each circuit and collected a total of 84,969 images from the follow-line circuits using the simulated Follow-Line exercise in RoboticsAcademy. Each image was captured along with the corresponding control commands, linear velocity (V) and angular velocity (W), to support supervised learning for autonomous navigation. The images were captured at a resolution of 640×480 pixels, providing a clear view of the road and surrounding environment.

To keep the dataset well-organized and easy to use for training deep learning models, I indexed it in a single CSV file. Each row in the CSV contains:

- Image path (`images/12345.png`)

- Linear velocity (V): the forward speed of the car

- Angular velocity (W): the rotational speed of the car, i.e. how sharply it is turning
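As a rough illustration of how such a CSV could be read back for training, here is a minimal loader sketch. The column names `image_path`, `v`, and `w` and the function name `load_samples` are assumptions, since the post does not state the actual header; image paths are assumed to be relative to the folder that holds the CSV.

```python
import csv
from pathlib import Path


def load_samples(csv_path):
    """Return a list of (image_path, v, w) tuples from the dataset CSV.

    Assumes a header row with the columns image_path, v, w.
    """
    csv_path = Path(csv_path)
    samples = []
    with csv_path.open(newline="") as f:
        for row in csv.DictReader(f):
            # Resolve the stored relative path against the CSV's folder.
            image_path = csv_path.parent / row["image_path"]
            samples.append((image_path, float(row["v"]), float(row["w"])))
    return samples
```

Each tuple pairs one image with its two labels, which is the shape a supervised training loop typically expects.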

Image Preprocessing: Cropping for Region of Interest

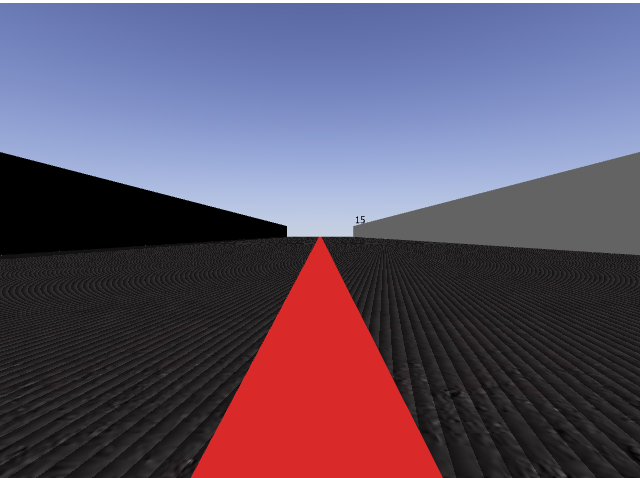

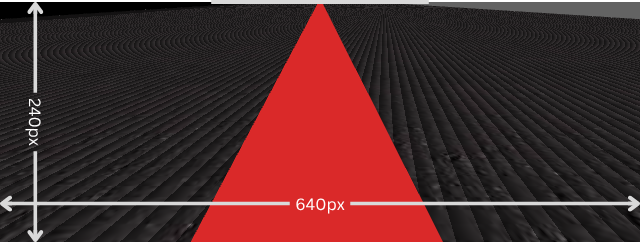

To prepare the dataset for training, I performed a cropping operation on each captured image. The original images were of size 640×480 pixels, which included irrelevant portions such as the sky and background.

A research paper shared by my mentors uses the same image cropping technique, which supports the approach taken for this dataset.

To focus the model on the road and track area (red line), I cropped the images to 640×240 pixels, removing the top section that primarily contained the sky and background. The model input then contains only the most relevant visual information for line following, which should improve both training efficiency and model accuracy.
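The crop itself amounts to dropping the top rows of each frame. The sketch below assumes that exactly the top 240 of the 480 rows are removed, which is what a 640×240 result with the top section removed implies; the function name `crop_road_region` is hypothetical. It slices on the first (row) axis only, so it works the same way on a NumPy image array of shape (height, width, channels) as on the plain list of rows used here to keep the example dependency-free.

```python
def crop_road_region(image, keep_height=240):
    """Return the bottom `keep_height` rows of a row-major image.

    Works on anything indexed by row first, such as a NumPy array of
    shape (height, width, channels) or a plain list of rows.
    """
    height = len(image)
    if not 0 < keep_height <= height:
        raise ValueError(f"keep_height must be in 1..{height}, got {keep_height}")
    # Dropping the first (height - keep_height) rows removes the sky/background.
    return image[height - keep_height:]


# A stand-in 480-row by 640-column frame; each pixel stores its row index.
frame = [[row] * 640 for row in range(480)]
cropped = crop_road_region(frame)
print(len(cropped), len(cropped[0]))  # rows and columns after cropping
```

Cropping before training also halves the number of pixels the network has to process per image.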

Original image and cropped image

References

[2] [paper] Imitation Learning for vision based Autonomous Driving with Ackermann cars

[3] PID Controller
