Week 4 (Jun 23 - Jul 3)

Meeting with Mentors

- This week’s meeting began with an update on my progress. I shared details about the `DetectionMetricsFactory` class I created. David suggested that a common base class could be used, from which both `SegmentationMetricsFactory` and `DetectionMetricsFactory` can be derived.

- We discussed whether to use Streamlit or Gradio for the UI. The consensus was that the choice depends on our end goal. Sergio clarified that the goal is to build a full application (not just a demo), so we decided to proceed with Streamlit. This will allow us to support future UI advancements more easily.

- I asked the mentors about their idea for the UI design. They shared details and references from the UI used in v1, which I will use as inspiration.

- Finally, I discussed an error I encountered while implementing the example notebook. The issue was due to an improperly defined ontology translation. David provided helpful suggestions on how to define the ontology correctly.
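To make David's base-class suggestion concrete, here is a minimal sketch of how the two factories could share one parent. Only the names `SegmentationMetricsFactory` and `DetectionMetricsFactory` come from the project. The base class name `MetricsFactory`, the `update`/`precision`/`recall` methods, the input formats, and the greedy IoU matching are all assumptions made for illustration, not the actual implementation.

```python
from abc import ABC, abstractmethod


def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class MetricsFactory(ABC):
    """Hypothetical shared base: holds per-class counts and common metrics."""

    def __init__(self, n_classes):
        self.n_classes = n_classes
        self.tp = [0] * n_classes
        self.fp = [0] * n_classes
        self.fn = [0] * n_classes

    @abstractmethod
    def update(self, pred, gt):
        """Accumulate counts for one sample; each task defines its own matching."""

    def precision(self, class_id):
        denom = self.tp[class_id] + self.fp[class_id]
        return self.tp[class_id] / denom if denom else float("nan")

    def recall(self, class_id):
        denom = self.tp[class_id] + self.fn[class_id]
        return self.tp[class_id] / denom if denom else float("nan")


class SegmentationMetricsFactory(MetricsFactory):
    def update(self, pred, gt):
        # Assumed input: flat sequences of per-pixel class ids.
        for p, g in zip(pred, gt):
            if p == g:
                self.tp[g] += 1
            else:
                self.fp[p] += 1
                self.fn[g] += 1


class DetectionMetricsFactory(MetricsFactory):
    def __init__(self, n_classes, iou_threshold=0.5):
        super().__init__(n_classes)
        self.iou_threshold = iou_threshold

    def update(self, pred, gt):
        # Assumed input: lists of (class_id, (x1, y1, x2, y2)) tuples.
        unmatched = list(gt)
        for cls, box in pred:
            best, best_iou = None, self.iou_threshold
            for i, (gt_cls, gt_box) in enumerate(unmatched):
                overlap = iou(box, gt_box)
                if gt_cls == cls and overlap >= best_iou:
                    best, best_iou = i, overlap
            if best is None:
                self.fp[cls] += 1  # no ground-truth box matched this prediction
            else:
                self.tp[cls] += 1
                unmatched.pop(best)
        for gt_cls, _ in unmatched:
            self.fn[gt_cls] += 1  # ground-truth boxes nobody predicted
```

With this layout, only the matching step in `update` differs per task, while the counting and the precision/recall formulas live in one place.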

To Do for This Week

- Test and fix all issues in the code developed so far.

- (Partially done for small dataset) Have a working example for DetectionMetrics using COCO and a Torch model.

- Design the basic UI and get feedback from mentors.

- Get familiar with Streamlit to kickstart UI development.

Progress

- I started by working on the example notebook for detection metrics. The `KeyError` I faced last week was due to ontology translation issues and because the model’s integer output wasn’t converted to a string before being used as a key. This is now resolved.

- I restructured `coco.py` and `torch_detection.py` further after comparing them to the segmentation pipeline, aiming for consistency between both pipelines.

- The example notebook now runs fine. However, when loading larger datasets, I encountered the error:

  `[srcBuf length] > 0 INTERNAL ASSERT FAILED at "/Users/runner/work/pytorch/pytorch/pytorch/aten/src/ATen/native/mps/OperationUtils.mm":341, please report a bug to PyTorch. Placeholder tensor is empty!`

  I tried to debug this by ensuring empty images aren’t loaded into the model, but the error persisted. I’m unsure if this is a model or MPS issue. Further investigation was blocked by kernel crashes. Resolving these MPS issues is still pending, but I was able to run the notebook and get results for a small dataset of 5 images.

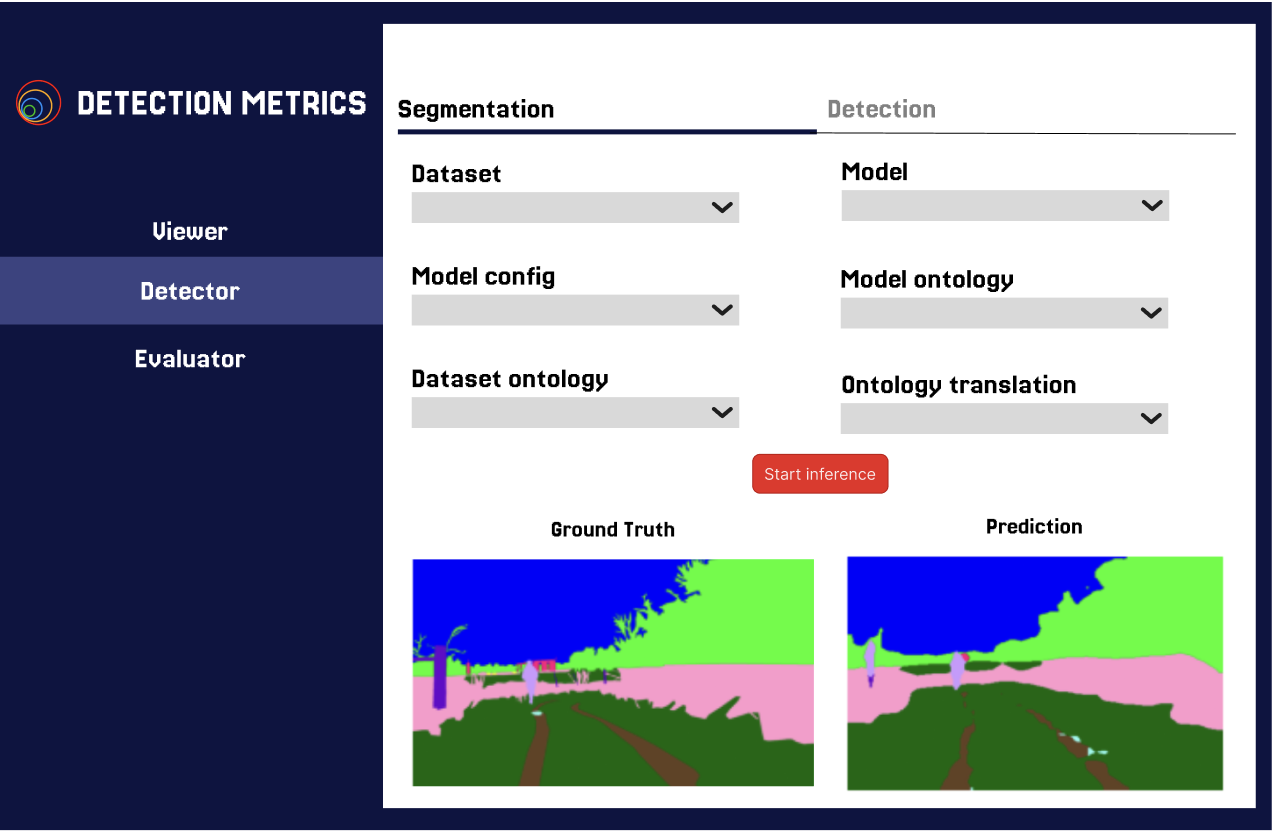
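For reference, the `KeyError` described above can be reproduced in a few lines. This is a minimal sketch that assumes the ontology is loaded from JSON, where object keys are always strings. The mapping and the `label_name` helper are hypothetical and are not taken from the project code.

```python
import json

# Hypothetical ontology for illustration; JSON object keys are always strings.
ontology = json.loads('{"0": "person", "1": "bicycle", "2": "car"}')


def label_name(class_idx, ontology):
    """Look up a class name, converting the integer model output to a string key."""
    return ontology[str(int(class_idx))]


predicted = 2  # stand-in for an integer class index coming out of the model

try:
    ontology[predicted]  # integer key: this is the lookup that fails
except KeyError:
    print("KeyError: integer index used against string keys")

print(label_name(predicted, ontology))
```

Doing the conversion in one helper keeps the lookup consistent wherever model outputs are mapped to ontology entries.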

- Next, I explored UI design. I started by building a Figma design for the UI: DetectionMetrics Figma

- I thought having Viewer, Detector, and Evaluator tabs in the sidebar would be relevant (similar to previous versions). Each tab will support both segmentation and detection. In Viewer, users can upload datasets and view sample images with ground truth masks/bounding boxes. In Detector, users can get inference for segmentation/detection. In Evaluator, all metrics will be displayed in the UI.

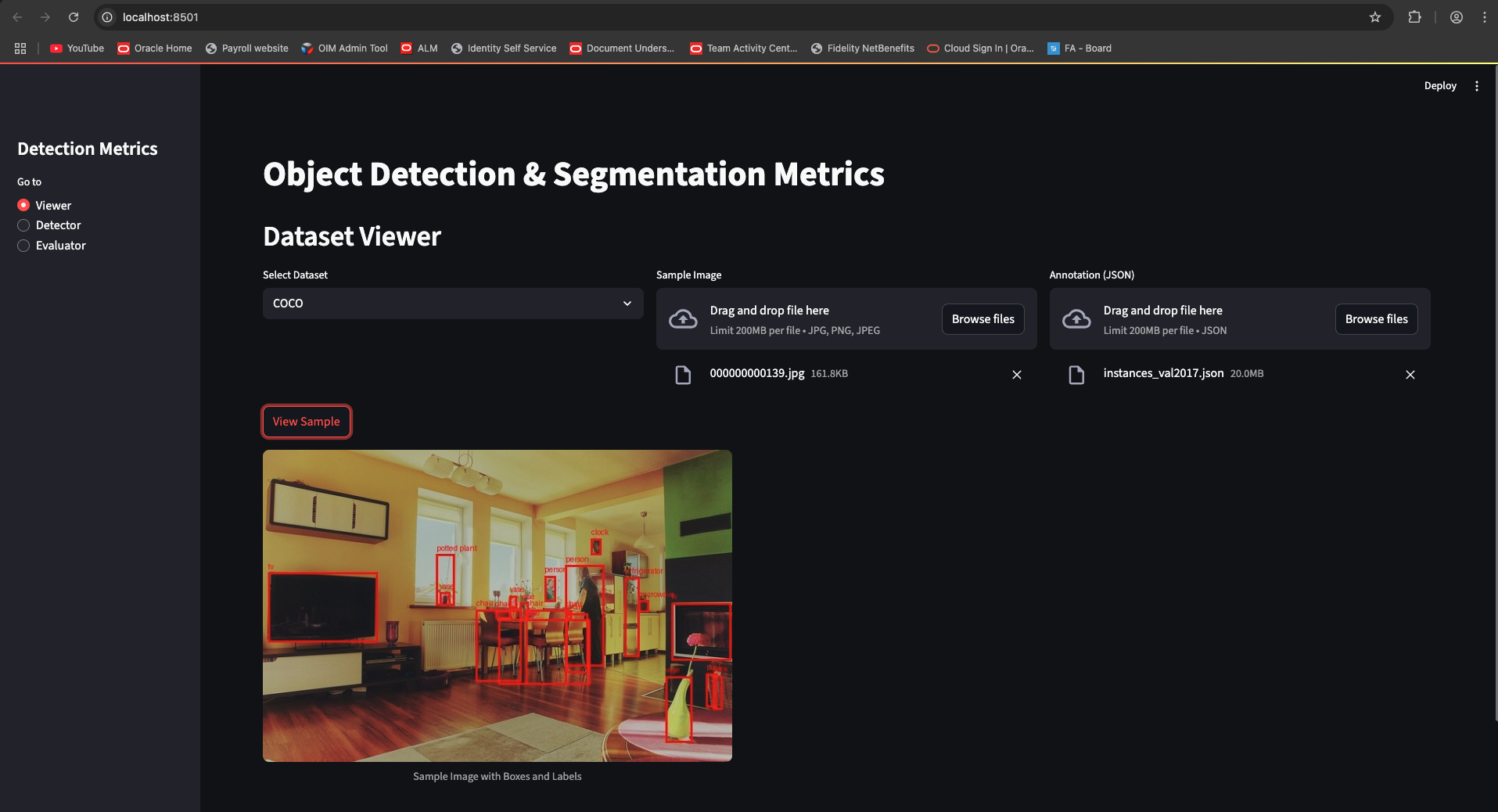

- I then began learning how Streamlit works. I created a demo app: `streamlit_demo.py`. The app features three sections in the sidebar. In the dataset tab, users can upload a sample image and select the annotation. On clicking View Sample, the image with bounding boxes is displayed in the UI.

What’s Next?

My main goal for next week is to get the draft PR merged. Before that, I will thoroughly test the code, fix the remaining bugs, and clean up the codebase. I also plan to make significant progress on the UI and get the basic implementation done.
