Week 10


GSoC Coding Week 10 Progress Report

Week 10: Building the PERCEPTION API and Refining Filters

This week focused on stabilizing the perception system and creating a clean interface for interacting with the PCL filter server in ROS2. After the initial migration and fixes from the past two weeks, the goal was to make perception reliable and modular.

What I Did This Week

- Developed a new PERCEPTION API to handle all perception-related functions.

  - This API communicates directly with `pcl_filter_server.cpp` to call the color filter, shape filter, and reset functions.

  - It provides a cleaner and more modular way to manage perception tasks in ROS2.

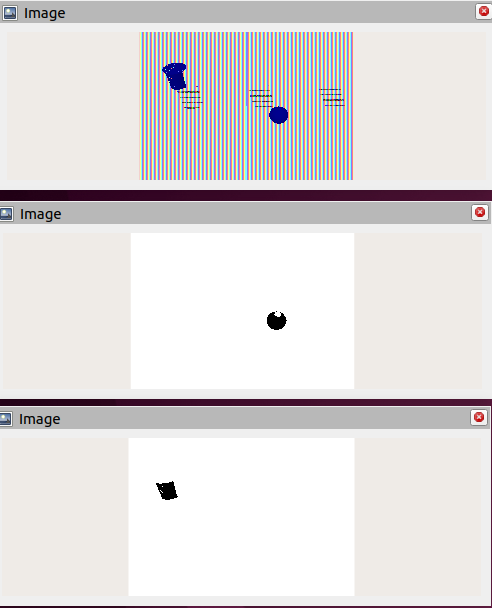

- Refined the color and shape filters for better stability in the ROS2 environment.

- Conducted experiments to test how the position of objects affects detection accuracy.
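To illustrate the idea of a thin, modular layer in front of the filter server, here is a minimal sketch in C++. It is not the actual PERCEPTION API: the class name `PerceptionApi`, its methods, and the command strings (`"color_filter"`, `"shape_filter"`, `"reset"`) are hypothetical, and the transport is a plain callback so the example compiles without ROS2. In a real node, that callback would presumably wrap whatever topic or service `pcl_filter_server.cpp` listens on.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <utility>
#include <vector>

// Transport abstraction (assumption): a callback that delivers a command and
// its argument to the filter server. In ROS2 this could wrap a publisher or
// a service client; here it is a plain function so the sketch runs standalone.
using SendFn = std::function<void(const std::string& command,
                                  const std::string& argument)>;

// Hypothetical facade that groups the three operations mentioned in the post:
// color filter, shape filter, and reset.
class PerceptionApi {
public:
    explicit PerceptionApi(SendFn send) : send_(std::move(send)) {
        assert(static_cast<bool>(send_));  // a transport must be provided
    }

    // Ask the server to filter the point cloud by color, e.g. "blue".
    void start_color_filter(const std::string& color) {
        send_("color_filter", color);
        active_.push_back("color:" + color);
    }

    // Ask the server to filter the point cloud by shape, e.g. "sphere".
    void start_shape_filter(const std::string& shape) {
        send_("shape_filter", shape);
        active_.push_back("shape:" + shape);
    }

    // Clear every active filter on the server and in the local bookkeeping.
    void reset() {
        send_("reset", "");
        active_.clear();
    }

    // Filters requested since the last reset, in request order.
    const std::vector<std::string>& active_filters() const { return active_; }

private:
    SendFn send_;
    std::vector<std::string> active_;
};
```

Keeping the transport behind a callback means the calling code only sees `start_color_filter`, `start_shape_filter`, and `reset`, and the same class can be exercised in a unit test by passing a lambda that records the commands.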

Problems I Encountered

Shape filter instability: last week's issue persisted, with the system sometimes classifying a sphere as a cylinder.

Blue detection issue: occasionally, the system failed to detect the blue sphere even when all other objects were filtered correctly.

Solutions Implemented

- Improved shape detection: updated the `void detect_cylinder` and `void detect_sphere` functions in `pcl_filter_server.cpp` to make them more reliable.

- Camera setup adjustment: since detection accuracy is also influenced by camera position, I experimented with several positions and angles.

  - The old exercise already had limited camera placement options, so I changed the size and placement of the objects to bring them closer to the camera.

  - This significantly improved detection stability.
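One plausible reason why moving objects closer helps is that they cover more pixels, so the filters have more points to fit. The sketch below uses the standard pinhole camera approximation (projected radius ≈ focal length × real radius / distance) to estimate that coverage for a sphere. It is an illustration only: the function names are hypothetical, and the numbers in the comments (a 500 px focal length and a 3 cm sphere) are assumed values, not the exercise's real camera parameters.

```cpp
#include <cassert>

// Approximate image radius, in pixels, of a sphere seen by a pinhole camera.
// focal_px: focal length in pixels; radius_m: sphere radius in meters;
// distance_m: distance from the camera to the sphere center in meters.
// The approximation is reasonable when the sphere is small relative to
// its distance from the camera.
double projected_radius_px(double focal_px, double radius_m, double distance_m) {
    assert(focal_px > 0.0 && radius_m > 0.0 && distance_m > radius_m);
    return focal_px * radius_m / distance_m;
}

// Approximate number of pixels covered by the sphere's image (disc area).
// For a depth camera, this is roughly the number of points available to
// the shape-fitting step.
double projected_area_px(double focal_px, double radius_m, double distance_m) {
    constexpr double kPi = 3.14159265358979323846;
    const double r = projected_radius_px(focal_px, radius_m, distance_m);
    return kPi * r * r;
}

// Example with assumed values: a 3 cm sphere and a 500 px focal length.
// At 1.0 m the image radius is about 15 px; at 0.5 m it is about 30 px,
// so the covered area grows by a factor of four.
```

Because the covered area scales with the inverse square of the distance, halving the distance roughly quadruples the number of points on the object, which is consistent with the improved stability observed after moving the objects closer.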

Conclusion

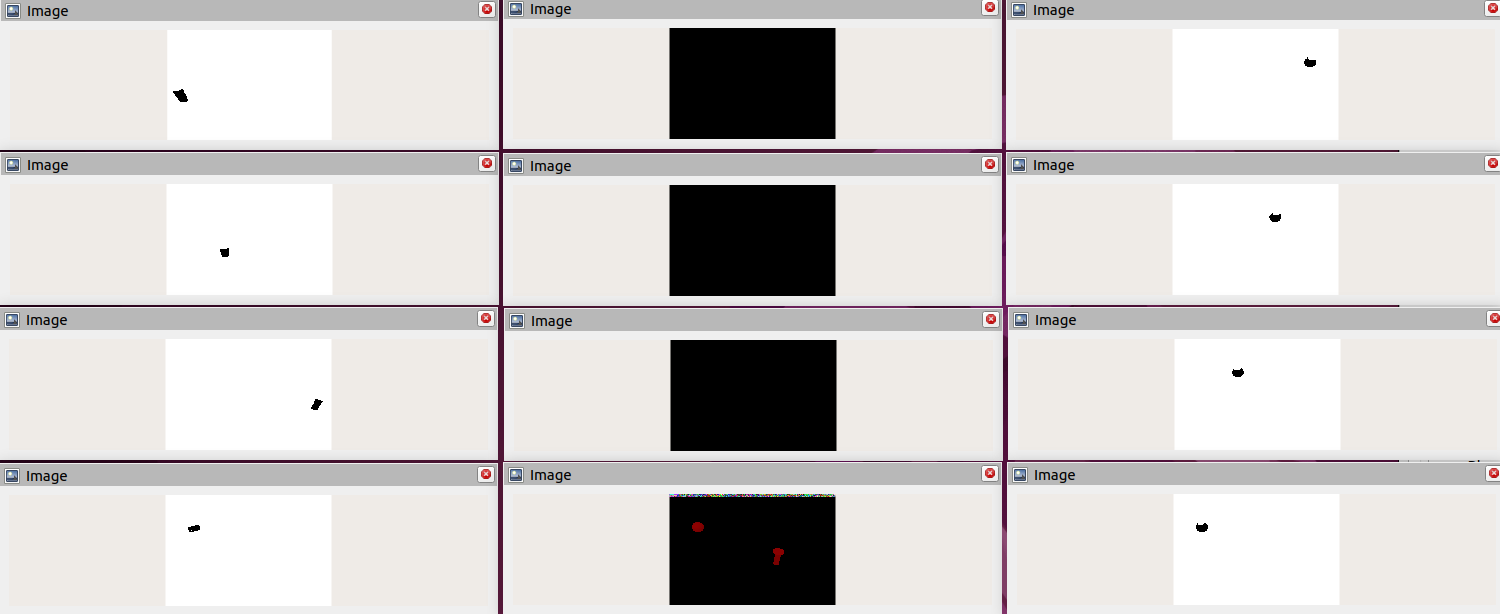

PCL Filter Stability Demo

After two weeks of focused work, `pcl_filter_server.cpp` is now stable and functional in ROS2.

- The PERCEPTION API provides a modular way to interact with the perception system.

- Both color and shape filters perform reliably, and adjustments to object placement have improved detection consistency.

With a robust perception pipeline in place, the next phase will be to connect these outputs directly into the motion planning workflow for pick-and-place execution.

For Week 11, I plan to:

- Test the HAL API for manipulation

- Begin connecting perception outputs to motion planning for pick-and-place execution

📍 Posted from Barcelona, Spain

🧠 Project: Migration and Enhancement of Machine Vision Exercise to ROS2 + MoveIt2