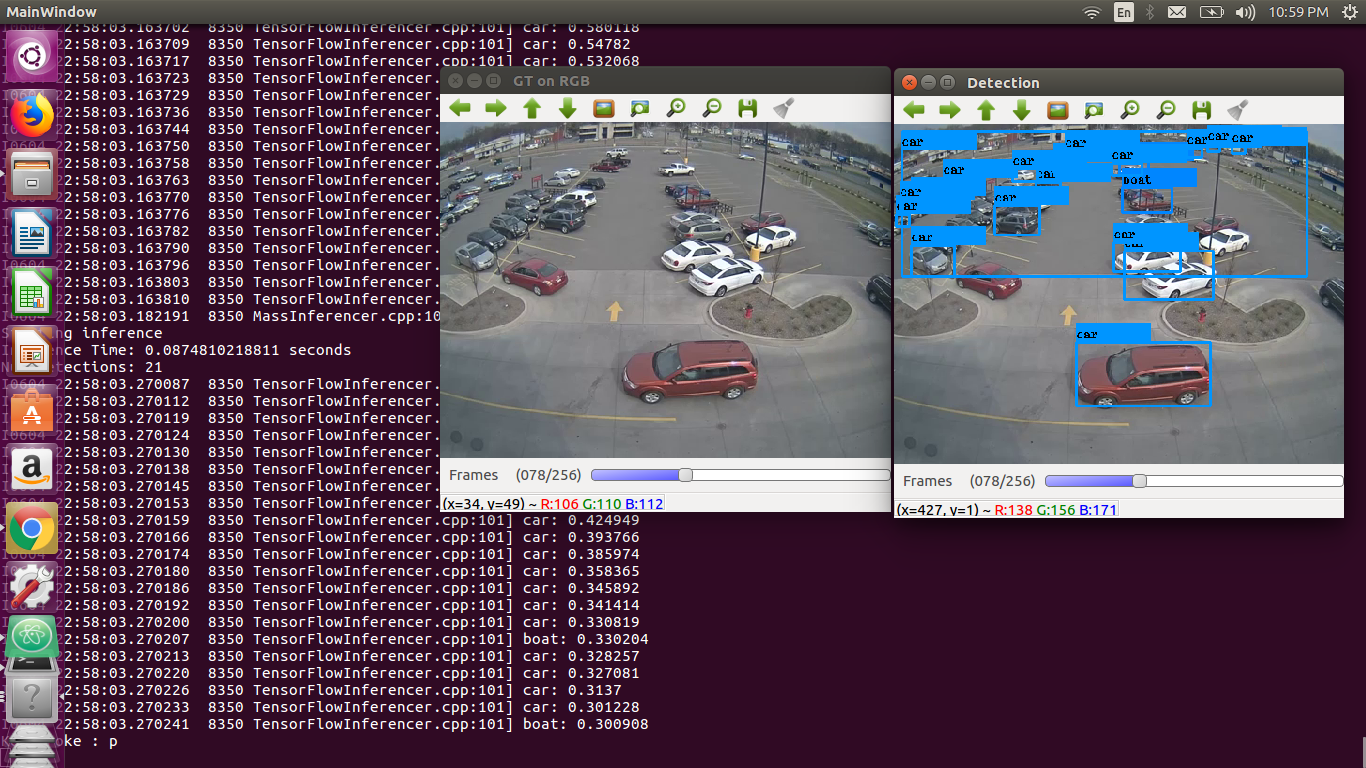

Playback options for DetectionSuite

Week 1: Adding Playback functionalities to DetectionSuite

- Task 1: Done implementing pause functionality

- Task 2: Done adding trackbar

- Task 3: Done adding playback rates

- Task 4: Finished Week 1’s proposed tasks

New Features!

- You have deployed your precious weights on a video and are waiting for them to detect something important to you (maybe some special “class”). When it finally gets detected and you want to freeze that moment for eternity, you can do so with the new pause functionality.

- If you are deploying on a really boring video but cannot risk missing any of it, good news: you can now increase the playback speed and watch the inferenced video at 1.5x, 2x or even higher rates!

- Now suppose you are drinking hot coffee and spill it by mistake, and in the process you miss the crucial part of the video you are running inference on. No worries, you can now slide backward and watch it again!

As described in the proposal, the first week of my GSoC was dedicated to adding the playback functionalities mentioned above (pause, playback rates, and a trackbar) to DetectionSuite. They are implemented as class definitions in playback.hpp, with the implementations in playback.cpp.
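To make the three features concrete, here is a minimal sketch of the kind of state such a playback class has to track. It is not the actual content of playback.hpp: the class name `PlaybackState`, its methods, and the rate limits of 0.25x to 8x are all assumptions chosen for illustration, and the sketch deliberately leaves OpenCV out so it stays self-contained.

```cpp
#include <algorithm>
#include <cmath>

// Hypothetical playback state (illustrative names, not DetectionSuite's API).
class PlaybackState {
public:
    PlaybackState(int frameCount, double fps)
        : frameCount_(frameCount), fps_(fps) {}

    // Pause: flip between playing and frozen.
    void togglePause() { paused_ = !paused_; }
    bool paused() const { return paused_; }

    // Playback rate: 1.0 is normal speed, 2.0 is twice as fast.
    // The 0.25x..8x limits are an arbitrary assumption.
    void setRate(double rate) { rate_ = std::min(8.0, std::max(0.25, rate)); }
    double rate() const { return rate_; }

    // Trackbar: jump to any frame, clamped to the valid range.
    void seek(int frame) {
        current_ = std::max(0, std::min(frame, frameCount_ - 1));
    }

    // Move to the next frame unless paused or already at the last frame.
    // Returns true if the position changed.
    bool advance() {
        if (paused_ || current_ >= frameCount_ - 1) return false;
        ++current_;
        return true;
    }

    int currentFrame() const { return current_; }

    // Milliseconds to wait between frames at the current rate.
    // Never returns 0, because a zero delay passed to a call such as
    // cv::waitKey would block until a key is pressed.
    int delayMs() const {
        long ms = std::lround(1000.0 / (fps_ * rate_));
        return static_cast<int>(std::max(1L, ms));
    }

private:
    int frameCount_;
    double fps_;
    int current_ = 0;
    double rate_ = 1.0;
    bool paused_ = false;
};
```

In an OpenCV window, one plausible wiring would be to call `seek` from a trackbar callback and pass `delayMs()` to `cv::waitKey` in the display loop, so that a higher rate simply means a shorter wait per frame.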

Work Distribution

The first one or two days were spent researching the different ways to implement these features: integrate the deployer within the suite by making space for it, leave it as is and add the features to the OpenCV window, or create a new Qt window to display it. With too much enthusiasm in the early stage, I decided to go with the last option and create a new Qt window. I then spent a day learning Qt. Only while going through the tutorials did I understand that this is not something done overnight, and that I should stick to something I could pull off in time. That would also let me go through more Qt tutorials while working, since most of my Week 2 is based on Qt.

It then took a day and a half to create the playback header files and experiment with them on a simple video to check how they were functioning. Everything was working fine until Integration Day, when I had to integrate my header file with the suite. I could not even compile my code, because I had added only the header file of the new functionalities to CMakeLists.txt. I got so frustrated by the continuous compile failures that I kept overlooking that part every time I checked the CMake file.

Thanks to Sergio I cooled down a bit, and after spending more than three hours on it I realized where I had gone wrong. After that, the experimentation started again to find where I should include my header file and call the functions. Luckily, I was able to pull this off before my meeting with the mentors.

/colab-gsoc2019-Jeevan_Kumar

Results

A video demonstrating these features can be found here.


Upcoming work

After discussing with my mentors, a few changes were proposed: stop inferencing while paused, add the playback functionalities to the ground truth as well, and keep the two in sync. These come in addition to the already planned Week 2 work.
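One possible shape for the first two changes is sketched below. This is only an assumption about how it could be done, not the planned implementation: `SharedPlayback`, `step`, and the two callbacks are hypothetical names. The idea is that the detection view and the ground-truth view read the same frame index, and the detector is only called when playback actually advances.

```cpp
#include <functional>

// Hypothetical playback position shared by the detection view and the
// ground-truth view, so the two cannot drift apart.
struct SharedPlayback {
    int frame = 0;
    int frameCount = 0;
    bool paused = false;
};

// One iteration of the display loop.
// Returns true if inference ran during this step.
bool step(SharedPlayback& pb,
          const std::function<void(int)>& runInference,
          const std::function<void(int)>& showGroundTruth) {
    bool advanced = false;
    if (!pb.paused && pb.frame + 1 < pb.frameCount) {
        ++pb.frame;
        runInference(pb.frame);  // skipped entirely while paused
        advanced = true;
    }
    showGroundTruth(pb.frame);   // always drawn with the shared index
    return advanced;
}
```

With this layout, pausing costs no detector time, and a seek only has to update `pb.frame` once for both views to follow.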