Final Report

Summary

JdeRobot provides a set of tools for developing robotic applications, including previous work on autonomous driving robots that use algorithms such as classification or regression neural networks. One of the main goals of this project was therefore to combine OpenAI Gym and Gazebo so that reinforcement learning can be used in the current Behavior Studio setup. This involved migrating previous code to Python 3.

During this project I extended JdeRobot's Behavior Studio to support ROS Noetic, and upgraded the existing gym-gazebo library to work with Python 3 and Ubuntu 20.04. I also adapted the current GUI to work with reinforcement learning algorithms.

Contributions

One of my early contributions was a template for running the Q-learning algorithm with OpenAI Gym environments such as CartPole and Breakout. For this task I created and documented a Docker container to make the experiments easier to reproduce. The containers were tested on GPUs using a notebook, which was later modularized into Python scripts.
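To illustrate the kind of loop such a template is built around, here is a minimal sketch of tabular Q-learning with epsilon-greedy exploration. It is not the project's code: `CorridorEnv` is a hypothetical toy environment that only mimics the `reset`/`step` shape of a Gym environment so the example runs without extra dependencies, and all function names and default values are assumptions chosen for illustration.

```python
import random
from collections import defaultdict


class CorridorEnv:
    """Hypothetical 1-D corridor: start in cell 0, reward 1.0 on reaching the last cell."""

    def __init__(self, length=6):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Action 0 moves left, action 1 moves right; the walls clamp the position.
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0
        return self.state, reward, done


def train_q_learning(env, n_actions=2, episodes=300, alpha=0.2, gamma=0.9,
                     epsilon=0.99, epsilon_discount=0.99, max_steps=100, seed=0):
    """Return a Q-table (state -> list of action values) learned by tabular Q-learning."""
    rng = random.Random(seed)
    q = defaultdict(lambda: [0.0] * n_actions)
    for _ in range(episodes):
        state = env.reset()
        for _ in range(max_steps):
            # Epsilon-greedy: explore with probability epsilon, otherwise act greedily
            # (ties between equally valued actions are broken at random).
            if rng.random() < epsilon:
                action = rng.randrange(n_actions)
            else:
                best = max(q[state])
                action = rng.choice([a for a in range(n_actions) if q[state][a] == best])
            next_state, reward, done = env.step(action)
            # Q-learning update: move Q(s, a) toward r + gamma * max_a' Q(s', a').
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
        # Assumed schedule: one multiplicative decay of epsilon per episode.
        epsilon *= epsilon_discount
    return q


if __name__ == "__main__":
    env = CorridorEnv(length=6)
    q_table = train_q_learning(env)
    # Greedy action per non-terminal cell (1 means "move right").
    print([max(range(2), key=lambda a: q_table[s][a]) for s in range(env.length - 1)])
```

A continuous environment such as CartPole would additionally need its observations discretized before they can index a table like this; that step is omitted here.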

Behavior Studio originally supported only Python 2.7. The recent release of ROS Noetic, which supports Python 3, presented a great opportunity to migrate the project to Python 3 and use ROS Noetic directly.

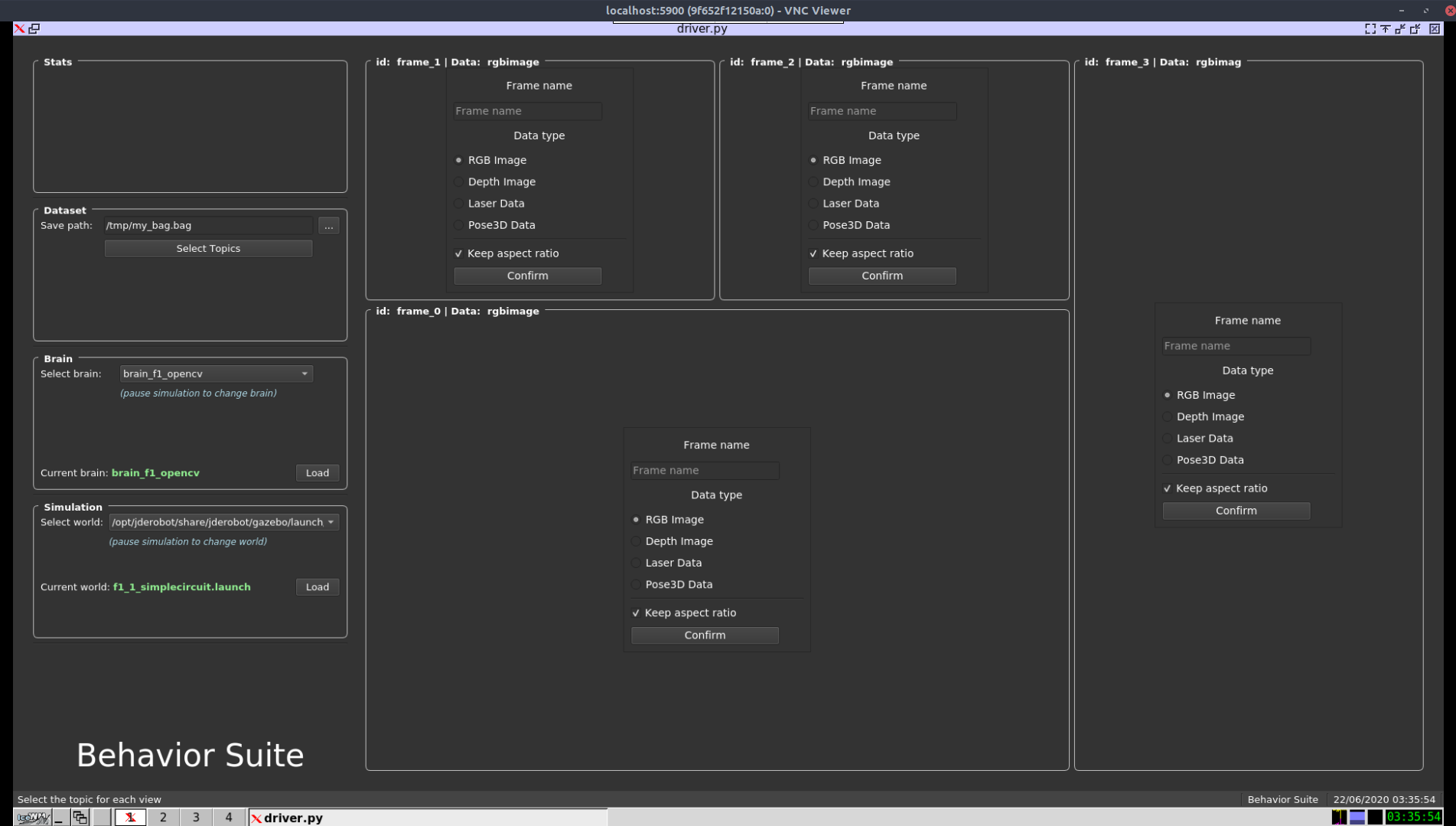

I completed the refactoring to extend support to Python 3 and tested it with the Behavior Studio GUI, as shown in the following figure, which displays the Behavior Studio GUI running inside the Docker container through the VNC viewer.

When the project began, the gym-gazebo library only worked on Ubuntu 18.04 with ROS Melodic. More refactoring was therefore needed to migrate it to Ubuntu 20.04 (Focal Fossa) with ROS Noetic, because this library is a key part of enabling reinforcement learning experimentation within Behavior Studio.

As the project's dependencies grew, it became necessary to make the setup available to others through JdeRobot's Docker Hub. Multiple images were created and maintained as new changes were added to the project. I added guides and tutorials for using the Docker images, and I also posted the user manual, including the installation steps for Behavior Studio on ROS Noetic.

Because Ubuntu 20.04 supports Gazebo 11, the Formula 1 plugins were migrated to this new version and added to the CustomRobot repository, although it was later decided to rely mostly on official ROS plugins.

Once the migration of the gym-gazebo library, Behavior Studio, and the other dependencies was complete, the path was clear to implement reinforcement learning support in Behavior Studio, which until then only worked with brains based on deep learning or OpenCV.

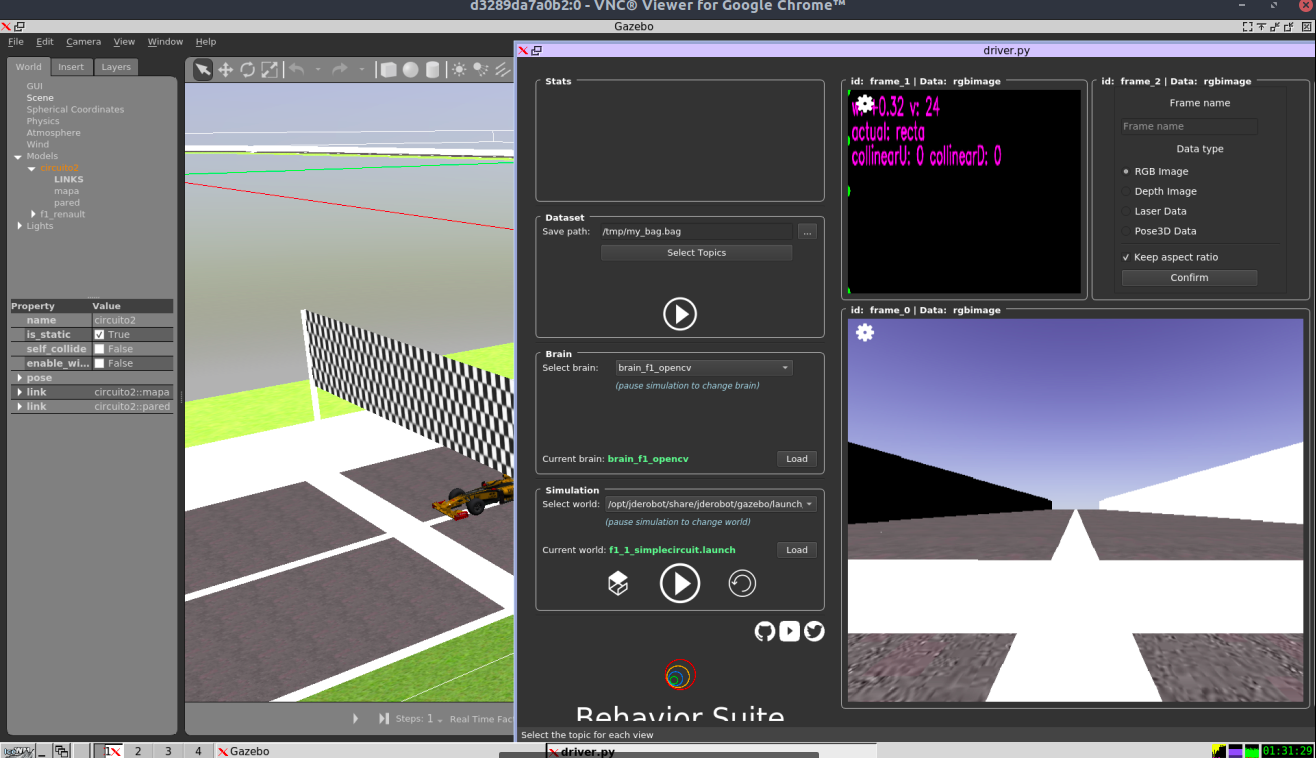

In the final weeks, the Behavior Studio GUI gained support for RL brains, using Q-learning on the Formula 1 circuit. Here is a video of how the training looks inside a container, which allows training on headless remote servers with different host operating systems.

More documentation was also added about how the brains work and how to set different training parameters from the launch file that the Behavior Studio GUI consumes.

    BrainPath: 'brains/f1rl/train.py'
    Type: 'f1rl'
    Parameters:
        action_set: 'simple'
        gazebo_positions_set: 'pista_simple'
        alpha: 0.2
        gamma: 0.9
        epsilon: 0.99
        total_episodes: 20000
        epsilon_discount: 0.9986
        env: 'camera'
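To get a feel for what these values imply, the sketch below computes the exploration rate over the course of training. It assumes that `epsilon` is multiplied by `epsilon_discount` once per episode and that no lower bound is applied; the launch file does not state either, so treat both as assumptions. The helper names `epsilon_at` and `episodes_until` are hypothetical and exist only for this illustration.

```python
import math

# Values copied from the Parameters block of the launch file above.
params = {
    "alpha": 0.2,               # learning rate
    "gamma": 0.9,               # discount factor for future rewards
    "epsilon": 0.99,            # initial exploration rate
    "total_episodes": 20000,
    "epsilon_discount": 0.9986,
}


def epsilon_at(episode, params):
    """Exploration rate after `episode` episodes, assuming one decay step per episode."""
    return params["epsilon"] * params["epsilon_discount"] ** episode


def episodes_until(threshold, params):
    """Smallest number of episodes after which epsilon is at or below `threshold`."""
    ratio = math.log(threshold / params["epsilon"]) / math.log(params["epsilon_discount"])
    return max(0, math.ceil(ratio))


if __name__ == "__main__":
    for episode in (0, 500, 1000, 2000, params["total_episodes"]):
        print(episode, epsilon_at(episode, params))
    print("episodes until epsilon <= 0.05:", episodes_until(0.05, params))
```

Under these assumptions the agent explores almost at random at the start and becomes nearly greedy after roughly the first tenth of the 20000 episodes, so a larger `epsilon_discount` (closer to 1) would keep exploration going for longer.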

Difficulties

Most difficulties arose because I was unfamiliar with some of the existing code, or because I was unsure which approach to take when a problem had several possible solutions.

There were also difficulties related to the project itself, such as unexpected crashes and code compatibility problems. Behavior Studio needs a specific set of requirements to function correctly. To mitigate this, I developed a set of containers that already include those requirements, which also helps new users get started with the tool easily.

Improved skills

Working on this project helped me learn new skills and reinforce previous concepts. It was a great opportunity to delve into new problems and to get to grips with new technologies and tools such as Docker, ROS Noetic, and PyQt, as well as to improve my CMake skills.

I also gained more experience reading code from other contributors, which is essential for continuing to contribute to this and other open source projects.

Summary of contributions to Jderobot

Jderobot base

- Missing libraries in the README for source installation.

- Upgrade to Ubuntu 20.04 Focal Fossa

- Added missing libraries to README and corrected typos

Jderobot custom robots

Jderobot Academy

- Adding environment setup instructions for both base and assets

- Adding environment setup in the installation page

- Corrected some typos in the AutonomousCars guide

Jderobot Behavior Studio

- No module named PyQt5.QtWidgets (python2.7)

- Core dump when loading a brain which saved model cannot be found.

- Update noetic installation guide to support python3.7

- Gym Gazebo for noetic

- [noetic-devel] Error message in logs while using gazebo and behavior studio in docker container

- [noetic-devel] Add docker image with CUDA 11 in ubuntu 20.04

- [Noetic-devel] Improve Dockerfiles documentation

- [Noetic-devel] Setting hyperparameters from config.yml

- [noetic-devel] update dqn code

- [noetic-devel] Update Dockerfiles to use Jderobot’s Behavior studio directly

- Noetic devel

- Upgrading brains to Python3

- adding installation guide for noetic-devel branch

- Adding virtualenv

- Gym gazebo for noetic

- Adding support for RL

- Issue 64

- New image with CUDA11 in ubuntu focal

- minor changes for qlearn

- Updating all dockerfiles with behavior studio repository

- Issue 69

- updating documentation for rl

Further work

There is still a lot of work to be done. Behavior Studio is a new and very exciting project, so I will try my best to keep contributing to it regularly.

I would like to keep adding new reinforcement learning algorithms and new environments to make the platform more diverse. Currently only line following works, so it would be interesting to add obstacles to the environment, either static or dynamic. There are many other good directions for this project as well.

Acknowledgment

Taking part in GSoC was a wonderful experience. I particularly want to thank my mentors, Sergio and David, and the Behavior Studio team for their helpful advice, discussions, and support. I learned many new things and deepened my knowledge of robotics. Thanks to JdeRobot for giving me the opportunity to learn more about the organization and for letting me contribute to this project.

References

- [1] Jderobot, BehaviorStudio (BehaviorSuite)