Coding period week 2

Week 2

This week I focused on two main things: getting Behavior Studio working inside my Docker container and finishing the DQN in the Breakout environment.

I first tried running it with Python 2, as indicated in the installation guide, but PyQt5 is not easy to set up with Python 2.7. I then tried porting the code to Python 3, a process I describe in full below. In the weekly discussion with the Behavior Studio team, it was decided that the best approach would be to migrate Jderobot-base to support Focal Fossa, which implies updating the internal libraries to ROS Noetic. So next week I will help port the Docker files to match the new updates in Jderobot-base.

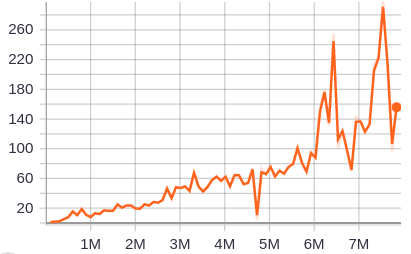

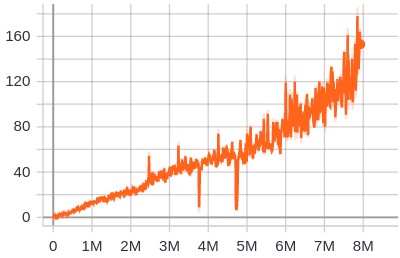

At the same time, I improved the DQN and trained it in the Breakout environment. With my setup, it took around three days for the agent to score more than 250 points in the game, after around 8 million frames or 2 million steps.

Running Behavior Studio with Python 3

Below are the steps I followed to run Behavior Studio using Python 3. First, all the Jderobot tools, assets, and ROS Melodic must be installed following the installation guide [2].

Installation

First, I created a virtual environment to keep control of the installed packages.

virtualenv -p python3 .bh_python3

source .bh_python3/bin/activate

Then I added some libraries which were missing and increased the version required for numpy and PyQt5 in the requirements.txt.

numpy==1.16.0

PyQt5==5.15.0

rospkg

pathlib

PyQt3D

All necessary libraries can be installed to our virtual environment by running:

pip install -r requirements.txt

To run the Behavior Studio GUI, we first need to create a configuration file next to the driver.py file. I created mine under the name default.yml.

    Behaviors:
        Robot:
            Sensors:
                Cameras:
                    Camera_0:
                        Name: 'camera_0'
                        Topic: '/F1ROS/cameraL/image_raw'
                Pose3D:
                    Pose3D_0:
                        Name: 'pose3d_0'
                        Topic: '/F1ROS/odom'
            Actuators:
                Motors:
                    Motors_0:
                        Name: 'motors_0'
                        Topic: '/F1ROS/cmd_vel'
                        MaxV: 3
                        MaxW: 0.3
            BrainPath: 'brains/f1/brain_f1_opencv.py'
            Type: 'f1'
        Simulation:
            World: /opt/jderobot/share/jderobot/gazebo/launch/f1_1_simplecircuit.launch
        Dataset:
            In: '/tmp/my_bag.bag'
            Out: ''
        Layout:
            Frame_0:
                Name: frame_0
                Geometry: [1, 1, 2, 2]
                Data: rgbimage
            Frame_1:
                Name: frame_1
                Geometry: [0, 1, 1, 1]
                Data: rgbimage
            Frame_2:
                Name: frame_2
                Geometry: [0, 2, 1, 1]
                Data: rgbimage
            Frame_3:
                Name: frame_3
                Geometry: [0, 3, 3, 1]
                Data: rgbimage

Running the GUI produces the following error, which I address in the next section.

python driver.py -c default.yml -g

Traceback (most recent call last):

File "driver.py", line 22, in <module>

from pilot import Pilot

File "/home/eisen/pull_requests/forked_behavior_studio/behavior_suite/pilot.py", line 22, in <module>

from utils.logger import logger

File "/home/eisen/pull_requests/forked_behavior_studio/behavior_suite/utils/logger.py", line 5, in <module>

from colors import Colors

ModuleNotFoundError: No module named 'colors'

Porting to Python3

Imports

The first error occurs because Python 3 uses absolute imports [1]. The following import:

from colors import Colors

should be

from utils.colors import Colors

because absolute imports are resolved from the directory where driver.py is run, which is the behavior_suite directory.

    ├── behavior_suite
    │   └── utils
    │       ├── colors.py
    │       ├── configuration.py
    │       ├── constants.py
    │       ├── controller.py
    │       ├── environment.py
    │       ├── __init__.py
    │       ├── logger.py
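The import behavior described above can be reproduced in isolation. The following is a minimal sketch, not the Behavior Studio code: it builds a throwaway package in a temporary directory (the package name `utils_demo` and the helpers `build_demo_package` and `try_import_logger` are hypothetical stand-ins for `utils`) and checks which import line in `logger.py` can be resolved under Python 3.

```python
import importlib
import sys
import tempfile
from pathlib import Path


def build_demo_package(root: Path, import_line: str) -> None:
    """Create root/utils_demo/{__init__,colors,logger}.py; logger.py holds import_line."""
    pkg = root / "utils_demo"  # hypothetical stand-in for the utils package
    pkg.mkdir(parents=True)
    (pkg / "__init__.py").write_text("")
    (pkg / "colors.py").write_text("class Colors:\n    ENDC = '\\033[0m'\n")
    (pkg / "logger.py").write_text(import_line + "\n")


def try_import_logger(import_line: str) -> bool:
    """Return True if utils_demo.logger imports cleanly with the given import line."""
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        build_demo_package(root, import_line)
        sys.path.insert(0, str(root))  # plays the role of the behavior_suite directory
        try:
            importlib.import_module("utils_demo.logger")
            return True
        except ImportError:  # ModuleNotFoundError is a subclass of ImportError
            return False
        finally:
            sys.path.remove(str(root))
            # Forget the demo modules so the next call starts from scratch.
            for name in [m for m in sys.modules if m.split(".")[0] == "utils_demo"]:
                del sys.modules[name]
            importlib.invalidate_caches()


implicit_ok = try_import_logger("from colors import Colors")
absolute_ok = try_import_logger("from utils_demo.colors import Colors")
relative_ok = try_import_logger("from .colors import Colors")
print(implicit_ok, absolute_ok, relative_ok)
```

An explicit relative import (`from .colors import Colors`) is another option that Python 3 accepts inside a package. I went with the absolute form in the port.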

Missing log directory

FileNotFoundError: [Errno 2] No such file or directory: '/behavior_studio/behavior_suite/logs/log.log'

For now I just created it manually.

mkdir logs

touch logs/log.log
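Instead of creating the directory by hand, the logger setup could create it on startup. This is only a sketch of that idea, assuming a helper named `ensure_log_file` (hypothetical, not part of Behavior Studio) that is called before the file handler is opened.

```python
import tempfile
from pathlib import Path


def ensure_log_file(log_path):
    """Create the parent directory and the log file if they do not exist yet."""
    path = Path(log_path)
    path.parent.mkdir(parents=True, exist_ok=True)  # same effect as: mkdir -p logs
    path.touch(exist_ok=True)                       # same effect as: touch logs/log.log
    return path


# Demonstration in a temporary directory, so nothing is written to the project.
with tempfile.TemporaryDirectory() as tmp:
    created = ensure_log_file(Path(tmp) / "logs" / "log.log")
    log_exists = created.is_file()

print(log_exists)
```

Both calls use `exist_ok=True`, so running the helper twice is harmless.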

Subprocess

In Python 3, the function subprocess.check_output returns bytes instead of a string, which is why we get this error.

Traceback (most recent call last):

File "driver.py", line 190, in <module>

main()

File "driver.py", line 158, in main

environment.launch_env(app_configuration.current_world)

File "/home/eisen/pull_requests/forked_behavior_studio/behavior_suite/utils/environment.py", line 39, in launch_env

close_gazebo()

File "/home/eisen/pull_requests/forked_behavior_studio/behavior_suite/utils/environment.py", line 56, in close_gazebo

ps_output = subprocess.check_output(["ps", "-Af"]).strip("\n")

TypeError: a bytes-like object is required, not 'str'

It can be fixed by decoding the output before calling strip. The original line:

ps_output = subprocess.check_output(["ps", "-Af"]).strip("\n")

becomes:

ps_output = subprocess.check_output(["ps", "-Af"]).decode('utf-8').strip("\n")
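The bytes versus str difference can be seen with a small self-contained sketch. It uses the current Python interpreter as a portable stand-in for `ps -Af` (that substitution and the printed word are my assumptions for the demo). It also shows `universal_newlines=True`, an alternative that makes `check_output` return text directly.

```python
import subprocess
import sys

# Portable stand-in for ["ps", "-Af"]: a child process that prints one line.
cmd = [sys.executable, "-c", "print('gzserver')"]

raw = subprocess.check_output(cmd)  # bytes in Python 3

# Stripping bytes with a str argument is what raised the TypeError above.
try:
    raw.strip("\n")
    strip_failed = False
except TypeError:
    strip_failed = True

# Fix used in the port: decode first, then strip.
decoded = raw.decode("utf-8").strip("\n")

# Alternative: ask subprocess to return text instead of bytes.
as_text = subprocess.check_output(cmd, universal_newlines=True).strip("\n")

print(type(raw).__name__, strip_failed, type(decoded).__name__, type(as_text).__name__)
```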

Unused package

I could not find the implementation of resources, and it was not being used in the script, so I just commented out the import.

cannot import name 'resources'

#from ui.gui.resources import resources

OpenCV2

File "/opt/ros/melodic/lib/python2.7/dist-packages/rospy/topics.py", line 750, in _invoke_callback

cb(msg)

File "/home/eisen/pull_requests/forked_behavior_studio/behavior_suite/robot/interfaces/camera.py", line 63, in __callback

image = imageMsg2Image(img, self.bridge)

File "/home/eisen/pull_requests/forked_behavior_studio/behavior_suite/robot/interfaces/camera.py", line 26, in imageMsg2Image

cv_image = bridge.imgmsg_to_cv2(img, "rgb8")

File "/opt/ros/melodic/lib/python2.7/dist-packages/cv_bridge/core.py", line 163, in imgmsg_to_cv2

dtype, n_channels = self.encoding_to_dtype_with_channels(img_msg.encoding)

File "/opt/ros/melodic/lib/python2.7/dist-packages/cv_bridge/core.py", line 99, in encoding_to_dtype_with_channels

return self.cvtype2_to_dtype_with_channels(self.encoding_to_cvtype2(encoding))

File "/opt/ros/melodic/lib/python2.7/dist-packages/cv_bridge/core.py", line 91, in encoding_to_cvtype2

from cv_bridge.boost.cv_bridge_boost import getCvType

The OpenCV bridge (cv_bridge) shipped with Melodic was built for Python 2.7. Thankfully, it is possible to extend the environment with a cv_bridge built for Python 3. [2]

sudo apt-get install python-catkin-tools python3-dev python3-catkin-pkg-modules python3-numpy python3-yaml ros-melodic-cv-bridge

mkdir catkin_workspace

cd catkin_workspace

catkin init

# Point catkin at Python 3 (adjust the paths to match your Python version)

catkin config -DPYTHON_EXECUTABLE=/usr/bin/python3 -DPYTHON_INCLUDE_DIR=/usr/include/python3.6m -DPYTHON_LIBRARY=/usr/lib/x86_64-linux-gnu/libpython3.6m.so

catkin config --install

git clone https://github.com/ros-perception/vision_opencv.git src/vision_opencv

apt-cache show ros-melodic-cv-bridge | grep Version

Version: 1.13.0-0bionic.20200320.133849 # change to your version accordingly

cd src/vision_opencv/

git checkout 1.13.0

cd ../../

catkin build cv_bridge

source install/setup.bash --extend # or use setup.zsh
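After sourcing the workspace, a quick way to confirm that the Python 3 build is picked up is to try the same import that appears at the end of the traceback. This is a small sketch. The helper name `cv_bridge_status` is hypothetical, and on a machine without ROS it simply reports that the module is not importable.

```python
import importlib
import sys


def cv_bridge_status():
    """Return (ok, message) describing whether the compiled cv_bridge module imports."""
    interpreter = "Python %d.%d" % sys.version_info[:2]
    try:
        # Same module that camera.py ends up importing through cv_bridge.
        importlib.import_module("cv_bridge.boost.cv_bridge_boost")
    except ImportError as exc:
        return False, "cv_bridge not usable from %s: %s" % (interpreter, exc)
    return True, "cv_bridge imports correctly from %s" % interpreter


ok, message = cv_bridge_status()
print(message)
```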

Integer division

In Python 2, / between two integers returns an integer, but in Python 3 / always returns a float, so in the code that uses the OpenCV library we need to change / to // wherever an integer is required.
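A short sketch of the difference, using a plain list in place of an image row so it runs without OpenCV (the image size and variable names are made up for illustration). Indexing with a float raises a TypeError in the same way that pixel coordinates are expected to be integers.

```python
height, width = 480, 640  # hypothetical image size

center_true_div = width / 2    # float in Python 3, even when the division is exact
center_floor_div = width // 2  # int, like the Python 2 result for two integers

row = list(range(width))  # stand-in for one row of pixels

# A float cannot be used as an index.
try:
    row[center_true_div]
    float_index_failed = False
except TypeError:
    float_index_failed = True

pixel = row[center_floor_div]  # works with the integer result

print(type(center_true_div).__name__, type(center_floor_div).__name__, float_index_failed)
```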

Branch

The updates can be found in this branch

Breakout results

New results were achieved by increasing the training steps, updating the vanilla DQN, and matching the hyperparameters with Mnih et al., 2013 and Mnih et al., 2015. Besides that, I tested new implementations in TensorFlow Agents and the newly open-sourced Acme library from DeepMind.

The code still needs documentation and a reorganization of the functions and parameter handling to make it easy to follow. The next step is to train using the Formula 1 environment from Jderobot in Gazebo.

Tensorboard graphs

The following graphs show the progress the model made over 8 million steps.

Week Highlights

- Played around with the Behavior Studio GUI and TUI, and made them work with Python 3 by refactoring the codebase.

- Met with the mentors and the Behavior Studio team to discuss the next steps to improve the Behavior Studio environment.

- Tried a new DQN implementation and trained for more steps, which gave good results.

- I have been working on these issues and pull requests:

  - Issue #24 in Behavior Studio: No module named PyQt5.QtWidgets (python2.7)

  - Issue #19 in Behavior Studio: DRL Cartpole example

  - Issue #1396 in Jderobot Base: Upgrade to Ubuntu 20.04 Focal Fossa

  - Pull request in Keras docs: PR 83

  - Pull request in TensorFlow Agents docs: PR 391

References

- [1] PEP Python documentation

- [2] Jderobot, BehaviorStudio (BehaviorSuite)

- [3] Jderobot, [Base](https://github.com/JdeRobot/base)

- [4] Mnih et al., 2013, Playing Atari with Deep Reinforcement Learning

- [5] Mnih et al., 2015, Human-level control through deep reinforcement learning