Week 9

With the exercise up and running both independently and through the Docker container, it was time to implement the GPU inference feature, which we previously couldn’t implement because of an issue with the onnxruntime-gpu library. The issue concerned switching between onnxruntime and onnxruntime-gpu: both packages share the same import name, and if both were installed, onnxruntime-gpu was imported by default. We needed both dependencies in our application’s Docker image to serve both CUDA and non-CUDA users. Because of this limitation, the GPU version would be imported every time the application ran, and non-CUDA users would get an error, since onnxruntime-gpu required the CUDA toolkit and CUDA runtime library to be installed. Basically, I wanted the option to choose between the onnxruntime and onnxruntime-gpu libraries. I had raised an issue about this earlier (#7930).
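To make the name clash concrete, here is a minimal sketch that reports which of the two distributions are installed in the current environment. It relies only on the standard library's `importlib.metadata`; the helper name `installed_onnxruntime_packages` is made up for illustration and is not part of onnxruntime.

```python
from importlib import metadata

# pip distribution names; both install the same importable module, `onnxruntime`.
CANDIDATES = ("onnxruntime", "onnxruntime-gpu")


def installed_onnxruntime_packages(candidates=CANDIDATES):
    """Return {distribution name: version} for every candidate that is installed."""
    found = {}
    for name in candidates:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            # This distribution is not installed; skip it.
            pass
    return found


print(installed_onnxruntime_packages())
```

If both names show up in the result, `import onnxruntime` cannot tell you by itself which package's files are actually being loaded, which is the ambiguity described above.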

Last week, one of the developers there updated me on the issue, stating that the onnxruntime-gpu library can now perform both CPU and GPU inference by itself, and that no CUDA-specific files are required for CPU-only inference. In other words, onnxruntime-gpu alone should be enough for both CPU and GPU inference. However, it turns out that importing the onnxruntime-gpu library still requires the CUDA toolkit and CUDA runtime library as prerequisites, even for CPU inference, which doesn’t make sense. Merely importing onnxruntime-gpu (`import onnxruntime`) without the required CUDA files raised an error, whereas logically the error should occur only when explicitly running inference on the GPU without those files. For now, I have raised a follow-up issue, hoping that the developers finally fix this.
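The behaviour I was hoping for, where the CPU/GPU choice is made when a session is created rather than at import time, can be sketched as follows. This is only an illustration, assuming a reasonably recent onnxruntime where `InferenceSession` accepts a `providers` list; `choose_providers` and `create_session` are hypothetical helpers of my own, and the sketch does not work around the import-time error itself.

```python
def choose_providers(available, prefer_gpu):
    """Pick execution providers in priority order, always keeping CPU as a fallback."""
    providers = []
    if prefer_gpu and "CUDAExecutionProvider" in available:
        providers.append("CUDAExecutionProvider")
    providers.append("CPUExecutionProvider")
    return providers


def create_session(model_path, prefer_gpu=False):
    """Create an inference session on GPU if requested and available, else on CPU."""
    # Imported lazily so that loading this module never touches onnxruntime.
    import onnxruntime as ort

    providers = choose_providers(ort.get_available_providers(), prefer_gpu)
    return ort.InferenceSession(model_path, providers=providers)
```

With this shape, a non-CUDA user would call `create_session(path)` and a CUDA user `create_session(path, prefer_gpu=True)`, where `path` is a placeholder for the model file.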

Since the Docker image would need the CUDA files installed for CUDA users anyway, I decided to install the required dependencies (CUDA toolkit and CUDA runtime library) on my local machine and try importing and using the onnxruntime-gpu library. As I said before, my laptop doesn’t have a CUDA-capable GPU, but I would still have to install the required CUDA files even to run inference on the CPU with the onnxruntime-gpu library.

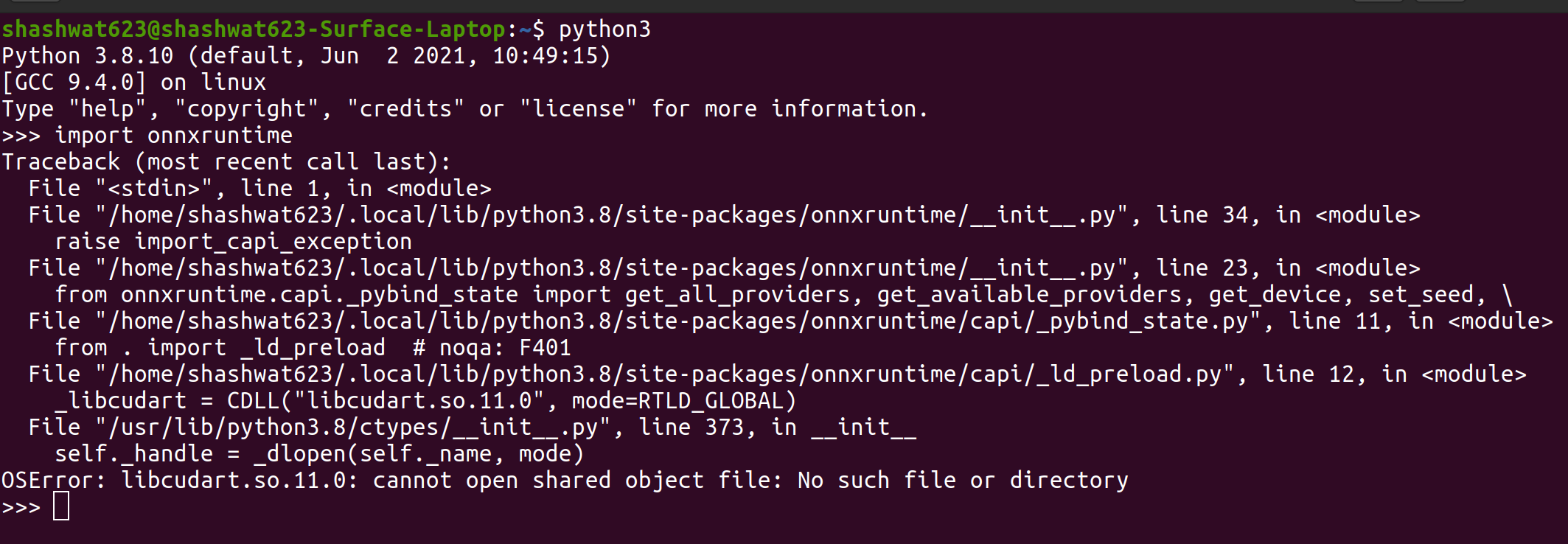

- Installed CUDA 11 toolkit - Installing this solved the following error occurring while importing the onnxruntime-gpu library.

-

Edited

.bashrcfile to add path for the binary and library files of the CUDA toolkit. -

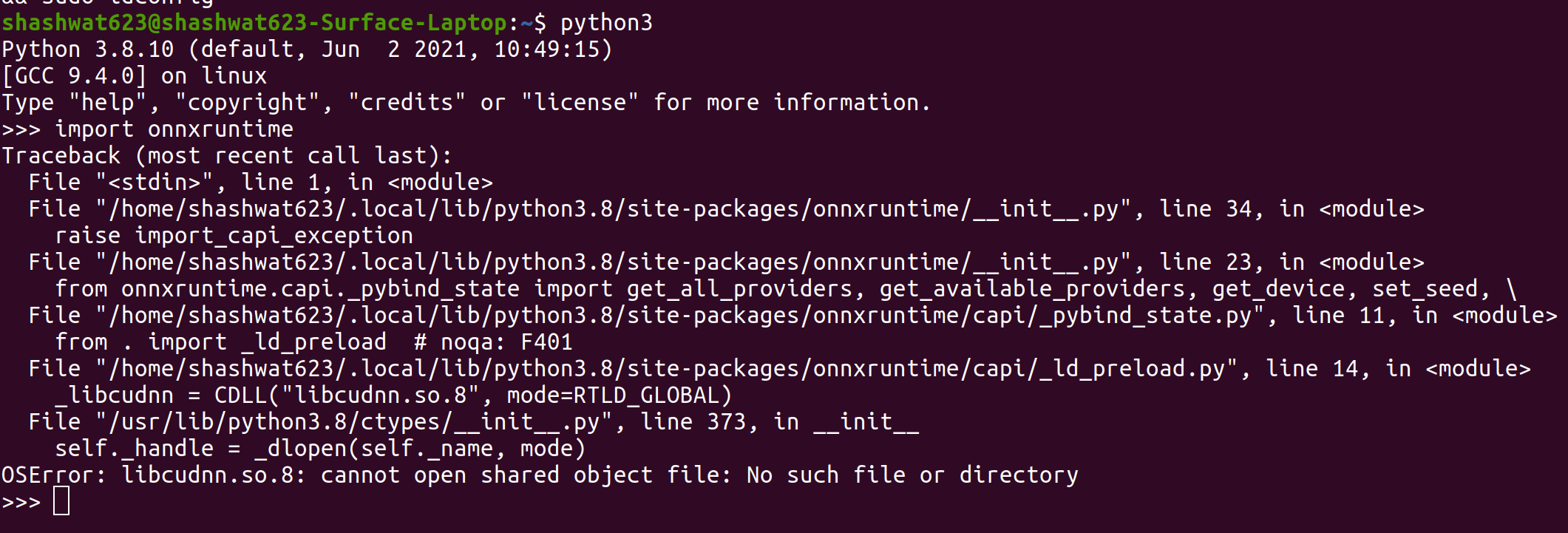

Installed the supported CUDNN runtime library in accordance to the installed CUDA toolkit version - Installing this solved the following error occurring while importing the onnxruntime-gpu library after the Cuda toolkit was installed.

After this, I was able to import and use the onnxruntime-gpu library successfully.
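A quick way to sanity-check a setup like this is to ask Python whether the CUDA pieces are discoverable from the current shell. The sketch below uses only the standard library; `cuda_environment_report` is a hypothetical helper, and a `None` value is just a hint that something may be missing from the search paths, not proof that it is absent.

```python
import ctypes.util
import os
import shutil


def cuda_environment_report():
    """Collect hints about whether the CUDA toolkit and cuDNN are discoverable."""
    return {
        # Full path to the CUDA compiler if the toolkit's bin directory is on PATH.
        "nvcc_on_path": shutil.which("nvcc"),
        # Library names as resolved by the system's library lookup, or None.
        "cudart_library": ctypes.util.find_library("cudart"),
        "cudnn_library": ctypes.util.find_library("cudnn"),
        # The library search path as seen by this process.
        "ld_library_path": os.environ.get("LD_LIBRARY_PATH", ""),
    }


for key, value in cuda_environment_report().items():
    print(f"{key}: {value}")
```

Running it in a fresh terminal after editing `.bashrc` shows whether the new paths were actually picked up.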