Week 10

Although I was able to import the onnxruntime-gpu package successfully, creating an inference session object raised the following error:

Error: CUDA driver version is insufficient for CUDA runtime version

Note: when creating the inference session, I had to set the execution provider to “CPU”, as my machine currently doesn’t have an Nvidia GPU.

import torch

import onnxruntime as rt

ExecutionProviders_list = ['CUDAExecutionProvider', 'CPUExecutionProvider'] if torch.cuda.is_available() else ['CPUExecutionProvider']

sess = rt.InferenceSession("Demo_Model.onnx", providers=ExecutionProviders_list)
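The snippet above uses torch only to detect CUDA. As a minimal sketch of an alternative, one could ask ONNX Runtime itself which providers it was built with, via `onnxruntime.get_available_providers()`, and order them by preference. The helper name `pick_providers` and the preference order below are my own assumptions for illustration, not something taken from the onnxruntime docs.

```python
from typing import Iterable, List

# Preference order is an assumption for this sketch: GPU first, CPU as fallback.
PREFERRED = ("CUDAExecutionProvider", "CPUExecutionProvider")


def pick_providers(available: Iterable[str]) -> List[str]:
    """Return the preferred providers that are actually available, in order."""
    available = set(available)
    chosen = [p for p in PREFERRED if p in available]
    # Fall back to the CPU provider explicitly so the session
    # never receives an empty provider list.
    return chosen or ["CPUExecutionProvider"]
```

With onnxruntime installed, this could be used as `rt.InferenceSession("Demo_Model.onnx", providers=pick_providers(rt.get_available_providers()))`. As far as I understand, the available-providers list reflects what the installed build supports, so a driver mismatch like the one above can still surface when the session is created.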

The error above occurred because the Nvidia display driver that comes with the CUDA 11.0.3 toolkit was apparently too old for the CUDA runtime library, which also comes with the toolkit. The CUDA docs mention something similar, which can be found HERE:

CUDA 11.0 was released with an earlier driver version, but by upgrading to 450.80.02 driver as indicated, minor version compatibility is possible across the CUDA 11.x family of toolkits.

Since the CUDA toolkit version I had downloaded was 11.0.3 (which comes with the same driver as 11.0), I had to upgrade the driver to version 450.80.02. After this, I was able to run inference on the CPU successfully. By changing the execution provider to “CUDAExecutionProvider” in the code, one could run inference on the GPU instead.
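To make the driver requirement concrete, here is a small sketch that compares an installed driver version against the 450.80.02 minimum quoted above. The function names are hypothetical, and the check assumes a Linux machine where `nvidia-smi` is on the PATH; on a machine without an Nvidia driver, `installed_driver()` simply returns `None`.

```python
import shutil
import subprocess
from typing import Optional, Tuple

MIN_DRIVER = "450.80.02"  # minimum driver quoted from the CUDA docs above


def parse_version(text: str) -> Tuple[int, ...]:
    """Turn '450.80.02' into (450, 80, 2) so versions compare numerically."""
    return tuple(int(part) for part in text.strip().split("."))


def driver_is_sufficient(installed: str, minimum: str = MIN_DRIVER) -> bool:
    """True if the installed driver is at least the minimum version."""
    return parse_version(installed) >= parse_version(minimum)


def installed_driver() -> Optional[str]:
    """Ask nvidia-smi for the driver version; None if it cannot be queried."""
    if shutil.which("nvidia-smi") is None:
        return None
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=driver_version", "--format=csv,noheader"],
        capture_output=True,
        text=True,
    )
    if result.returncode != 0 or not result.stdout.strip():
        return None
    # One line per GPU; the driver version is the same for all of them.
    return result.stdout.splitlines()[0].strip()
```

A check like `driver_is_sufficient(installed_driver() or "0")` before creating the session would turn the cryptic runtime error into an explicit version message.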

Since all these dependencies would have to go into a Docker image, it was essential to find a CUDA toolkit version that ships with a suitable driver, removing the need to install the driver separately. Luckily, CUDA toolkit 11.4 did the job for me:

- Uninstalled CUDA toolkit 11.0.3

- Uninstalled the separately installed driver

- Installed CUDA toolkit 11.4

Now that the onnxruntime-gpu package had been shown to support inference on both GPU and CPU-only machines, it was time to find a Docker image that contains all the required dependencies (CUDA >= 11.1 and cuDNN).

I found one in the official onnxruntime repo here, but the latest version there used CUDA 10.2 and onnxruntime-gpu 1.6. My requirement was CUDA >= 11.1 and onnxruntime-gpu >= 1.8.1, so I raised a question there about this:

Docker container for onnxruntime-gpu #8704

Searching for another source for the Docker image, I came across the official Nvidia CUDA container images here.

The nvidia/cuda:11.4.1-cudnn8-runtime-ubuntu20.04 image seemed to be the perfect fit, as it contains both CUDA and cuDNN in the versions we needed.

Before going ahead with this image, I also needed to install the Nvidia Container Toolkit, as clearly stated in the documentation here: “The NVIDIA Container Toolkit for Docker is required to run CUDA images.”

The NVIDIA Container Toolkit allows users to build and run GPU accelerated Docker containers. The toolkit includes a container runtime library and utilities to automatically configure containers to leverage NVIDIA GPUs. In essence, it gives the running CUDA container access to the Nvidia drivers on the host machine and, through them, to the GPU.

Of course, as mentioned here, having supported Nvidia drivers pre-installed on the host machine is a prerequisite.

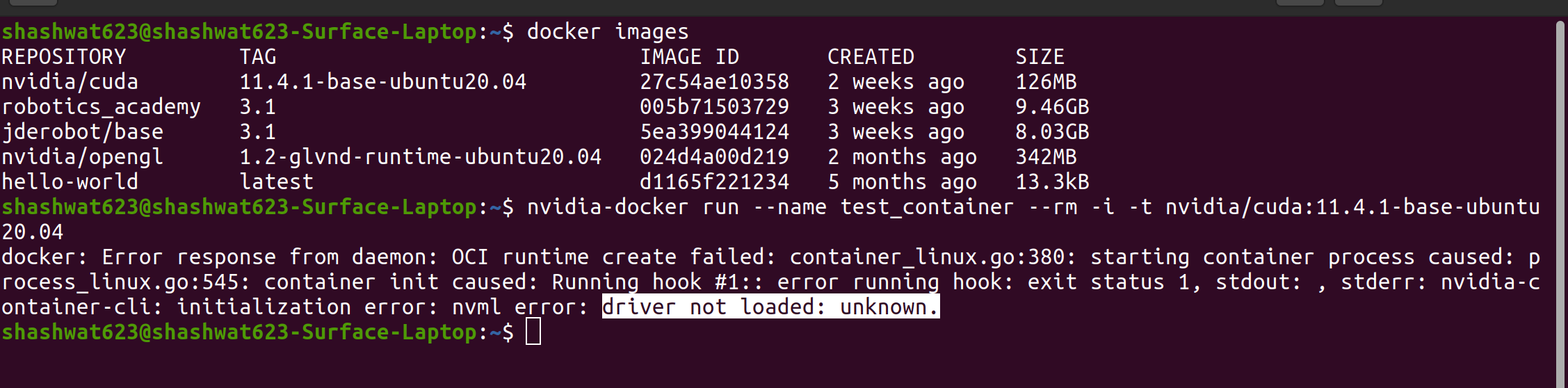
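For reference, here is a rough sketch of how the CUDA image could be started with GPU access once the toolkit and host drivers are in place. It only builds the `docker run` argument list; `--gpus all` is the Docker flag that requests GPU access, and `nvidia-smi` is used as a quick smoke test inside the container. The helper name `docker_run_command` is hypothetical, and I have not been able to run this on a GPU machine.

```python
from typing import List, Sequence

# Image tag taken from the post; everything else here is illustrative.
IMAGE = "nvidia/cuda:11.4.1-cudnn8-runtime-ubuntu20.04"


def docker_run_command(
    image: str = IMAGE,
    use_gpu: bool = True,
    command: Sequence[str] = ("nvidia-smi",),
) -> List[str]:
    """Build the argument list for a throwaway container run."""
    cmd = ["docker", "run", "--rm"]
    if use_gpu:
        # Requires the NVIDIA Container Toolkit and host drivers.
        cmd += ["--gpus", "all"]
    cmd.append(image)
    cmd.extend(command)
    return cmd
```

The resulting list can be passed to `subprocess.run(docker_run_command(), check=True)`; on a machine without an Nvidia GPU, the GPU variant is expected to fail at container start.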

Since I was using a laptop with no Nvidia card, the Nvidia Container Toolkit couldn’t bridge the installed Nvidia drivers from the host machine to the running container.

It seems we will have to use separate images for CPU and GPU users after all. I plan to work more on this once I have access to a machine with an Nvidia GPU, as adding GPU inference features from a non-GPU machine involves a lot of guesswork and there isn’t a workaround for everything :( I’ll get back to this later.

Lastly, I decided to go ahead with cleaning up the code base of the exercise. This was necessary because I had built the exercise on top of pre-existing exercises, adding and changing the code, template, and features wherever necessary. As a result, the frontend and backend of the exercise still contained code belonging to other Robotics exercises, which was not needed in the human-detection exercise. I decided to spend the last few days going through the code base and removing this unnecessary code.