Week 1: May 29 ~ June 04

Preliminaries

The start of the official coding period for GSoC is here! In Monday’s meeting, we revisited the logistics and strategized the initial steps for implementing the data collection tool. Our approach is grounded in simplicity; we begin with basic scenarios and gradually integrate more complexity. Here are a few elementary scenarios we considered:

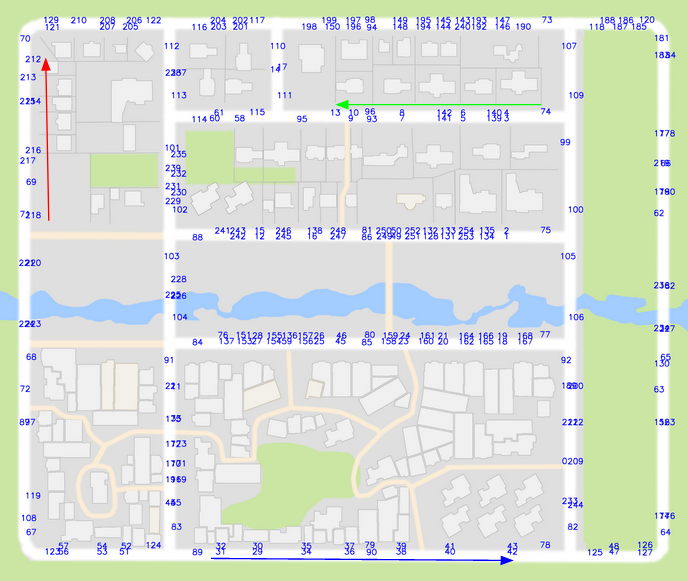

- Straight routes with no intersections or turns, as marked by the red, green, and blue arrows in the image above, where other vehicles serve as dynamic obstacles.

- Straight routes with no intersections or turns, but with a traffic light and a pedestrian crossing.

- Straight routes with no intersections or turns, but with a parked car partially obstructing the lane, requiring the ego vehicle to steer around the obstacle without changing lanes.

While we want the routes to be simple at the beginning, it is crucial to place dynamic obstacles near the ego vehicle so that it learns obstacle avoidance. In addition, we decided that implementing the data collection tool should be an iterative process, allowing us to test the data samples at each stage with incrementally more sophisticated models.

Objectives

- Get started with the data collection tool and collect some sample data

- Delve into the simulator to understand how to configure sensors, extract data, and spawn pedestrians crossing roads

- Check out Deep Learning Studio[1] and review existing models

- Train a small model on the simplest scenarios if time permits

Execution

Data Collection Tool

The first step of the execution was implementing a data collection tool. This tool randomly samples straight routes with no intersections from a list of start and target locations provided in a .txt file. For each episode, the program reloads the traffic, spawns the ego vehicle at the start location, and uses CARLA’s BehaviorAgent to drive it to the target location. For each frame, the RGB camera image, semantic segmentation, current speed measurement, and the controls (throttle, steer, brake) are recorded and saved in .pkl format.
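The route-sampling step can be sketched without the simulator. This is only a minimal illustration: the file layout (six numbers per line, start then target) and the helper names `parse_routes` and `sample_routes` are assumptions made for the example, not the actual format or API of the tool.

```python
import random
from typing import List, Optional, Tuple

Location = Tuple[float, float, float]
Route = Tuple[Location, Location]


def parse_routes(text: str) -> List[Route]:
    """Parse routes from text with one 'sx sy sz tx ty tz' entry per line.

    The six-number layout is a hypothetical format chosen for this sketch.
    """
    routes: List[Route] = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        values = [float(v) for v in line.replace(",", " ").split()]
        if len(values) != 6:
            raise ValueError(f"expected 6 numbers, got {len(values)}: {line!r}")
        routes.append((tuple(values[:3]), tuple(values[3:])))
    return routes


def sample_routes(routes: List[Route], num_episodes: int,
                  seed: Optional[int] = None) -> List[Route]:
    """Draw one route per episode, uniformly at random with replacement."""
    rng = random.Random(seed)
    return [rng.choice(routes) for _ in range(num_episodes)]


# Made-up coordinates standing in for the contents of the .txt file.
EXAMPLE = """\
# start (x y z)      target (x y z)
10.0 20.0 0.5   110.0 20.0 0.5
-5.0 42.0 0.5   -5.0 142.0 0.5
"""

routes = parse_routes(EXAMPLE)
episodes = sample_routes(routes, num_episodes=5, seed=0)
for start, target in episodes:
    # In the real tool, this is where the traffic is reloaded, the ego vehicle
    # is spawned at `start`, and the agent drives it to `target`.
    print(start, "->", target)
```

Sampling with replacement keeps the sketch simple; whether the actual tool avoids repeating routes is not something this post specifies.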

As sample data, I collected 100 episodes, totalling roughly 32,000 frames.
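The per-frame record described above could be stored roughly as follows. This is a hedged sketch: the dictionary keys, the one-file-per-episode layout, and the helper names are assumptions for illustration, and the image buffers are tiny placeholders rather than real camera output.

```python
import math
import pickle
import tempfile
from pathlib import Path


def speed_from_velocity(vx, vy, vz):
    """Magnitude of a velocity vector (same unit as its components)."""
    return math.sqrt(vx * vx + vy * vy + vz * vz)


def make_frame(rgb, segmentation, velocity, throttle, steer, brake):
    """Bundle one frame's observations and controls (hypothetical layout)."""
    return {
        "rgb": rgb,
        "segmentation": segmentation,
        "speed": speed_from_velocity(*velocity),
        "controls": (throttle, steer, brake),
    }


def save_episode(frames, path):
    """Write all frames of one episode to a single .pkl file."""
    with open(path, "wb") as f:
        pickle.dump(frames, f, protocol=pickle.HIGHEST_PROTOCOL)


def load_episode(path):
    """Read an episode back as a list of frame dictionaries."""
    with open(path, "rb") as f:
        return pickle.load(f)


# Placeholder 2x2 images: 3 bytes per pixel for RGB, 1 for the class label.
rgb = bytes(2 * 2 * 3)
seg = bytes(2 * 2)
frames = [make_frame(rgb, seg, (3.0, 4.0, 0.0), 0.5, 0.0, 0.0)]

with tempfile.TemporaryDirectory() as tmp:
    path = Path(tmp) / "episode_000.pkl"
    save_episode(frames, path)
    restored = load_episode(path)

print(restored[0]["speed"], restored[0]["controls"])
```

Keeping each record as a plain dictionary means the .pkl files can be loaded later without importing any custom classes.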

Model

In parallel, I explored Deep Learning Studio[1] and reviewed several existing models. Based on this, I modified DeepestLSTMTinyPilotNet to accept a 4-channel image input (RGB + segmentation) and to concatenate the flattened features with the current speed measurement. The model outputs a vector of (throttle, steer, brake).
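To make the fusion step concrete, here is a minimal PyTorch sketch of stacking RGB and segmentation into a 4-channel input and appending the speed to the flattened features. It is not the modified DeepestLSTMTinyPilotNet: the class name `SpeedFusionNet`, the 64x64 input size, and the two-layer encoder are assumptions for illustration, and the ConvLSTM and dropout layers are left out.

```python
import torch
import torch.nn as nn


class SpeedFusionNet(nn.Module):
    """Toy network: conv encoder -> flatten -> concat speed -> linear head."""

    def __init__(self, image_size=(64, 64), in_channels=4, num_controls=3):
        super().__init__()
        self.encoder = nn.Sequential(
            nn.Conv2d(in_channels, 8, kernel_size=3, stride=2),
            nn.ReLU(),
            nn.Conv2d(8, 8, kernel_size=3, stride=2),
            nn.ReLU(),
            nn.Flatten(),
        )
        # Run a dummy input once to find the flattened feature size.
        with torch.no_grad():
            dummy = torch.zeros(1, in_channels, *image_size)
            n_features = self.encoder(dummy).shape[1]
        # +1 input for the scalar speed measurement.
        self.head = nn.Linear(n_features + 1, num_controls)

    def forward(self, image, speed):
        features = self.encoder(image)                # (batch, n_features)
        fused = torch.cat([features, speed], dim=1)   # (batch, n_features + 1)
        return self.head(fused)                       # (throttle, steer, brake)


rgb = torch.rand(2, 3, 64, 64)           # batch of RGB frames
seg = torch.rand(2, 1, 64, 64)           # segmentation as a single channel
image = torch.cat([rgb, seg], dim=1)     # 4-channel input
speed = torch.rand(2, 1)                 # one speed value per sample

model = SpeedFusionNet()
out = model(image, speed)
print(out.shape)
```

The speed is passed as a `(batch, 1)` tensor so that it can be concatenated along the feature dimension without reshaping.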

Below is the architecture of the modified DeepestLSTMTinyPilotNet:

    DeepestLSTMTinyPilotNet(
      (cn_1): Conv2d(4, 8, kernel_size=(3, 3), stride=(2, 2))
      (relu_1): ReLU()
      (cn_2): Conv2d(8, 8, kernel_size=(3, 3), stride=(2, 2))
      (relu_2): ReLU()
      (cn_3): Conv2d(8, 8, kernel_size=(3, 3), stride=(2, 2))
      (relu_3): ReLU()
      (dropout_1): Dropout(p=0.2, inplace=False)
      (clstm_n): ConvLSTM(
        (cell_list): ModuleList(
          (0): ConvLSTMCell(
            (conv): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
          )
          (1): ConvLSTMCell(
            (conv): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
          )
          (2): ConvLSTMCell(
            (conv): Conv2d(16, 32, kernel_size=(5, 5), stride=(1, 1), padding=(2, 2))
          )
        )
      )
      (fc_1): Linear(in_features=6721, out_features=50, bias=True)
      (relu_fc_1): ReLU()
      (fc_2): Linear(in_features=50, out_features=10, bias=True)
      (relu_fc_2): ReLU()
      (fc_3): Linear(in_features=10, out_features=3, bias=True)
    )
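The `in_features=6721` of `fc_1` can be read as 8 channels times 840 spatial positions, plus 1 for the speed. The sketch below shows the arithmetic, assuming that one 8-channel feature map is flattened before `fc_1` and that the ConvLSTM keeps the spatial size (kernel 5, padding 2, stride 1). The 128x256 input used at the end is a made-up example; the actual input resolution is not stated in this post.

```python
def conv_out(size, kernel=3, stride=2, padding=0):
    """Output length of a convolution along one spatial dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def encoder_out(height, width, num_layers=3):
    """Spatial size after the three 3x3, stride-2, unpadded convolutions."""
    for _ in range(num_layers):
        height, width = conv_out(height), conv_out(width)
    return height, width


def fc1_in_features(height, width, channels=8, extra_scalars=1):
    """Flattened feature count plus the appended speed scalar."""
    h, w = encoder_out(height, width)
    return channels * h * w + extra_scalars


# The ConvLSTM convolution (kernel 5, padding 2, stride 1) preserves the size.
assert conv_out(31, kernel=5, stride=1, padding=2) == 31

# Working backwards from the printed model: 6721 = 8 * 840 + 1.
spatial_positions = (6721 - 1) // 8
print(spatial_positions)

# Hypothetical 128x256 input, only to show the forward calculation.
print(encoder_out(128, 256), fc1_in_features(128, 256))
```

Several input resolutions give 840 spatial positions after the encoder, so the printout alone does not pin down the image size.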

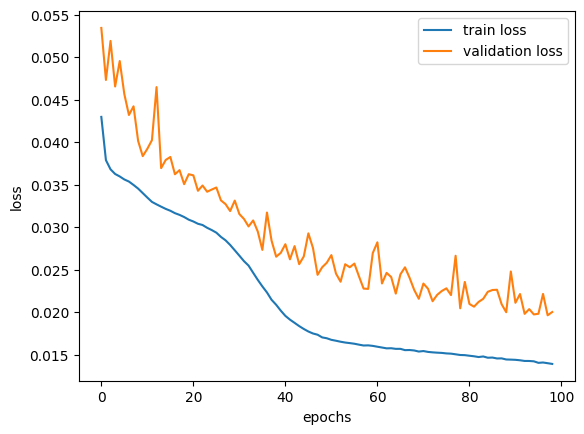

The figure below shows the training and validation loss over 100 epochs.

Results

I evaluated the performance of the model by deploying it in the CARLA simulator and observing its behavior. As shown in the video below, there are several problems: (1) the vehicle brakes even when there is no obstacle in front of it, and (2) the vehicle steers to the left instead of following a straight trajectory.

References

[1] Deep Learning Studio: https://github.com/JdeRobot/DeepLearningStudio
