Week 2: June 5 ~ June 11

Preliminaries

During Monday’s meeting, we discussed last week’s progress on the data collection tool and our initial analysis of the model trained on the preliminary dataset. Nikhil pointed out an issue with my approach: I was appending an array of semantic labels as a 4th channel to the RGB camera images. He suggested converting these labels into RGB values instead and treating them the same way as the camera image, which should make feature learning easier.
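A minimal sketch of the label-to-RGB conversion and the 6-channel input later adopted in the Execution section. It assumes NumPy, labels stored as an (H, W) integer array, and a made-up three-class palette; the colors are illustrative, not CARLA's exact table (CARLA also ships a built-in `carla.ColorConverter.CityScapesPalette` converter for segmentation images). `PALETTE`, `labels_to_rgb`, and `make_input` are hypothetical names.

```python
import numpy as np

# Hypothetical 3-class palette: row i is the RGB color for label i.
# Colors are illustrative only, not CARLA's exact table.
PALETTE = np.array(
    [
        [0, 0, 0],        # 0: unlabeled
        [128, 64, 128],   # 1: road
        [157, 234, 50],   # 2: road line
    ],
    dtype=np.uint8,
)


def labels_to_rgb(labels: np.ndarray) -> np.ndarray:
    """Map an (H, W) integer label map to an (H, W, 3) uint8 color image."""
    # Fancy indexing looks up every pixel's color in one vectorized step.
    return PALETTE[labels]


def make_input(rgb: np.ndarray, labels: np.ndarray) -> np.ndarray:
    """Stack the camera image and colorized segmentation into (H, W, 6) floats in [0, 1]."""
    seg_rgb = labels_to_rgb(labels)
    stacked = np.concatenate([rgb, seg_rgb], axis=-1)  # channels-last
    return stacked.astype(np.float32) / 255.0


labels = np.array([[0, 1], [2, 1]])
camera = np.zeros((2, 2, 3), dtype=np.uint8)
x = make_input(camera, labels)
print(x.shape)  # (2, 2, 6)
```

The output is channels-last; a PyTorch model would typically expect `np.transpose(x, (2, 0, 1))` first. Normalizing both halves the same way is what lets the network treat the segmentation like a second camera image.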

Data augmentation was another important topic, as it plays a key role in imitation learning. Last week’s model struggled to self-correct: once it deviated from the lane center, it quickly left the lane, mainly because the training data never showed the expert steering back after a drift. To address this, we considered augmenting the dataset with scenarios that simulate recovery from disturbances. One option is to apply an affine transformation that slightly shifts the images left or right and to adjust the steering values accordingly. This would expose the agent to images in which the vehicle appears offset to one side, paired with a steering angle that points back toward the lane center, thus teaching it to self-correct when it strays from the center of the lane.

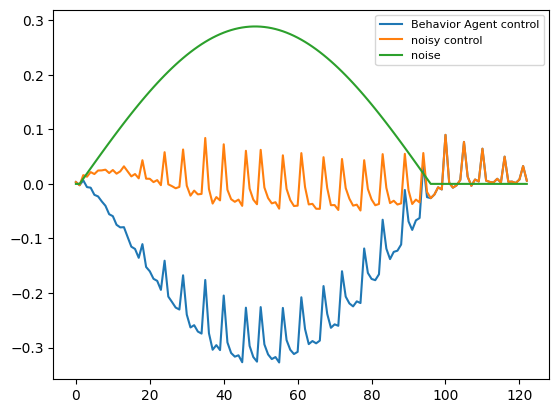
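The shift-and-correct augmentation can be sketched as follows. This was not implemented this week; it is a minimal NumPy sketch that assumes a pure horizontal translation with zero fill (ignoring perspective effects), CARLA's steering convention (-1 = full left, +1 = full right), and a hypothetical linear gain `steer_per_px`. All names are illustrative.

```python
import numpy as np


def shift_image(img: np.ndarray, shift_px: int) -> np.ndarray:
    """Translate an (H, W, C) image horizontally by shift_px pixels (+ = right), zero-filling the gap."""
    out = np.zeros_like(img)
    if shift_px > 0:
        out[:, shift_px:] = img[:, :-shift_px]
    elif shift_px < 0:
        out[:, :shift_px] = img[:, -shift_px:]
    else:
        out[:] = img
    return out


def augment(img: np.ndarray, steer: float, shift_px: int, steer_per_px: float = 0.004):
    """Return a shifted image and a steering label corrected toward the lane center.

    Moving the scene right makes the car look as if it sits left of center,
    so the label is nudged right (+), and vice versa. steer_per_px is a
    hypothetical linear gain that would need tuning.
    """
    new_steer = float(np.clip(steer + steer_per_px * shift_px, -1.0, 1.0))
    return shift_image(img, shift_px), new_steer


frame = np.arange(8, dtype=np.uint8).reshape(2, 4, 1)
shifted, label = augment(frame, steer=0.0, shift_px=1)
print(shifted[0, :, 0].tolist(), label)  # [0, 0, 1, 2] 0.004
```

A linear pixel-to-steering mapping is the simplest choice; a more faithful version would derive the correction from the camera geometry and the desired recovery distance.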

An alternative strategy is to inject temporally correlated noise into the expert agent’s control commands [1]. For instance, noise that makes the car drift to the left can be added to the agent’s steering commands. This combined signal is used to control the vehicle, but only the expert agent’s own corrective response to the induced drift is recorded in the dataset.
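The key point, that the noisy signal drives the car while only the expert's command is stored, can be illustrated with a toy one-dimensional rollout. Everything here is hypothetical: `offset` stands in for the camera observation, the expert is a simple proportional controller, and the kinematics are a single Euler step rather than CARLA's vehicle model.

```python
def clip(value: float, low: float = -1.0, high: float = 1.0) -> float:
    return max(low, min(high, value))


def collect_with_noise(noise, gain=0.5, dt=0.1):
    """Toy 1-D rollout: noisy steering drives the car, but only the expert's command is recorded.

    offset is the lateral distance from the lane center (+ = right) and stands in
    for the camera observation; steering follows CARLA's sign (+ = right).
    """
    offset = 0.0
    dataset = []
    for n in noise:
        expert = clip(-gain * offset)      # proportional controller: steer back toward center
        applied = clip(expert + n)         # the noisy command actually sent to the vehicle
        dataset.append((offset, expert))   # record the observation and the clean expert label
        offset += applied * dt             # toy kinematics, not a vehicle model
    return dataset


samples = collect_with_noise([-0.5] * 10)  # a stretch of leftward noise
```

With leftward noise, the car ends up left of center while every recorded label steers right, which is exactly the recovery behavior the original dataset lacked. Recording `applied` instead would teach the model to reproduce the noise.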

Given time constraints, I could only experiment with noise injection this week. The good news is that it proved quite effective! For more details, refer to the Execution section.

Objectives

- Convert semantic segmentation from labels to RGB images

- Plot predictions vs. ground truth for model evaluation

- Perform data augmentation by applying custom transformations (did noise injection instead)

Execution

Bug Fixing

- One of the first steps was to convert the semantic segmentation labels into RGB values and combine the segmentation and camera images into a 6-channel model input.

- In some of the previously collected episodes, the agent slightly overshot the target location before executing a 180-degree turn. To counter this, I reduced the time step of CARLA’s synchronous mode and manually screened the episodes by monitoring the data collection process.

- The RGB images collected last week had an anomaly: they were not correctly updated at each time step, so the RGB images fell out of sync with the semantic segmentation samples. The cause was that the data collector stored the same reference repeatedly instead of saving a distinct copy of each camera frame.
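The reference-versus-copy bug is easy to reproduce in a few lines. `FrameBuffer` and `collect` are hypothetical stand-ins for the sensor callback and the data collector, assuming the callback overwrites one NumPy array in place; the fix is to store a `.copy()` of each frame.

```python
import numpy as np


class FrameBuffer:
    """Hypothetical sensor stand-in that overwrites a single array in place each tick."""

    def __init__(self, height: int, width: int):
        self.data = np.zeros((height, width, 3), dtype=np.uint8)

    def update(self, value: int) -> None:
        self.data[:] = value  # same memory every tick


def collect(buffer: FrameBuffer, steps: int, copy_frames: bool):
    frames = []
    for t in range(steps):
        buffer.update(t)
        # Buggy path appends the same array object every time; fixed path snapshots it.
        frames.append(buffer.data.copy() if copy_frames else buffer.data)
    return frames


buggy = collect(FrameBuffer(2, 2), steps=3, copy_frames=False)
fixed = collect(FrameBuffer(2, 2), steps=3, copy_frames=True)
print([int(f[0, 0, 0]) for f in buggy])  # [2, 2, 2]: every entry shows the last frame
print([int(f[0, 0, 0]) for f in fixed])  # [0, 1, 2]
```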

Noise Injection

The image below shows half a period of a sine wave injected into the expert agent’s steering commands. The agent corrects the drift caused by the noise by steering in the opposite direction.

As the video below shows, the agent occasionally drifts slightly to the left or right because of the injected noise, but it steers back to the center of the lane:

Demo
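The half-period sine noise described above can be generated with a small helper. The function name and its parameters (`amplitude`, `start`, `duration`) are hypothetical, not the values used in the experiment; the sign of `amplitude` picks the drift direction under CARLA's steering convention (negative = left). At each step the value would be added to the expert's steering command while the expert's own command is logged.

```python
import math


def half_sine_noise(num_steps: int, amplitude: float, start: int, duration: int):
    """Steering noise that is zero except for one half period of a sine wave.

    The bump begins at step `start`, lasts `duration` steps, and peaks at `amplitude`
    halfway through; consecutive values are correlated, unlike white noise.
    """
    noise = [0.0] * num_steps
    for t in range(start, min(start + duration, num_steps)):
        noise[t] = amplitude * math.sin(math.pi * (t - start) / duration)
    return noise


# Hypothetical values: a leftward (negative) push starting at step 5 for 10 steps.
noise = half_sine_noise(num_steps=20, amplitude=-0.3, start=5, duration=10)
```

A smooth, one-signed bump pushes the car steadily toward one side, which produces a clear drift to recover from; uncorrelated per-step noise would mostly average out.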

References

[1] Felipe Codevilla, Matthias Müller, Antonio López, Vladlen Koltun, & Alexey Dosovitskiy. (2018). End-to-end Driving via Conditional Imitation Learning.
