Week 7: July 10 ~ July 16

Preliminaries

This week we continued to improve the performance of our model. A major problem we faced was the agent occasionally stopping and getting stuck in a state, which we call the “halting” problem. Understanding and addressing this issue became a central focus, leading us to examine the circumstances under which it occurs and possible solutions. We also explored the model’s response to traffic lights and conducted cross-town testing to assess generalization.

Objectives

- Complete midterm evaluation

- Finish training and evaluating v7.0 and v7.1

- Confirm whether the model needs a larger amount of data

- Read literature on the halting problem

- Continue reading the Behavior Metrics codebase

- Integrate the existing evaluation metrics into Behavior Metrics

Execution

Current Models

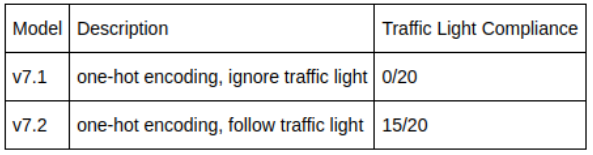

Below is a summary of our current models and their performance. As shown in the first table, using a one-hot encoding versus an embedding layer for the high-level command does not have a large impact on overall performance (we still need to confirm how the driving score is calculated). The second table compares models v7.1 and v7.2: the former ignores the traffic light status, while the latter receives the traffic light status as an additional input. The results show that simply passing the traffic light status (Not at traffic light, Red, Green, Yellow) as a one-hot encoding is enough for the model to follow traffic lights effectively in testing.

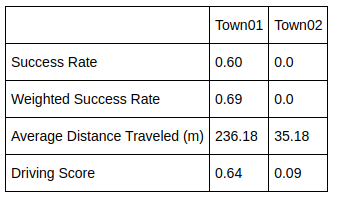
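The conditioning inputs discussed above can be sketched in a few lines of pure Python. This is a minimal illustration, not our model code: the command vocabulary (`FOLLOW_LANE`, `LEFT`, `RIGHT`, `STRAIGHT`) and all helper names are hypothetical; only the four traffic light states come from the text. It also hints at why the two command encodings may perform similarly: an embedding lookup returns exactly the row that a bias-free linear layer would produce from the corresponding one-hot vector, so the two mainly differ in parameterization.

```python
from __future__ import annotations

import math
import random

# Hypothetical high-level command vocabulary (an assumption; the post does not list it).
COMMANDS = ["FOLLOW_LANE", "LEFT", "RIGHT", "STRAIGHT"]

# The four traffic light states described in the post.
LIGHT_STATES = ["NOT_AT_LIGHT", "RED", "GREEN", "YELLOW"]


def one_hot(index: int, size: int) -> list[float]:
    """Return a vector of length `size` with 1.0 at `index` and 0.0 elsewhere."""
    if not 0 <= index < size:
        raise ValueError(f"index {index} out of range for size {size}")
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


def embedding_lookup(index: int, table: list[list[float]]) -> list[float]:
    """An embedding layer's forward pass: select row `index` of its weight table."""
    return list(table[index])


def one_hot_times_table(vec: list[float], table: list[list[float]]) -> list[float]:
    """Row vector times matrix, i.e. a bias-free linear layer applied to `vec`."""
    dim = len(table[0])
    return [sum(vec[r] * table[r][c] for r in range(len(table))) for c in range(dim)]


def conditioning_vector(command: str, light: str) -> list[float]:
    """Concatenate the one-hot command and one-hot traffic light state (hypothetical layout)."""
    cmd = one_hot(COMMANDS.index(command), len(COMMANDS))
    tl = one_hot(LIGHT_STATES.index(light), len(LIGHT_STATES))
    return cmd + tl


if __name__ == "__main__":
    rng = random.Random(0)
    # Randomly initialized 4 x 3 weight table: one row per command.
    table = [[rng.uniform(-1.0, 1.0) for _ in range(3)] for _ in COMMANDS]
    idx = COMMANDS.index("LEFT")
    looked_up = embedding_lookup(idx, table)
    multiplied = one_hot_times_table(one_hot(idx, len(COMMANDS)), table)
    print(all(math.isclose(a, b) for a, b in zip(looked_up, multiplied)))  # True
    print(conditioning_vector("LEFT", "RED"))  # [0.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0]
```

In a real network the concatenated vector would be fed alongside the image features; where exactly it enters the model is a design choice this sketch does not assume.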

Testing in Town01 vs Town02

So far we have only been training our model in Town01 in CARLA and testing it in a different town, Town02. To check whether the model could benefit from a larger amount of data, we compared its performance when tested in Town01 versus Town02. As expected, and as shown in the table below, the model performs significantly worse in the unseen environment. One conclusion drawn from this experiment is that a model trained exclusively on Town01 data overfits to that particular environment: while it performs well in familiar surroundings, its performance degrades in unseen or novel situations. To improve the model’s generalization to different environments, we must therefore consider expanding the training data to include various towns, roads, and scenarios.
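If the training data is expanded to more towns, the cross-town test stays meaningful only as long as the held-out town never leaks into training. Below is a minimal sketch of a town-level split, assuming each recorded episode is tagged with the CARLA map it came from. The `Episode` record and the helper names are hypothetical, not part of our codebase or of CARLA's API.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class Episode:
    """One recorded driving episode (hypothetical record; only `town` matters here)."""
    episode_id: str
    town: str  # CARLA map name, e.g. "Town01"


def split_by_town(
    episodes: Iterable[Episode], test_towns: Iterable[str]
) -> tuple[list[Episode], list[Episode]]:
    """Hold out whole towns so that no test town ever contributes training data."""
    held_out = set(test_towns)
    train: list[Episode] = []
    test: list[Episode] = []
    for ep in episodes:
        (test if ep.town in held_out else train).append(ep)
    return train, test


def towns_in(episodes: Iterable[Episode]) -> Counter:
    """Count episodes per town, useful for checking the split's coverage."""
    return Counter(ep.town for ep in episodes)


if __name__ == "__main__":
    # Hypothetical episode list: the current Town01 data plus extra training towns.
    episodes = [
        Episode("e0", "Town01"),
        Episode("e1", "Town01"),
        Episode("e2", "Town02"),
        Episode("e3", "Town03"),
        Episode("e4", "Town04"),
    ]
    train, test = split_by_town(episodes, test_towns=["Town02"])
    print(towns_in(train))
    print(towns_in(test))
    # Sanity check: training and test towns must be disjoint.
    assert not set(towns_in(train)) & set(towns_in(test))
```

Splitting by town rather than by random episode is the point of the design: a random split would put Town02 frames in both sets and hide exactly the generalization gap observed above.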

The “Halting” Problem

One of the persistent challenges we encountered this week is what we term the “halting” problem. This issue is characterized by the agent suddenly stopping in the middle of a task, getting stuck in a particular state, and being unable to continue on its course. To understand this problem more deeply, we began to analyze the specific circumstances under which halting occurs. We made the following observations:

- Although this issue can surface in a variety of situations, it is most commonly observed when the vehicle is navigating turns at intersections.

- While the vehicle is immobilized, we noted that the throttle value hovers close to 0.0, whereas the brake value often exceeds 0.9. Interestingly, when the agent perceives another vehicle passing by, it releases the brake and begins to accelerate. However, this short-lived acceleration fails to significantly alter the vehicle’s position, leaving it stagnant once more.
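To collect halting cases systematically rather than by watching replays, the observations above can be turned into a simple log filter. This is a minimal sketch, assuming per-step logs of throttle, brake, speed, and whether the vehicle is waiting at a red light (where stopping is legitimate). The `Frame` record, `find_halts`, and every threshold except the 0.9 brake value are hypothetical. In CARLA, throttle and brake would typically be read from the `carla.VehicleControl` the agent applies each step.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Frame:
    """Per-step log entry (hypothetical layout; field names are assumptions)."""
    throttle: float  # in [0, 1]
    brake: float  # in [0, 1]
    speed: float  # m/s
    at_red_light: bool = False  # legitimate stops should not count as halts


def is_halted(f: Frame, brake_min: float, throttle_max: float, speed_max: float) -> bool:
    """One frame matches the observed pattern: braking hard, no throttle, not moving."""
    return (
        not f.at_red_light
        and f.brake > brake_min
        and f.throttle <= throttle_max
        and f.speed <= speed_max
    )


def find_halts(
    frames: list[Frame],
    brake_min: float = 0.9,
    throttle_max: float = 0.05,
    speed_max: float = 0.1,
    min_frames: int = 20,
) -> list[tuple[int, int]]:
    """Return [start, end) index ranges of halted runs lasting at least `min_frames` steps."""
    halts: list[tuple[int, int]] = []
    start: int | None = None
    for i, f in enumerate(frames):
        if is_halted(f, brake_min, throttle_max, speed_max):
            if start is None:
                start = i  # a new halted run begins
        else:
            if start is not None and i - start >= min_frames:
                halts.append((start, i))
            start = None
    # Close a run that is still open at the end of the log.
    if start is not None and len(frames) - start >= min_frames:
        halts.append((start, len(frames)))
    return halts


if __name__ == "__main__":
    moving = Frame(throttle=0.6, brake=0.0, speed=5.0)
    stuck = Frame(throttle=0.0, brake=0.95, speed=0.0)
    log = [moving] * 5 + [stuck] * 25 + [moving] * 3
    print(find_halts(log))  # [(5, 30)]
```

Because any throttle burst ends a run, the short-lived acceleration triggered by a passing vehicle would split one long halt into nearby segments; merging segments separated by only a few frames is one possible refinement.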

The image below illustrates two typical instances of the “halting” issue, where the vehicle becomes stuck without apparent cause.
