Project Introduction

The goal of this project is to develop a set of robot manipulation exercises of increasing complexity for JdeRobot Robotics Academy users. With these exercises, users can gain a better understanding of how a manipulation task is carried out, which mainly involves perception, planning and control. They can also become more familiar with some popular robotics frameworks (ROS, Gazebo and MoveIt) and some well-known open-source computer vision libraries (OpenCV and PCL).

Initial Goal and Progress

The initial goal of this project was to release four exercises: classical pick and place tasks without and with vision assistance, picking from warehouse racks with an industrial mobile manipulator, and cooperation between an AGV and a fixed manipulator to pick objects from a conveyor belt and deliver them to a target position.

However, considering the limitations and difficulties of manipulation simulation that we encountered over the last three months, we finally decided to develop three industrial robotics exercises during the GSoC period. The final results are detailed in the following sections.

Contributions

Three new industrial robotics exercises have been released in JdeRobot Robotics Academy: Pick and Place, Machine Vision and Mobile Manipulation.

Documentation: user manuals for the three exercises, including introduction, installation guidance, API and GUI introduction, theory, hints and solution demonstration videos

Update in Industrial Robot Repo:

assets: worlds and Gazebo models

industrial_robots: robot simulation packages

rqt_industrial_robots: rqt-based GUI for the three exercises

Update in JdeRobot Robotics Academy Repo: worlds, launch files and script files of the three exercises

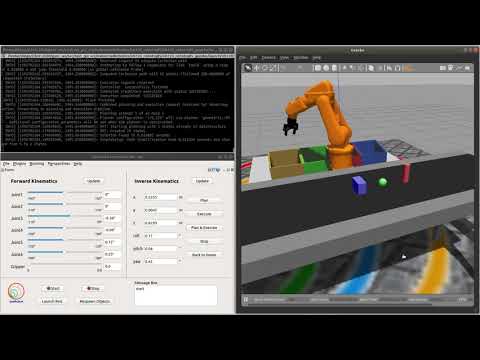

Exercise one: Pick and Place

Task: Pick objects from a conveyor and place them in the box of the same color. The shape, color and pose of the objects and obstacles are known.

Robot: IRB120

Gripper: robotiq85 (two-finger mechanical gripper)

Sensor: None

Tutorial

Demonstration Video:

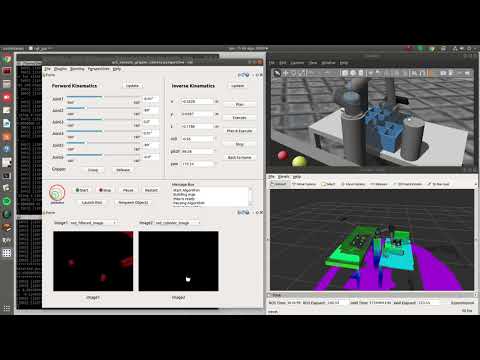

Exercise two: Machine Vision

Task: Pick objects from a conveyor and place them in a box. The shape and color of the objects are known. The pose of the objects should be detected using color and shape filters. The obstacles should be detected from the point cloud.

Robot: UR5

Gripper: vacuum gripper (self-made)

Sensor: Kinect camera
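
The exercise itself relies on PCL for filtering. As a rough, library-free illustration of the color-filter idea in the task above, the sketch below keeps the points whose hue is close to a target color and uses their centroid as a position estimate. The point layout (x, y, z, r, g, b), the function names filter_by_hue and centroid, and the threshold values are all assumptions made for illustration; they are not part of the exercise API.

```python
import colorsys
from typing import List, Optional, Sequence, Tuple

# Hypothetical point layout: x, y, z in meters, r, g, b in 0-255.
Point = Tuple[float, float, float, int, int, int]


def filter_by_hue(
    points: Sequence[Point],
    hue_center: float,
    hue_tol: float = 0.05,
    min_sat: float = 0.4,
    min_val: float = 0.2,
) -> List[Point]:
    """Keep points whose HSV hue lies within hue_tol of hue_center.

    Hue is expressed in [0, 1) as returned by colorsys. Points with low
    saturation or brightness are dropped so that grey and dark
    surfaces are not mistaken for a colored object.
    """
    kept = []
    for x, y, z, r, g, b in points:
        h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
        diff = abs(h - hue_center)
        diff = min(diff, 1.0 - diff)  # hue is circular: 0.99 is close to 0.0
        if diff <= hue_tol and s >= min_sat and v >= min_val:
            kept.append((x, y, z, r, g, b))
    return kept


def centroid(points: Sequence[Point]) -> Optional[Tuple[float, float, float]]:
    """Return the mean x, y, z of the points, or None if there are none."""
    if not points:
        return None
    n = len(points)
    return (
        sum(p[0] for p in points) / n,
        sum(p[1] for p in points) / n,
        sum(p[2] for p in points) / n,
    )


# Tiny synthetic cloud: two red points, one green point, one grey point.
cloud = [
    (1.0, 0.0, 0.5, 255, 0, 0),
    (3.0, 2.0, 0.5, 200, 10, 10),
    (9.0, 9.0, 9.0, 0, 255, 0),
    (5.0, 5.0, 5.0, 128, 128, 128),
]
red_points = filter_by_hue(cloud, hue_center=0.0)
red_position = centroid(red_points)
```

In a real pipeline the centroid would only give a coarse position; orientation and exact size would still need a shape-based step on the filtered points.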

Tutorial

Demonstration Video:

Exercise three: Mobile Manipulation

Task: Pick objects from a conveyor and place them on trays on other conveyors. The shape, color and pose in the world frame of the objects and obstacles are known.

Robot: MMO-500 (mobile manipulator from Neobotix, combining an AGV and a UR5)

Gripper: Modular Grasper (three-finger mechanical gripper from Shadow Robot Company)

Sensor: laser

Tutorial

Demonstration Video:

Future work

I plan to keep working with JdeRobot Academy to improve the industrial robot exercises. Possible next steps for these exercises are listed below.

Improve grasping stability:

Currently, I am using a modified gazebo_grasp_fix plugin for the mechanical grippers and gazebo_ros_vacuum_gripper for the vacuum gripper. The modified gazebo_grasp_fix plugin slightly improves the chance of a successful grasp, but the object still often oscillates while it is being grasped. The gazebo_ros_vacuum_gripper plugin remains unstable for manipulation unless the object is small and light, because it only applies a force and does not attach the object to the gripper as gazebo_grasp_fix does. Adding the ability to fix the object to the gripper link in this plugin might help a lot.

More complex worlds and objects:

In these three exercises, all objects have a single color and a regular shape (box, sphere or cylinder). The Gazebo world and the obstacles are also simple. Once the grasping, perception and planning functionalities are improved, more complex worlds and objects, closer to real industrial applications, could be used in future exercises.

Include more machine vision methods:

The machine vision exercise uses a color filter and a shape filter in PCL, which can only deal with single-color objects of spherical or cylindrical shape. More machine vision methods could be used, such as point cloud registration in PCL and some 2D detection methods in OpenCV.

Integrate perception, navigation and manipulation:

Currently, the mobile manipulation exercise only integrates navigation and manipulation because the implemented perception function is still limited. Once the perception side is ready to use, we can implement more realistic mobile manipulation tasks.

Update to ROS2, Navigation2 and MoveIt2:

In ROS2, the navigation package Navigation2 and the motion planning library MoveIt2 are the latest versions of their respective projects. The improved functionality and features in these packages would probably improve the behavior of the industrial robot exercises.
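
To make the shape filter mentioned under the machine vision item above more concrete, here is a minimal sketch of fitting a sphere to 3D points with an algebraic least-squares fit. This is not the PCL implementation used in the exercise (PCL provides its own model-fitting tools); it is a pure-Python illustration, and the function names solve_linear and fit_sphere are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def solve_linear(a: Sequence[Sequence[float]], b: Sequence[float]) -> List[float]:
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [list(row) + [rhs] for row, rhs in zip(a, b)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("degenerate point set (for example, coplanar points)")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):  # back substitution
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_sphere(points: Sequence[Vec3]) -> Tuple[Vec3, float]:
    """Fit center (a, b, c) and radius r to the points.

    Uses the linear form x^2 + y^2 + z^2 = 2ax + 2by + 2cz + d with
    d = r^2 - a^2 - b^2 - c^2, solved through the normal equations.
    """
    rows = [(2.0 * x, 2.0 * y, 2.0 * z, 1.0) for x, y, z in points]
    rhs = [x * x + y * y + z * z for x, y, z in points]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(4)] for i in range(4)]
    atb = [sum(r[i] * v for r, v in zip(rows, rhs)) for i in range(4)]
    a, b, c, d = solve_linear(ata, atb)
    radius = math.sqrt(d + a * a + b * b + c * c)
    return (a, b, c), radius


# Six points on a sphere centered at (1, 2, 3) with radius 0.5.
samples = [
    (1.5, 2.0, 3.0), (0.5, 2.0, 3.0),
    (1.0, 2.5, 3.0), (1.0, 1.5, 3.0),
    (1.0, 2.0, 3.5), (1.0, 2.0, 2.5),
]
center, radius = fit_sphere(samples)
```

A plain least-squares fit like this is sensitive to outliers, so on real sensor data it would normally be wrapped in a robust scheme such as RANSAC and applied only to points that already passed the color filter.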