Convert Pointcloud to image

The pcl_ros package provides a convert_pointcloud_to_image node that converts a PointCloud2 message directly to an Image message, but it requires an organized pointcloud, meaning that each point in the pointcloud corresponds to one image pixel. However, what we need to convert is a filtered pointcloud that contains only the points related to certain features, such as color or shape. In the end I wrote two converters: one converts a PointXYZRGB pointcloud to an RGB image, and the other converts a PointXYZ pointcloud to a depth image.
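One way to convert an unorganized pointcloud to an image is to project each point through a pinhole camera model and keep the nearest point per pixel. The sketch below illustrates that idea in plain Python; it is not the converter code from this project. The function name `project_cloud` and the intrinsics `fx`, `fy`, `cx`, `cy` (with their toy values) are assumptions, and the points are assumed to be expressed in the camera optical frame.

```python
import math


def project_cloud(points, width, height, fx, fy, cx, cy):
    """Project unorganized (x, y, z, r, g, b) points into RGB and depth images.

    Assumes the camera optical frame: z forward, x right, y down.
    Pixels that receive no point stay black with infinite depth.
    """
    rgb = [[(0, 0, 0)] * width for _ in range(height)]
    depth = [[math.inf] * width for _ in range(height)]
    for x, y, z, r, g, b in points:
        if z <= 0.0:
            continue  # point is behind the camera
        u = int(round(fx * x / z + cx))  # image column
        v = int(round(fy * y / z + cy))  # image row
        inside = 0 <= u < width and 0 <= v < height
        if inside and z < depth[v][u]:
            # Keep only the closest point that lands on this pixel.
            depth[v][u] = z
            rgb[v][u] = (r, g, b)
    return rgb, depth


# Hypothetical toy data: two points on the optical axis and one to the right.
points = [
    (0.0, 0.0, 1.0, 255, 0, 0),
    (0.0, 0.0, 2.0, 0, 255, 0),  # same pixel, farther away, so it is hidden
    (0.1, 0.0, 1.0, 0, 0, 255),
]
rgb, depth = project_cloud(points, width=8, height=6,
                           fx=10.0, fy=10.0, cx=4.0, cy=3.0)
```

For a PointXYZ cloud the same loop applies with only the depth image kept. A real node would likely use numpy arrays and the intrinsics from the camera's CameraInfo message instead of hard-coded values.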

Both the original pointcloud from the Kinect camera and the color-filtered pointcloud are in PointXYZRGB format. The final pointcloud from the shape segmentation filter is in PointXYZ format. Currently I run all the filters, both the color filter and the shape segmentation filter, at the same time, so performing every conversion would be too slow. To get a better visualization result, I only convert the final pointclouds that pass both the color and shape filters to Image messages, which can then be visualized in our GUI. Users can choose which topic they would like to see with the combo boxes.

Improve pick and place with vacuum gripper

Improve vacuum gripper

There were two issues with the vacuum gripper: limited force, and objects slipping off easily when the robot arm moves too fast. For the first issue, I added some grippers to try to increase the force, but there is still no obvious improvement. For the second issue, I rotated some of the grippers to apply more horizontal force to the grasped object. With this change, the robot arm can move at a reasonable speed (last week's speed was too slow) when the end effector is vertical to the ground, or when the path is smooth and the orientation angle is not too large. However, when the arm suddenly changes its direction of motion, which happens a lot, objects still drop off the gripper.

Improve motion planning

Last week I added some joint constraints to limit the orientation of the end effector. They help a little: at least we no longer get wild trajectories with unnecessary up-and-down motion. This week I finally succeeded in adding orientation constraints that keep the end effector vertical to the ground within a small angular offset, but with this constraint MoveIt fails to find a path in over 50% of placing attempts. To raise the planning success rate, I had to increase the orientation constraint tolerance. In addition, collision avoidance is not perfect either: the grasped object sometimes collides with the obstacles because the planned path passes too close to them.
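To make the tolerance trade-off concrete, the sketch below computes how far an end effector orientation tilts away from pointing straight down and compares that tilt to a tolerance. It is only a conceptual check, not MoveIt's constraint implementation. It assumes the tool z-axis is the approach axis and that quaternions are given as (x, y, z, w); the function names are hypothetical.

```python
import math


def tilt_from_vertical(qx, qy, qz, qw):
    """Angle in radians between the tool z-axis and world 'down' (0, 0, -1)."""
    norm = math.sqrt(qx * qx + qy * qy + qz * qz + qw * qw)
    qx, qy, qz, qw = qx / norm, qy / norm, qz / norm, qw / norm
    # World z-component of the rotated tool z-axis
    # (bottom-right entry of the rotation matrix).
    z_axis_z = 1.0 - 2.0 * (qx * qx + qy * qy)
    # Dot product with (0, 0, -1), clamped against rounding error.
    cos_tilt = max(-1.0, min(1.0, -z_axis_z))
    return math.acos(cos_tilt)


def within_tolerance(quaternion, tolerance_rad):
    """True if the tool axis is within tolerance_rad of pointing straight down."""
    return tilt_from_vertical(*quaternion) <= tolerance_rad


# A 170 degree rotation about x leaves the tool axis 10 degrees off vertical.
half = math.radians(170.0) / 2.0
tilted = (math.sin(half), 0.0, 0.0, math.cos(half))
strict = within_tolerance(tilted, math.radians(5.0))
relaxed = within_tolerance(tilted, math.radians(15.0))
```

Here the pose fails the 5 degree check and passes the 15 degree one. A wider tolerance admits more poses, so the planner has more room to find a path, at the cost of letting the gripper tilt further. Rotation about the vertical axis does not change the tilt, so this check leaves it free.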

I also tried some other methods and have some ideas about improving the performance.

- Changing the collision models from spheres and cylinders to boxes helps a lot. This is what I did last week. Without this modification, it is hard even to pick the objects up. I also tried making the collision models thinner, which doesn’t help.

- Changing the inverse kinematics solver might help. The TRAC-IK solver seems to have a higher success rate when planning trajectories, but I also get many more controller failures with the paths planned using this solver. I have not been able to resolve these failures yet.

- Wait for improvements in MoveIt, or try MoveIt 2, to get better planning performance. There is a related ongoing GSoC project called "Motion planning with general end-effector constraints in MoveIt", and I am looking forward to its results.

- Improve the vacuum gripper plugin. If the plugin could attach the object link to the end effector link, as the grasp plugin does, we would no longer need to worry about slipping or dropping.

These are possible improvements, but they require more time to test. Given the time limit, I would prefer to write down these problems and possible solutions and move forward.

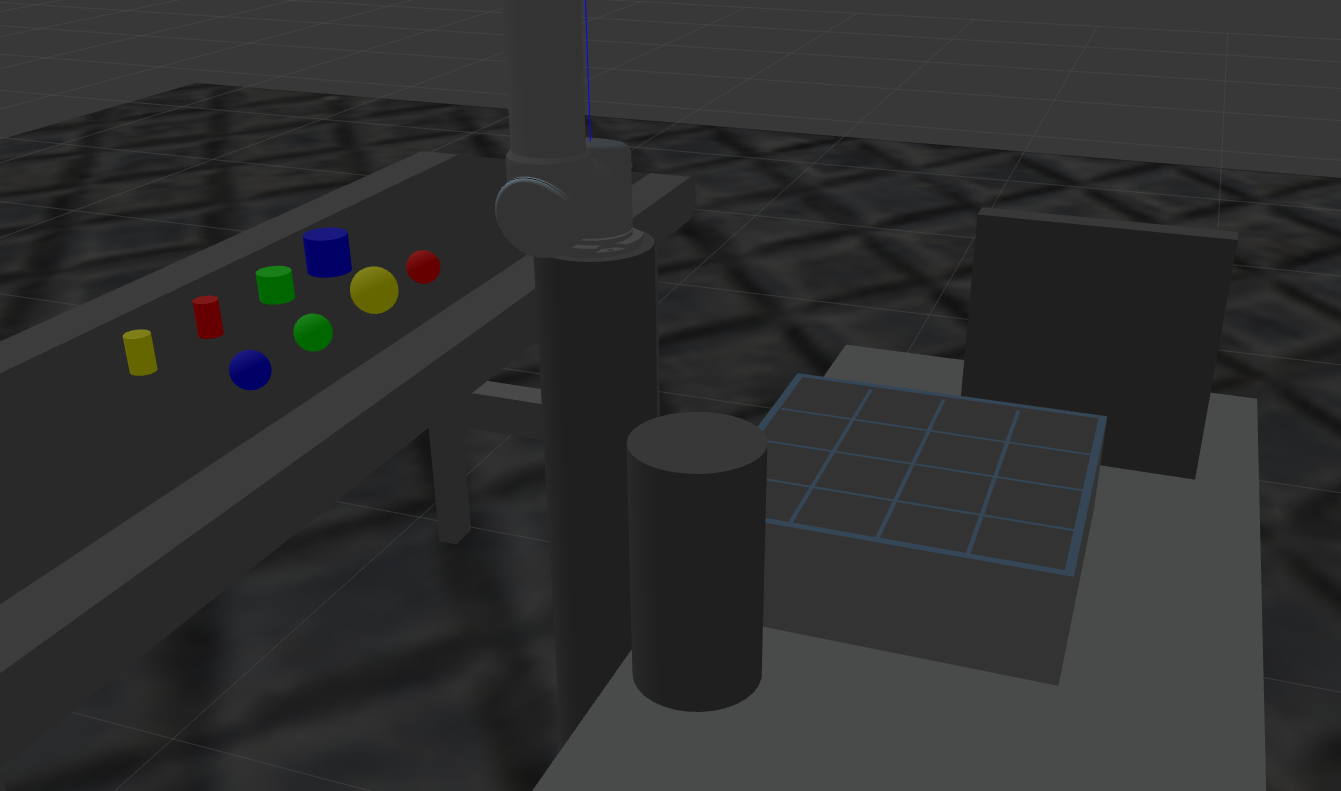

Assign obstacles

Based on the current behavior of the vacuum gripper, I modified the position and size of the obstacles so that planned paths avoid large up-and-down motions while obstacle avoidance remains visible. With this setup, if the robot doesn’t know where the obstacles are, it will definitely collide with the cylinder or the side of the board. After building the map with obstacles, MoveIt also doesn’t need to plan a path that lifts the robot arm over the obstacles.

Publish exercise one to official repo

I restructured the code of the two exercises so that the MyAlgorithm.py file, which is provided for students to implement their algorithm, is fully separated from the other packages. Currently, the structure of the industrial robot exercises is as follows.

IndustrialRobot repo:

- exercise_one
  - gazebo-pkgs
  - abb_experimental
  - robotiq_85_gripper
  - irb120_robotiq85
- exercise_two
  - pcl_filter
  - universal_robot
  - ur5_gripper_demo
- industrial_robot
  - launch
  - models
- rqt_industrial_robot
  - resources
  - scripts
  - src
  - perspectives

Exercise folder:

- exercise_one
  - MyAlgorithm.py
  - pick_and_place.py
- exercise_two
  - MyAlgorithm.py
  - pick_and_place.py

Inside the IndustrialRobot repo, the exercise_one and exercise_two folders contain the important dependency code for each exercise. The industrial_robot folder contains the Gazebo models shared by the exercises and the final launch files that start them. The rqt_industrial_robot folder contains the GUI code.

The exercise folder and the exercise documentation will be placed alongside the other exercises in the JdeRobot Academy repo.

Two Pull Requests have been made in these two repos to upload the first exercise: Pick and Place.