Object Detection with PCL

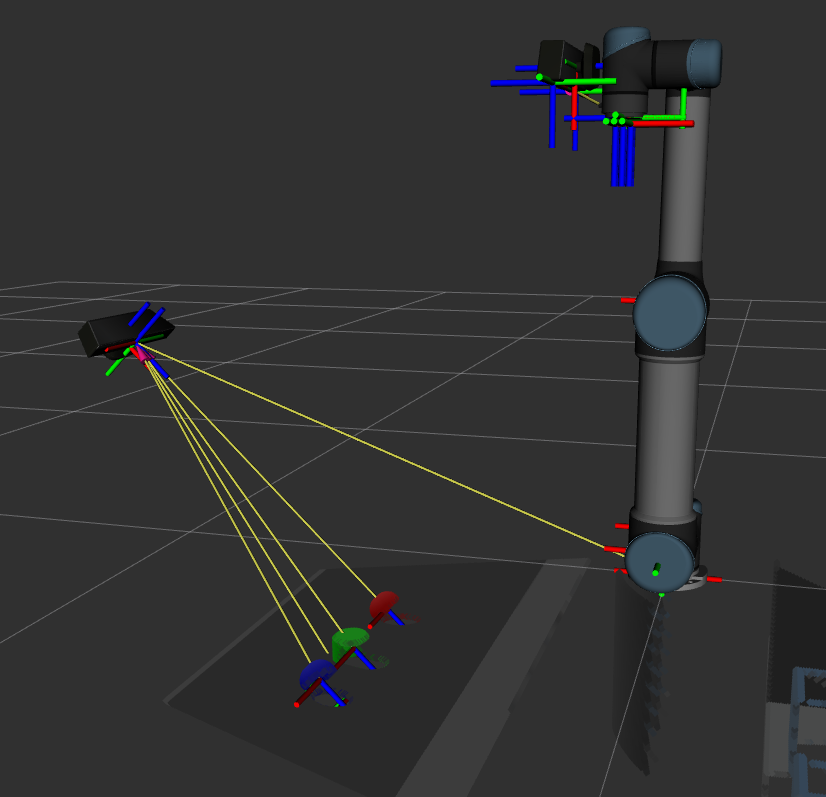

Three objects are used for the detection test: a blue ball, a red ball, and a green cylinder. The input is the point cloud from the fixed Kinect camera. Using the color filter and shape segmentation functionality in PCL, the center and size of the two balls can be detected accurately (less than 1 cm error). For the cylinder, the height cannot be detected, but a point on the cylinder's center axis is returned. The position of each ball center and of the point on the cylinder axis is broadcast to tf. This part is implemented in C++. Our main Python class can then get the object positions from tf.
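As an illustration of the Python side, here is a minimal sketch of reading one object position back from tf. It uses the ROS 1 `tf.TransformListener.lookupTransform` call; the frame names `blue_ball` and `world`, the node name, and both function names are placeholders assumed for this example, not the names used in the project.

```python
def lookup_object_position(listener, object_frame, base_frame, stamp):
    """Return (x, y, z) of object_frame expressed in base_frame.

    `listener` is expected to behave like tf.TransformListener, whose
    lookupTransform(target, source, time) returns (translation, rotation).
    """
    translation, _rotation = listener.lookupTransform(base_frame, object_frame, stamp)
    return tuple(translation)


def main():
    # ROS imports are kept local so the helper above can be tested without ROS.
    import rospy
    import tf

    rospy.init_node("object_position_reader")  # hypothetical node name
    listener = tf.TransformListener()
    rate = rospy.Rate(1.0)
    while not rospy.is_shutdown():
        try:
            # rospy.Time(0) asks tf for the latest available transform.
            position = lookup_object_position(
                listener, "blue_ball", "world", rospy.Time(0))  # assumed frame names
            rospy.loginfo("blue_ball at %s", str(position))
        except (tf.LookupException, tf.ConnectivityException,
                tf.ExtrapolationException):
            # The detector may not have broadcast this frame yet.
            pass
        rate.sleep()
```

Calling `main()` inside a running ROS environment would log the position once per second; the helper itself only needs an object with a `lookupTransform` method.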

One limitation of this detection method is that the camera has to be close to the objects so that enough points represent each object. That is the reason why I moved the fixed Kinect. If the object is too small or too far away, too few points fall on it and PCL cannot detect the sphere or cylinder in the point cloud.
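A rough way to see why distance matters is to estimate how many depth pixels land on a ball. The sketch below assumes a pinhole camera with a 640-pixel-wide image and a 57° horizontal field of view (nominal figures often quoted for the first-generation Kinect, not values measured in this project) and approximates the ball's silhouette as a disc. The function name is made up for illustration.

```python
import math


def estimate_points_on_sphere(radius_m, distance_m,
                              image_width_px=640, horizontal_fov_deg=57.0):
    """Approximate number of depth pixels covering a sphere.

    Assumes a pinhole camera and distance_m much larger than radius_m,
    so the silhouette is close to a disc. Camera defaults are assumptions.
    """
    # Focal length in pixels from the image width and horizontal field of view.
    focal_px = (image_width_px / 2.0) / math.tan(math.radians(horizontal_fov_deg) / 2.0)
    # Radius of the projected disc in pixels.
    radius_px = focal_px * radius_m / distance_m
    return math.pi * radius_px ** 2


if __name__ == "__main__":
    for distance in (0.5, 1.0, 2.0):
        count = estimate_points_on_sphere(0.03, distance)
        print("distance %.1f m: about %d points" % (distance, count))
```

Under this model the point count falls with the square of the distance, so doubling the distance leaves only a quarter of the points for the shape fit.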

Obstacle Detection and Avoidance

How can the robot and the objects be kept separate from the other obstacles in the environment?

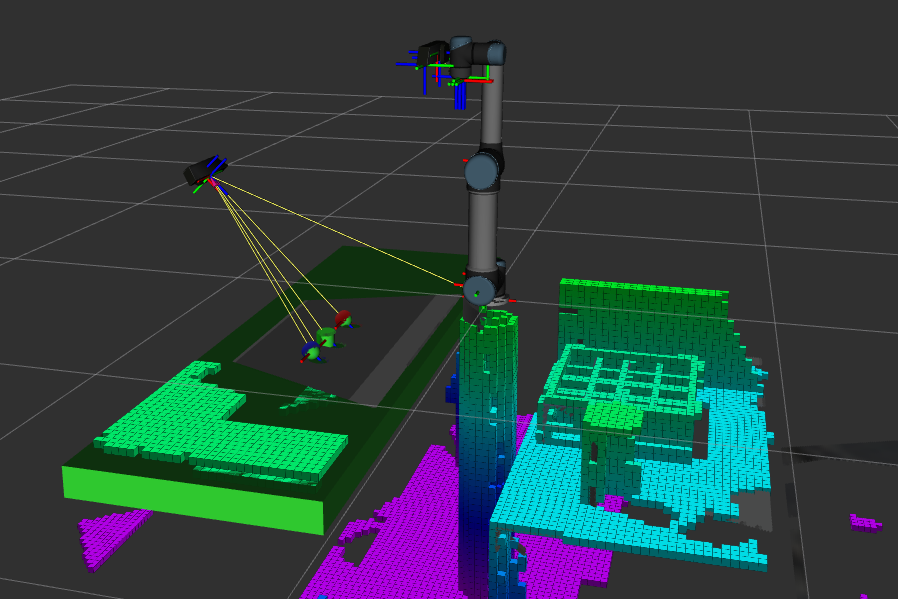

Instead of using the octomap server as I did last week, I switched to occupancy_map_monitor/PointCloudOctomapUpdater, which updates the octomap from the Kinect mounted at the end of the robot arm. Obstacles are added directly to the planning scene. With this plugin, the robot itself is not treated as an obstacle. If object models are added to the planning scene, the space around them is not included in the octomap.
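For reference, this plugin is normally configured through an entry in the `sensors` list that MoveIt loads into the move_group namespace, usually from a YAML file. The sketch below writes such an entry as a Python dictionary. The parameter names follow the MoveIt perception tutorial; the topic name, all values, and the function name are placeholders, not the settings used in this project.

```python
def build_octomap_sensor_config(point_cloud_topic, max_range=2.0):
    """Return one `sensors` entry for the point cloud octomap updater.

    All values are illustrative assumptions and need tuning per setup.
    """
    return {
        "sensor_plugin": "occupancy_map_monitor/PointCloudOctomapUpdater",
        "point_cloud_topic": point_cloud_topic,
        "max_range": max_range,           # ignore points farther than this (m)
        "point_subsample": 1,             # use every point of the cloud
        "padding_offset": 0.1,            # padding used when filtering out the robot (m)
        "padding_scale": 1.0,             # scale factor for the same padding
        "max_update_rate": 1.0,           # octomap updates per second
        "filtered_cloud_topic": "filtered_cloud",
    }


if __name__ == "__main__":
    # Hypothetical topic name for the arm-mounted Kinect.
    entry = build_octomap_sensor_config("/arm_kinect/depth/points")
    for key in sorted(entry):
        print("%s: %s" % (key, entry[key]))
```

The padding parameters control how generously points near the robot's own links are removed from the cloud, which is what keeps the arm from showing up as an obstacle.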

Will objects be seen as obstacles if their models are added to the planning scene?

As I mentioned, object models in the planning scene are treated as obstacles, but this does not actually matter because the vacuum gripper does not need to make contact with them. A small distance is good enough.

Another advantage of adding the models to the planning scene is that they can be taken into account during planning. If they are not in the planning scene and not attached to the robot, MoveIt only checks collisions between the robot and the world, so the object being carried might collide with the world even if the robot arm itself is free of collision.

Which octomap resolution should be chosen?

Without the models, the part of the octomap representing the objects might be larger than their actual size, which prevents the gripper from grasping them, so a high resolution would be needed. However, the previous answer shows that we can add the models without any concern. After some tests, 2.5 cm seems to be a good resolution: it is not too time-consuming and is fine enough to represent the obstacles.
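To make the size inflation concrete, the following sketch computes, along one axis, the span of voxels that an object touches at a given resolution. It assumes voxel boundaries sit at integer multiples of the resolution and that every touched voxel is marked occupied; the function name and the example numbers are illustrative only.

```python
import math


def occupied_extent(lower_m, upper_m, resolution_m):
    """Span of the voxels touched by the interval [lower_m, upper_m].

    Assumes voxel boundaries at integer multiples of resolution_m.
    """
    first_boundary = math.floor(lower_m / resolution_m)
    last_boundary = math.ceil(upper_m / resolution_m)
    return first_boundary * resolution_m, last_boundary * resolution_m


if __name__ == "__main__":
    # A 6 cm wide object spanning 0.01 m to 0.07 m (made-up numbers).
    for resolution in (0.05, 0.025, 0.01):
        low, high = occupied_extent(0.01, 0.07, resolution)
        print("resolution %.3f m: occupied width %.3f m" % (resolution, high - low))
```

In the worst case the occupied span exceeds the real object by almost one voxel on each side, which is why a coarse map can leave no room for the gripper.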

How can we get a map of the whole surrounding environment?

Before starting to pick and place, we can rotate the robot arm to look around and build a map of the surrounding environment. I tried using the fixed Kinect camera at first, but some parts of the world could not be detected because of its limited field of view and occlusion.

Pick and Place with Vacuum Gripper

Spawning warnings and errors

The warning and error messages were finally removed by publishing a joint state that includes the state of the vacuum gripper joints. There are still some warnings and errors at startup, but once the robot has moved to the home pose and the GUI has shown up, no more warnings about the state problem appear.

Unstable grasping

When the vacuum gripper is turned on, a force along the gripper's center axis is applied to the object. Because this force is limited and directional, the object drops when its vertical component is smaller than gravity. Therefore, when the gripper is not perpendicular to the ground or the robot moves too fast, the object cannot be held by the gripper. To solve this problem, I added some path constraints and reduced the moving speed while an object is grasped. However, the path constraints cannot be very strict, otherwise no motion plan can be found, so objects sometimes still drop because of the gripper orientation. The moving speed is also a bit too slow, so a run takes longer.
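The orientation limit can be estimated with a simple static model. If the suction force F acts along the gripper axis and the axis is tilted by an angle θ from vertical, the vertical component is F·cos θ, so the object stays attached only while F·cos θ ≥ m·g. The sketch below solves this for the largest tilt. It ignores friction and acceleration, and the force and mass values are made up for illustration.

```python
import math


def max_tilt_deg(suction_force_n, mass_kg, gravity=9.81):
    """Largest tilt from vertical (degrees) at which the object is still held.

    Static model only: requires suction_force_n * cos(tilt) >= mass_kg * gravity.
    Returns None when the force cannot hold the object even when vertical.
    """
    weight_n = mass_kg * gravity
    if suction_force_n < weight_n:
        return None
    return math.degrees(math.acos(weight_n / suction_force_n))


if __name__ == "__main__":
    # Hypothetical 0.1 kg object and a range of suction forces.
    for force in (0.5, 1.0, 2.0, 5.0):
        print("force %.1f N -> max tilt %s deg" % (force, max_tilt_deg(force, 0.1)))
```

A bound like this could guide how loose the orientation tolerance in the path constraints can be; motion adds inertial forces on top of the weight, which is consistent with slower moves helping.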

Improvements for exercise one

- keep big logo for exercise 1 and use small logo for exercise 2

- update gui when running algorithm

- add API to print message in message box

- pause button

- speed up GUI opening

- return object info separately

- remove extra light

- place objects farther apart